A web browser made from scratch in Rust with a custom HTTP Client, HTML+CSS parser and renderer, and JS interpreter.

Changes

-

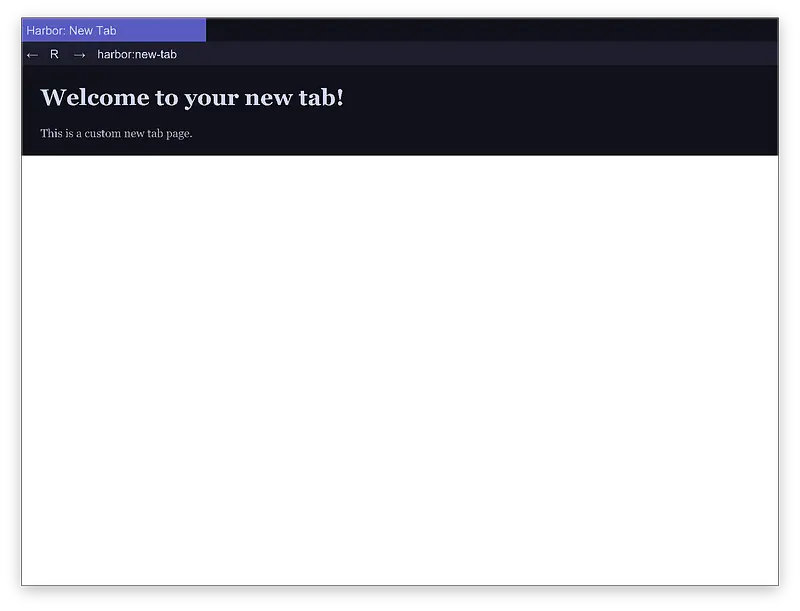

f170cd5: I added a custom New Tab page that is displayed on the internal scheme

harbor:and is set as the default New Tab page. - 251e781: I wanted to add back, forward and refresh buttons to the toolbar, so I spent this commit making space for those buttons in the toolbar and declaring offsets for them.

- 1a46593: Added in the actual buttons to the toolbar and made the forward and back buttons functional. There is a small bug where if I click a link, I can then click the back button infinitely many times. idk why this is happening, but I’m guessing it’s something to do with a race condition. I’m calling it a feature not a bug.

-

b2eece2: Made the refresh button functional. btw, the refresh button’s icon is just the character

Rand not a refresh icon because the font I’m using doesn’t have a refresh icon. -

81b58b6: Added some keybinds:

Cmd + Tfor new tab,Opt + Tabfor switching to the next tab,Opt + Shift + Tabfor switching to the previous tab. -

e2f3a22: I wanted to make pages for errors so the browser doesn’t just crash when something goes wrong. In doing that I tried to make a global stylesheet for all my builtin pages, but it didn’t work because I didn’t implement relative URLs (hmm if only i saw this coming). So I got relative URLs working and made

!importantbe respected. - 69fcf94: Styles were being resolved weirdly under the new refactor, so I fixed that by just resolving everything once more after the declarations are initially handled and use specificity rules.
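Choosing a winner among matching declarations in the cascade comes down to comparing a key. The sketch below is a minimal illustration, assuming a hypothetical `Declaration` type that already carries its specificity and source position. It ignores cascade origins (user agent vs. author), which real CSS also weighs, especially for `!important`.

```rust
/// One declaration that matched an element (hypothetical shape, not Harbor's actual type).
#[derive(Debug, Clone)]
struct Declaration {
    property: String,
    value: String,
    important: bool,
    specificity: (u32, u32, u32), // (ids, classes/attributes/pseudo-classes, types)
    source_order: usize,          // position across all stylesheets, later = larger
}

/// Pick the winning value for `property`: `!important` beats normal declarations,
/// then higher specificity wins, then the later declaration wins.
/// Tuples compare lexicographically and `false < true`, so one key covers all three rules.
fn winning_value<'a>(decls: &'a [Declaration], property: &str) -> Option<&'a str> {
    decls
        .iter()
        .filter(|d| d.property == property)
        .max_by_key(|d| (d.important, d.specificity, d.source_order))
        .map(|d| d.value.as_str())
}
```

Packing the rules into a single sort key keeps the "resolve once more after the declarations are handled" pass simple: it is just a `max` per property.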

Next Steps

- Add more builtin pages

- Track history and make a page for that


Changes

- bed7f9b: Made tabs in the tab bar clickable to switch between them. There’s a tiny bug where you can press between tabs and it still switches to a tab, but the gap is so small that I couldn’t be bothered to fix it.

- 7e5a03e: Added support for fetching stylesheets from the `<link>` tag in HTML documents. I'm pretty sure this has a bug that means relative paths won't work. I haven't tested it, but I know I didn't implement them, so they probably don't work.

- d8ce0a4: Made the tabs in the tab bar resize automatically with the number of tabs open.

- bb139f9: Added the basic address bar display that shows the URL of the current page. It doesn’t do anything yet, but it’s there.

- 491f59b: Added editing capabilities to the address bar. You can click on it and type, and press enter to navigate to the URL you typed. It doesn’t do any error handling or anything, so if you type something that’s not a valid URL it will just crash the browser. But it works for valid URLs.
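Resizing tabs with the tab count and hit-testing clicks can share one piece of geometry. This is a sketch under assumed names (`TabBar`, `max_tab_width`, `gap` are hypothetical): tabs split the bar width equally up to a cap, and a click that lands in the gap between two tabs returns `None` instead of switching, which is the edge case from bed7f9b.

```rust
/// Hypothetical tab-bar geometry: tabs share the bar width equally, capped at
/// `max_tab_width`, with a fixed `gap` between neighbours.
struct TabBar {
    x: f32,
    width: f32,
    max_tab_width: f32,
    gap: f32,
}

impl TabBar {
    /// Width of each tab when `count` tabs are open.
    fn tab_width(&self, count: usize) -> f32 {
        if count == 0 {
            return 0.0;
        }
        let total_gaps = self.gap * (count as f32 - 1.0);
        ((self.width - total_gaps) / count as f32).min(self.max_tab_width)
    }

    /// Index of the tab under `mouse_x`, or `None` for a click in a gap or past the last tab.
    fn tab_at(&self, mouse_x: f32, count: usize) -> Option<usize> {
        let w = self.tab_width(count);
        let stride = w + self.gap;
        let rel = mouse_x - self.x;
        if rel < 0.0 || stride <= 0.0 {
            return None;
        }
        let index = (rel / stride) as usize;
        let offset_in_stride = rel - index as f32 * stride;
        if index < count && offset_in_stride < w {
            Some(index)
        } else {
            None
        }
    }
}
```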

Next Steps

- Add forward and back buttons to the address bar and make them functional.
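Back and forward buttons need some per-tab history. One common model, sketched here with a hypothetical `History` type, keeps a list of URLs plus a cursor: going back or forward only moves the cursor, and following a link drops the forward entries. With this shape, back simply stops at the first entry, so it can't be pressed forever.

```rust
/// A minimal per-tab history (hypothetical; not Harbor's actual type).
/// `entries[index]` is the current page.
struct History {
    entries: Vec<String>,
    index: usize,
}

impl History {
    fn new(start: &str) -> Self {
        History { entries: vec![start.to_string()], index: 0 }
    }

    /// Following a link discards any "forward" entries, then pushes the new URL.
    fn navigate(&mut self, url: &str) {
        self.entries.truncate(self.index + 1);
        self.entries.push(url.to_string());
        self.index += 1;
    }

    /// URL to load when going back, or `None` if already at the first entry.
    fn back(&mut self) -> Option<&str> {
        if self.index == 0 {
            return None;
        }
        self.index -= 1;
        Some(self.entries[self.index].as_str())
    }

    /// URL to load when going forward, or `None` if already at the newest entry.
    fn forward(&mut self) -> Option<&str> {
        if self.index + 1 >= self.entries.len() {
            return None;
        }
        self.index += 1;
        Some(self.entries[self.index].as_str())
    }
}
```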


Changes

- 3da9e0a: Made the `TabData` struct store the tab's document and layout objects (well, pointers to them) so layouts can be reused when switching between tabs. This was a nice performance improvement.

- 93374d8: Made the tab bar render the document's title from its `<title>` element (falling back to the URL if there is no title). You can now actually tell that there are multiple tabs open.

- a43551a: Played around with the colors in the tab bar. Looks nicer now, but the colors are still random, so I have to find some actually good colors to use. Also made the color of the currently active tab different from the others so you can easily see which tab is active.

- 1c096af: Updated color scheme to be from Catppuccin Mocha (mostly). It’s very pretty now!

Next Steps

- idk

Note

I hate that the Catppuccin accent colors (blue, red, etc.) contrast so poorly with the text color that they're genuinely unreadable. I had to use a reskinned version of the Surface 2 color to get a nice active-tab color that still contrasts well with the text.


Changes

- 7a60c5f, 0828283: I realized that when many people do something a certain way, it’s usually because that’s the best way to do it. So, I refactored my dumbass structures to not be stupid.

-

28c7e93: The browser only supported URLs with

httpandhttpsscheme so I added thefilescheme too since it wasn’t too much work. I can now open local files in a more modern-browser way kinda way. -

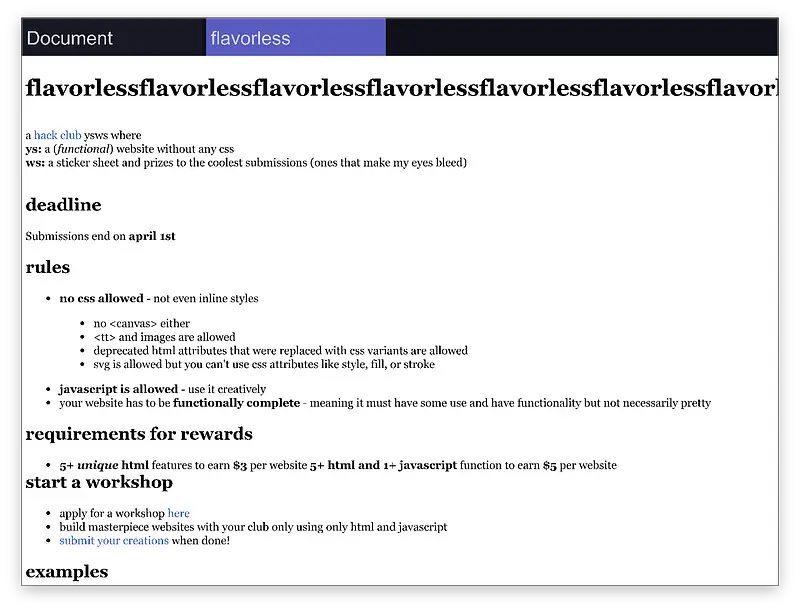

ecb0f93: Added basic support for tabs. And by basic I mean clicking

-opens a background tab with URLhttps://flavorless.hackclub.com/. You can navigate between tabs using the number keys. Obviously, this is all temporary stuff just to get a MVP. - 76a9adc: Added a little bar rendered at the top of the screen which will go on to become the tabs bar. Took a while to get my objects to scroll behind the bar instead of going on top of it.

Next Steps

- Make tabs actually be a presentable feature.

- Think about working on javascript again.

Changes

- 19d406f: Refactored the tokenizer to use constants instead of hardcoded ASCII character values. Code is a lot more readable now.

- 550ad1f: Added tokenization of numerics, but only integers for now. Floats next.

- bfce508: Added tokenization of floats as I said I would. Wrote some tests for it as well.

- 0e72d9b: Added tokenization of string literals. Both single- and double-quoted strings are supported. Escape characters are somewhat supported, I think? I remember getting ragebaited trying to implement them, and idk if I ended up finishing them or not.

- 425f63c: Added the structs required for parsing the tokens into an AST. There’s SO MANY.

-

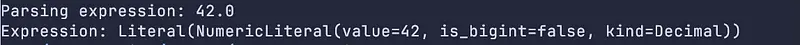

ff98632: Added the most basic parsing possible for identifiers and numerics. Just parsing into

PrimaryExpressionfor now, but it’s a start. - 48a9b11: Copied over the Zig structs into my Rust codebase. This took a while and was pretty tedious. Nothing much interesting, just adapting structs to be Rust-y.

-

4a55934: Finally called the parser from the Rust side. Added some

Debugimpls to make it easier to see the output. It’s definitely working!
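The numeric tokenizer itself lives in Zig, but the scanning logic is the same in any language. Below is a Rust sketch of integer and float scanning, assuming a hypothetical `scan_numeric` helper over raw bytes. It deliberately leaves out exponents, hex/octal/binary prefixes, `_` separators and BigInts.

```rust
#[derive(Debug, PartialEq)]
enum NumericToken {
    Integer(u64),
    Float(f64),
}

/// Scan a decimal numeric literal starting at `start` (hypothetical helper).
/// Returns the token and the index just past it. Handles `123`, `1.5` and `.5`;
/// a trailing `1.` is returned as `Integer(1)`, leaving the `.` for the caller.
fn scan_numeric(src: &[u8], start: usize) -> Option<(NumericToken, usize)> {
    let mut i = start;
    while i < src.len() && src[i].is_ascii_digit() {
        i += 1;
    }
    let int_end = i;
    let mut is_float = false;
    // Only treat `.` as a decimal point when a digit follows it.
    if i + 1 < src.len() && src[i] == b'.' && src[i + 1].is_ascii_digit() {
        is_float = true;
        i += 1;
        while i < src.len() && src[i].is_ascii_digit() {
            i += 1;
        }
    }
    if int_end == start && !is_float {
        return None; // not a numeric literal at all
    }
    let text = std::str::from_utf8(&src[start..i]).ok()?;
    let token = if is_float {
        NumericToken::Float(text.parse().ok()?)
    } else {
        // Integers too large for u64 yield None here; real JS treats every number as f64.
        NumericToken::Integer(text.parse().ok()?)
    };
    Some((token, i))
}
```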

Next Steps

- More advanced parsing.


(I keep procrastinating)

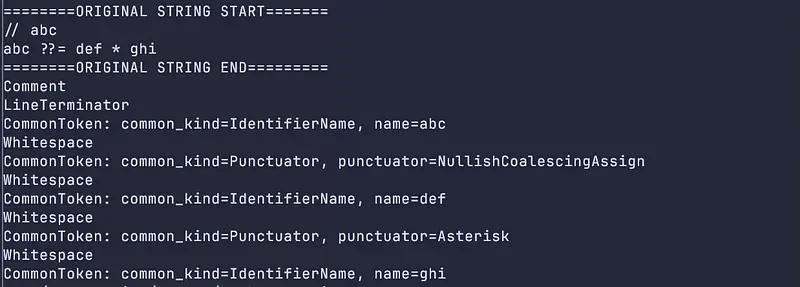

Changes

- e38e75bf: I started working on the JavaScript tokenizer. The docs are really unhelpful, at least compared to the HTML and CSS docs. I added whitespace, line terminators, comments and hashbang comments.

- 563b56d1, 93d2cfd8, 24c23fd9: I added tokenizing for identifiers, private identifiers and punctuators respectively. Also added a couple tests to make sure I’m not being dumb.

- 737be8b1, 1575e8b9: Linked up the functions and structures I defined in Zig to my Rust code.

- 1558578b: Forgot that memory allocated by Zig must also be freed by Zig, so I added a function to free the tokens and made sure to call it in Rust.

Next Steps

- I’ve started work on numeric literals, so I’ll finish that up and then move on to string literals.

- After that I’m probably gonna ignore template and regex literals for a while and move on to parsing and AST construction.

Note

I haven't devlogged in 3 days, mainly because of exams, but I've been working on this a lot too (11 commits since the last devlog). The progress just wasn't significant enough to warrant a devlog.

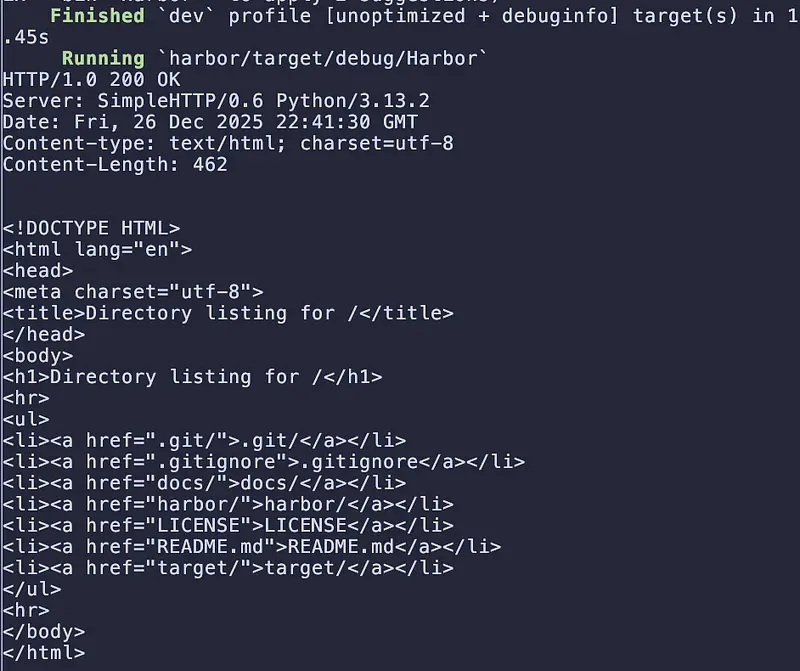

PS: Screenshot is taken from code executed by Rust


(I’m dumb)

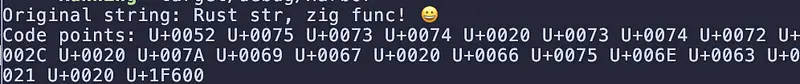

Changes

- a156097-8155f5f: I started work on JavaScript in C. The first commit was setting up the build system for integration with Rust, but by the last commit I got too frustrated with C and switched to Zig.

- 90a870c: Refactored the build system to work with Zig instead of C. Took a bit of playing around but was pretty simple.

- 1a19748: Added some of the source text functions as defined in the ECMA-262 specification. There’s like.. 4? idk i didn’t count

- 254dd1f: I added structures in Rust corresponding to the ones I defined in Zig as `extern struct`s. It's kind of annoying to call some of the functions. I might have to write some wrapper functions in Rust to make them easier to call.

Next Steps

- Write the tokenizer and parser

Changes

- 67d84d1: I implemented caching of glyphs in the font renderer, but idk how I feel about it because it has to create a buffer every time it renders a glyph. There’s definitely a better way to do this.

-

29a4fd5: Fixed the positioning of markers to use a

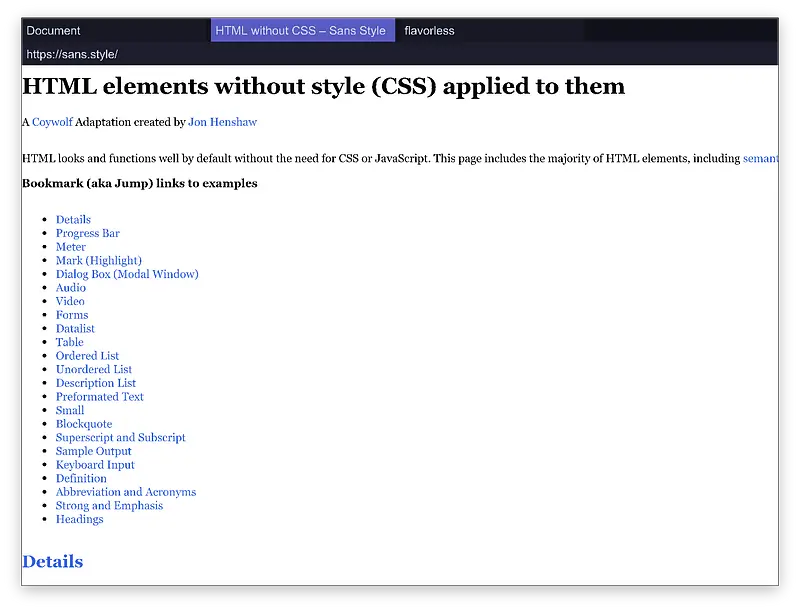

line-heightto calculate their position rather than content height. In the previous devlog video you can see the marker being in the middle of theli“no css allowed”, which is what I fixed. - 617e609: Added scrolling because having to resize the screen every time I wanted to click a link lower the in the page was getting annoying.

Next Steps

- Javascript i swear

Note

This was a pretty small devlog because it was a small update, but still something I wanted to share.


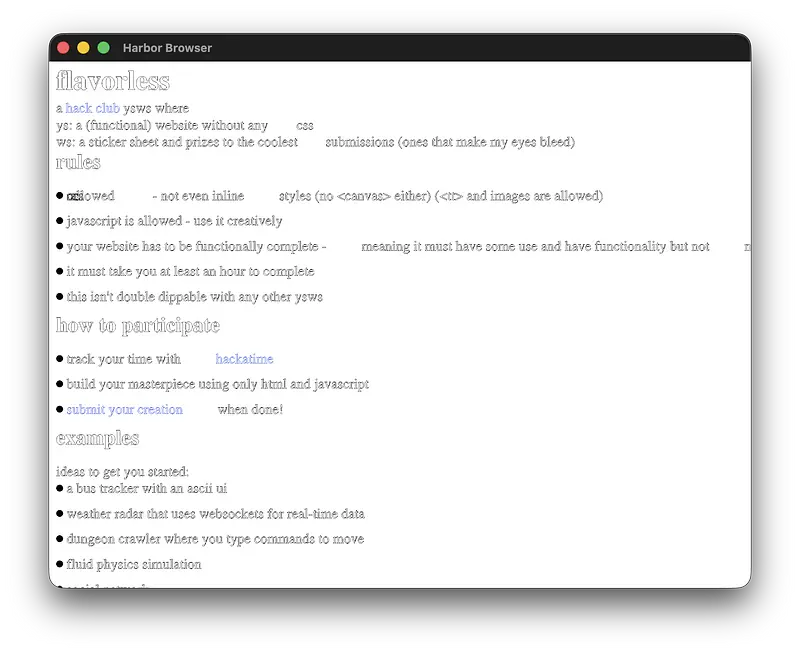

Changes

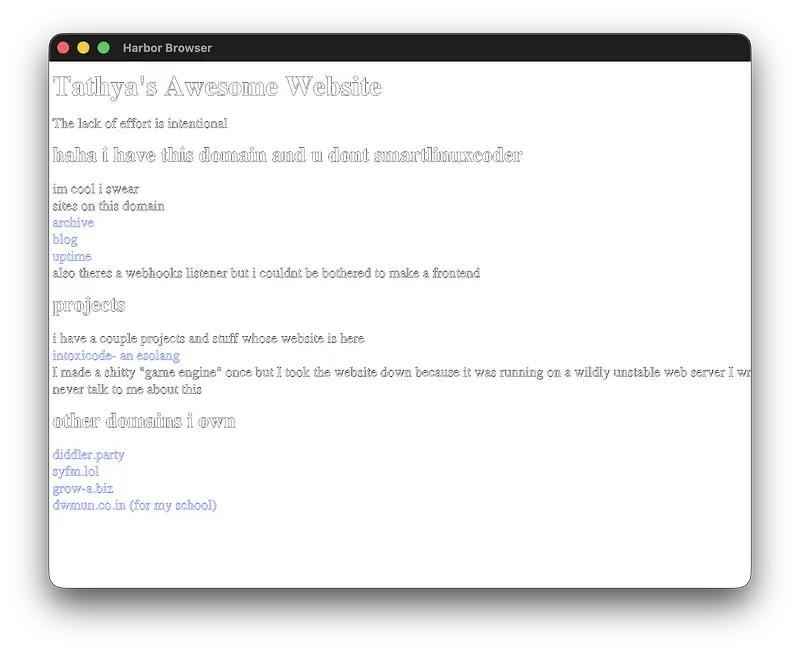

- d6bf1fc: I made links work by refactoring the User Agent structure to be a child of the App, and linked a callback which sends an event when a link is clicked. This event is then handled by the App to switch the page.

- ac52a84: The program was being annoyingly slow, so I ran it through flamegraph and found that most of the (reducible) time was being spent cloning text renderer objects. I refactored the code to use references instead of cloning, which sped up the program significantly.

Next Steps

- Javascript babyyyyyyy (Go or C++?)

Note

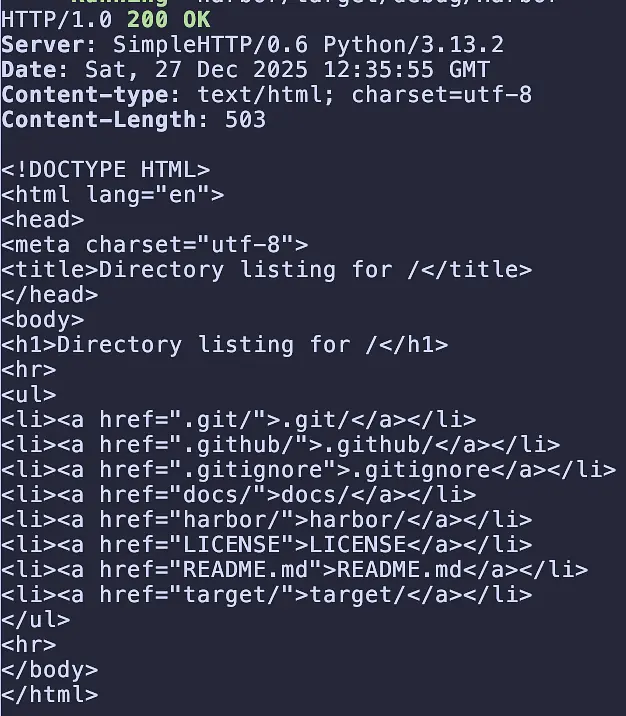

The site being rendered in the screenshot is the flavorless website, which links to sans style.


Changes

- fe65d19: I added some click detection for `<a>` tags. This was pretty simple because of my existing infra.

- 5327aa0: I added a struct called `Agent` which coordinates all the browser's jobs. This will make it easier to add things like navigation with links later (and a whole lot more that I'm not thinking about right now!).

- fc89b22: Added support for HTTP responses sent with `Transfer-Encoding: chunked`. Spent some time understanding how that even works, but it was a not-so-difficult implementation after that. I only found out this existed because I tried to render `example.com`, which uses chunked encoding.

- 5a95e92: Added some (basic) support for `vh` and `vw` units in CSS. This was kinda annoying because it meant a lot of refactoring of my functions to accept the viewport size. It's not even all that rewarding tbh. But whatever, it'll be useful later.
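For reference, a chunked body is a series of `<hex size>\r\n<data>\r\n` chunks ending with a zero-size chunk. The decoder below is a sketch of that format (the function name is hypothetical, not Harbor's code). It strips chunk extensions like `;name=value` and ignores any trailer headers after the last chunk.

```rust
/// Decode a `Transfer-Encoding: chunked` body into the raw payload.
fn decode_chunked(body: &[u8]) -> Result<Vec<u8>, String> {
    let mut out = Vec::new();
    let mut pos = 0;
    loop {
        // The size line ends at the next CRLF.
        let line_end = body[pos..]
            .windows(2)
            .position(|w| w == b"\r\n")
            .map(|p| pos + p)
            .ok_or("missing CRLF after chunk size")?;
        let line = std::str::from_utf8(&body[pos..line_end]).map_err(|e| e.to_string())?;
        // Drop optional chunk extensions such as `;name=value`.
        let size_hex = line.split(';').next().unwrap_or("").trim();
        let size = usize::from_str_radix(size_hex, 16).map_err(|e| e.to_string())?;
        pos = line_end + 2;
        if size == 0 {
            return Ok(out); // last chunk; trailers (if any) are ignored
        }
        let data_end = pos + size;
        if data_end + 2 > body.len() || &body[data_end..data_end + 2] != b"\r\n" {
            return Err("chunk data truncated or missing CRLF".to_string());
        }
        out.extend_from_slice(&body[pos..data_end]);
        pos = data_end + 2;
    }
}
```

For example, `4\r\nWiki\r\n5\r\npedia\r\n0\r\n\r\n` decodes to `Wikipedia`.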

Next Steps

- Link up the click detection to navigation in the browser.

- I swear to god the moment I’m done with navigation, I’m starting with JavaScript. Which language should I write the JS engine in though? I don’t really wanna use Rust, should I use C++ or Go?

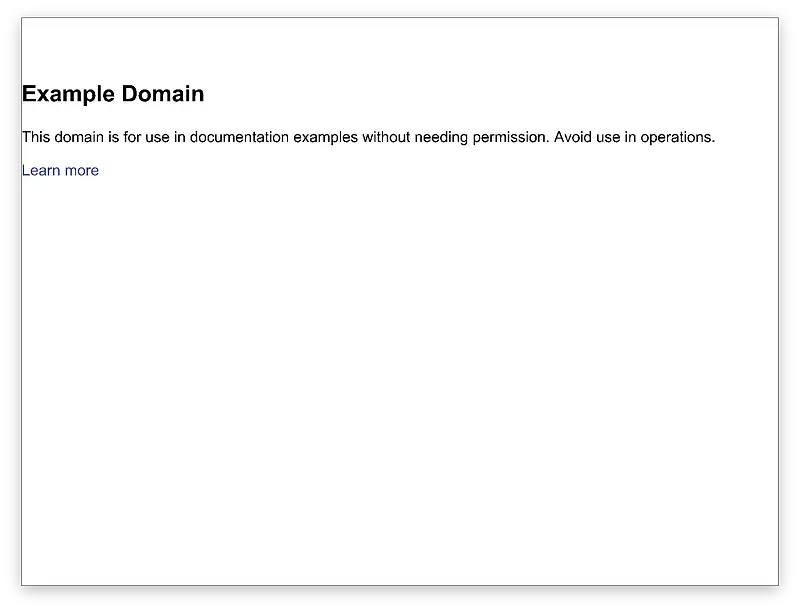

Note

The screenshot is my browser's attempt at rendering example.com. You'll notice that the text isn't centered properly. This is because they use `margin: 15vh auto`, where the `auto` is supposed to center the element horizontally. I haven't implemented that yet.

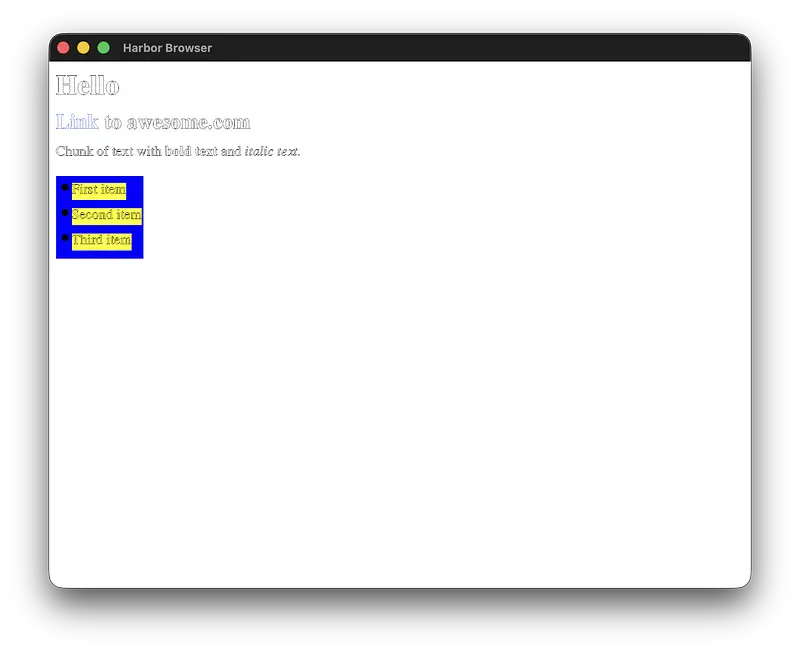

Changes

-

7759e07: In CSS, when something is styled:

h1 { font-size: 2em; margin: 1em; }, theemunit for margin is relative to the font size of the element itself, while forfont-size, it is relative to the font size of the parent element. My code however used only the parent element’s font size for both cases, which was incorrect. A big ass refactor of my value resolution code later, and this can now be respected. -

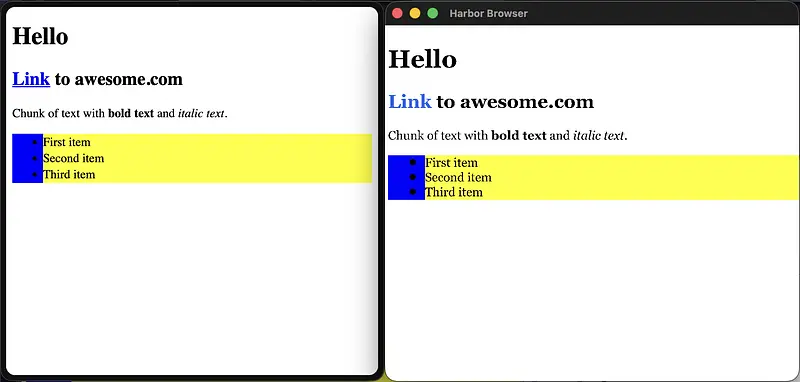

0a5dd89: Lots of bugfixing of layouts and margins because the refactor screwed up my code and introduced like a billion bugs. Also I fixed how

<ul>s (and specifically the<li>s inside) look, the result is almost indistinguishable from a modern browser!
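The `em` rule above boils down to choosing a different "em basis" depending on the property. This sketch uses hypothetical `Length` and `ResolveCtx` types and also covers `rem`, `vw` and `vh`, since they need the same kind of context (root font size and viewport size).

```rust
/// Lengths as parsed from CSS (hypothetical subset).
#[derive(Clone, Copy)]
enum Length {
    Px(f32),
    Em(f32),
    Rem(f32),
    Vw(f32),
    Vh(f32),
}

/// Context needed to turn a relative length into pixels.
struct ResolveCtx {
    parent_font_size: f32,
    root_font_size: f32,
    viewport: (f32, f32), // (width, height) in px
}

/// Resolve `len` to px, using `em_basis` as the size of 1em.
fn to_px(len: Length, em_basis: f32, ctx: &ResolveCtx) -> f32 {
    match len {
        Length::Px(v) => v,
        Length::Em(v) => v * em_basis,
        Length::Rem(v) => v * ctx.root_font_size,
        Length::Vw(v) => v * ctx.viewport.0 / 100.0,
        Length::Vh(v) => v * ctx.viewport.1 / 100.0,
    }
}

/// `font-size` resolves `em` against the parent's font size...
fn resolve_font_size(len: Length, ctx: &ResolveCtx) -> f32 {
    to_px(len, ctx.parent_font_size, ctx)
}

/// ...while other properties (like `margin`) use the element's own computed font size.
fn resolve_margin(len: Length, own_font_size: f32, ctx: &ResolveCtx) -> f32 {
    to_px(len, own_font_size, ctx)
}
```

With a 16px parent, `h1 { font-size: 2em; margin: 1em; }` gives a 32px font size and therefore a 32px margin, not 16px.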

Next Steps

- Antialiasing please I need this

Note

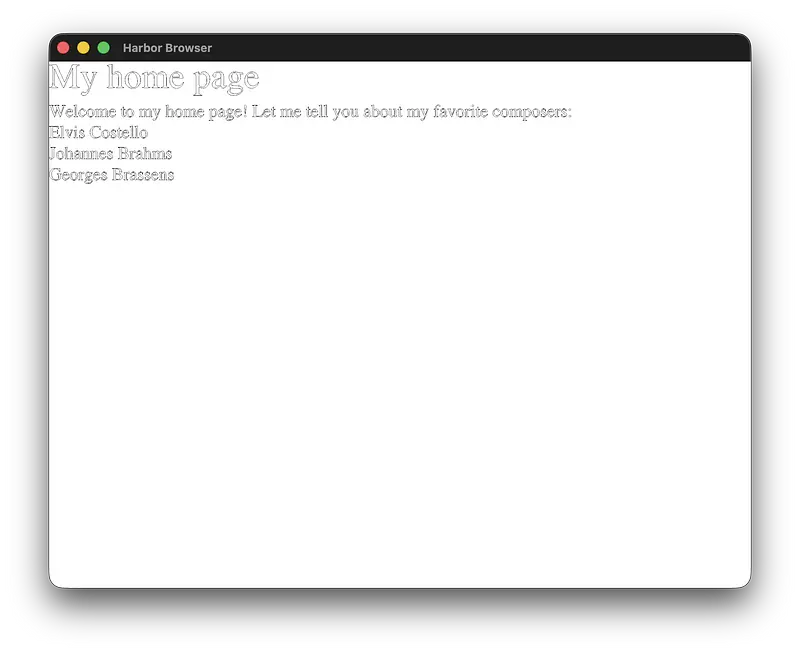

The attachment shows Zen (left) and Harbor (right) rendering assets/html/custom003.html. The only differences I can see are pixely text (I need antialiasing) and links not having underlines!

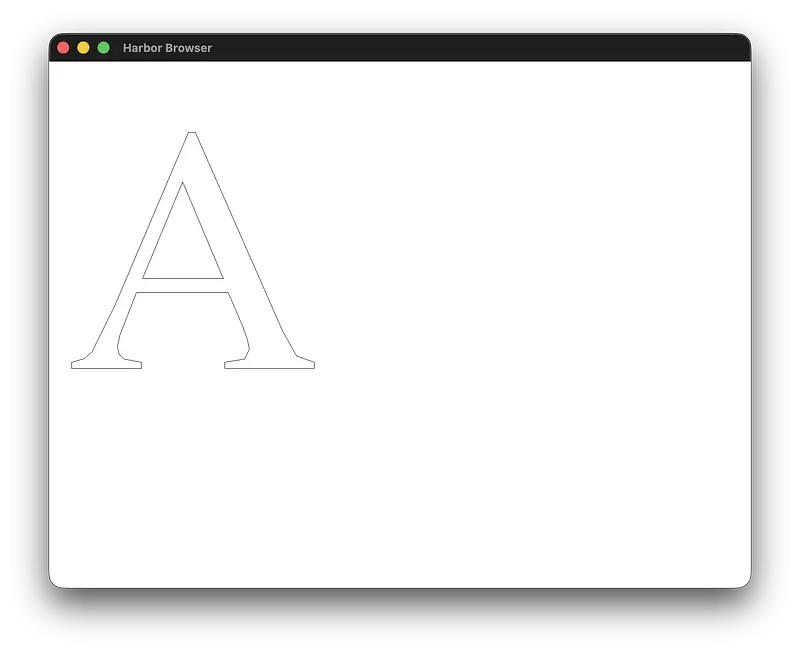

(As first promised 16 devlogs ago)

Changes

- 6eb1651: I implemented glyph filling for basic glyphs, i.e. glyphs with no curves (like `H`, `T`, `E`, etc.). Curved glyphs were giving weird artifacts when filled, so I had to leave them out.

- f704dab: Turns out the bug with curved glyphs was duplicated vertices after flattening Bézier curves. Removing the duplicates fixed the filling issue, so I can now fill glyphs without holes (like `S` and `C`, but not `O`, `A`, etc.).

- e324354: This was the most annoying bug to track down. I spent probably 2 hours convinced the bug was in my rendering pipeline with stencils, but it turned out my code wasn't generating triangles for holes because of their winding order. After fixing that, I can now fill glyphs with holes (like `O`, `A`, etc.).
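Both fixes above have small, well-known building blocks. The sketch below (helper names are hypothetical) removes consecutive duplicate vertices and uses the shoelace formula to get a contour's orientation. It assumes TrueType's convention that outer contours run clockwise and holes counter-clockwise in y-up font units, so a contour whose orientation differs from the outer one is a hole.

```rust
type Point = (f32, f32);

/// Remove consecutive duplicate vertices (including a closing point equal to the first),
/// which flattening adjacent curve segments tends to produce.
fn dedup_contour(points: &[Point]) -> Vec<Point> {
    let mut out: Vec<Point> = Vec::with_capacity(points.len());
    for &p in points {
        if out.last() != Some(&p) {
            out.push(p);
        }
    }
    if out.len() > 1 && out.first() == out.last() {
        out.pop();
    }
    out
}

/// Shoelace formula: positive for counter-clockwise, negative for clockwise (y-up).
fn signed_area(points: &[Point]) -> f32 {
    let n = points.len();
    let mut sum = 0.0;
    for i in 0..n {
        let (x0, y0) = points[i];
        let (x1, y1) = points[(i + 1) % n];
        sum += x0 * y1 - x1 * y0;
    }
    sum / 2.0
}

/// A contour wound opposite to the outer contour is a hole.
fn is_hole(contour: &[Point], outer: &[Point]) -> bool {
    (signed_area(contour) > 0.0) != (signed_area(outer) > 0.0)
}
```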

Next Steps

- Figure out how to implement antialiasing for filled glyphs

- Back to more CSS properties?

Note

I told you I’d eventually get around to filling glyphs :P (even if I am 16 devlogs and 20 days late)

Changes

- 6fc34c9: I added web-safe fonts: Arial, Helvetica, etc. and removed Times New Roman because apparently that’s not a web-safe font(??)

- 53305f8: I was facing some issues with the codebase using different font objects which was leading to inconsistent font rendering. So I made the layout functions require the font directory as an argument to ensure consistency.

-

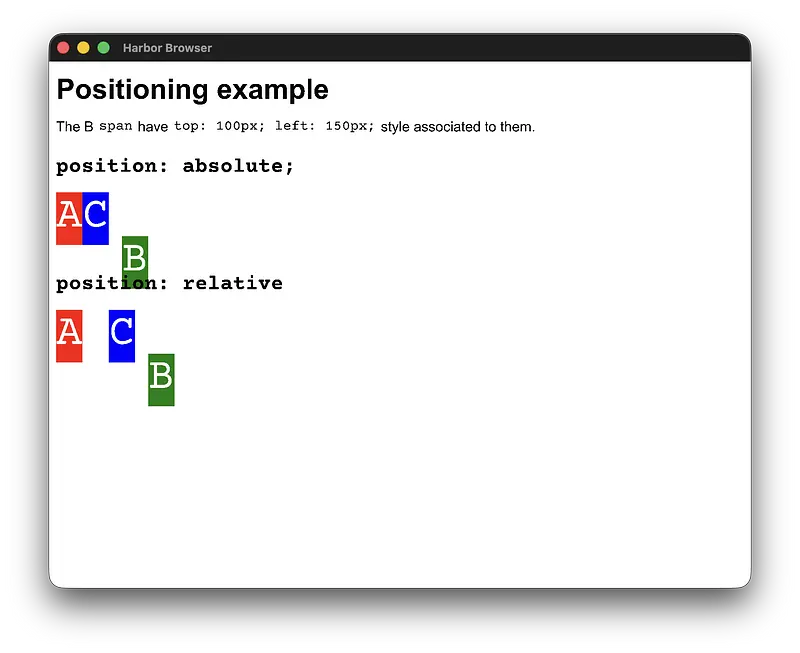

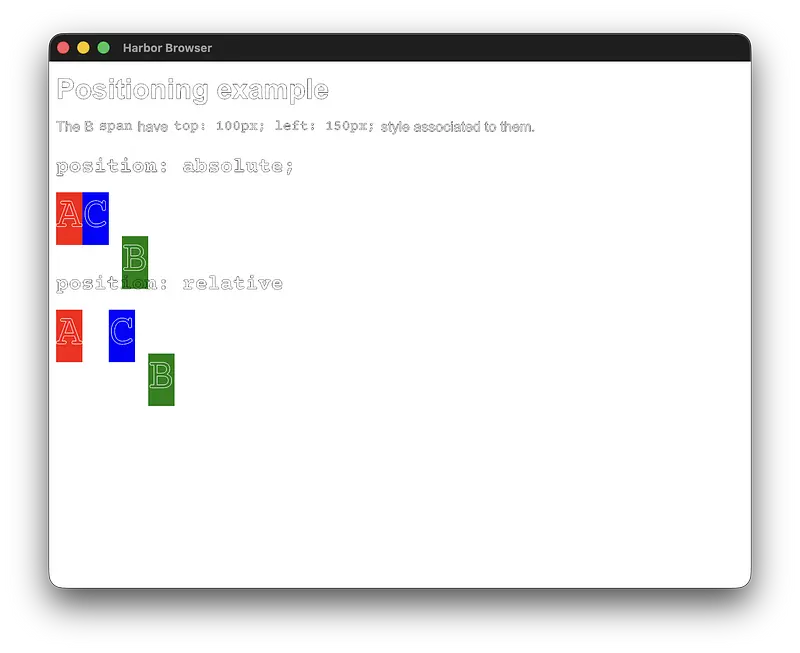

ec44c88: Added structures for handling the

positionproperty. Pretty basic. -

4be038a, 10983fd: Added

position: absolutesupport. This was a bit tricky because I had to deal with respecting position of different parents depending on the position property. -

1321f10: Added

position: relativesupport. This was easier than absolute positioning since I could just offset the element from its normal position.

Next Steps

- Make positioned elements be painted later than normal elements so that they appear on top.

Note

I’ve left out a few commits because between this devlog and the last there were ~12 commits that were mostly minor changes, bug fixes, etc. Also I feel like the browser is really starting to come together - the screenshot looks so good.


(Holy refactor)

Changes

- e2e8c8c: The bugs with layout from the last devlog were fixed by this commit. The bug with layouts was that it was displaying multiple consecutive spaces rather than collapsing them into a single space. I also removed some redundant parameters from the layout functions.

- bc810db: Turns out margins weren't being applied correctly because boxes were ignoring them. This commit fixed that, and I also added the `larger` and `smaller` size keywords for CSS.

- 2847abc: I refactored my entire text rendering system because it wasn't very well designed and probably would've been a nightmare to implement glyph filling onto. I couldn't explain all the bugs and changes I made in this commit, because there were so many. At one point my text was having an upside-down seizure where it was just jittering out of control (while upside-down?). But now everything is working perfectly.
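The whitespace fix in e2e8c8c is the `white-space: normal` behaviour of collapsing runs of whitespace. A minimal sketch (the function name is hypothetical) that maps any run of HTML whitespace (space, tab, LF, FF, CR) to one space:

```rust
/// Collapse each run of HTML whitespace into a single space.
fn collapse_whitespace(text: &str) -> String {
    let mut out = String::with_capacity(text.len());
    let mut in_space = false;
    for c in text.chars() {
        if matches!(c, ' ' | '\t' | '\n' | '\x0C' | '\r') {
            if !in_space {
                out.push(' ');
                in_space = true;
            }
        } else {
            out.push(c);
            in_space = false;
        }
    }
    out
}
```

Leading and trailing spaces survive as single spaces here on purpose; whether they are trimmed depends on where the text sits in the line, which is a separate decision.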

Next Steps

- Implement glyph filling?

Note

This was the most painful refactor I’ve done in my life. Wouldn’t wish it upon my worst enemy.


Changes

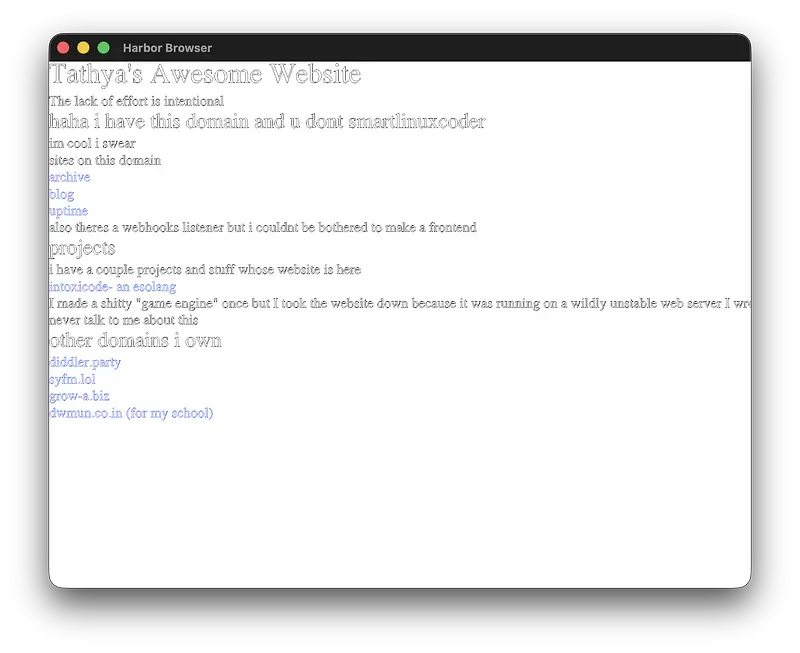

- 809d448: I was trying to render the flavorless website and it was refusing to work because I half-assed the HTML tree parser. So I added support for a couple more tags in the parser and completed a couple TODOs from the tokenizer (ts was a pain)

- ca4ca6f: I added support for `br` tags by adding a flag for whether or not the layout engine is requesting a line break. That works ok, but there seem to be a ton of other bugs that I need to fix (as you can probably tell from the screenshot).

Next Steps

- Fix as many bugs as possible from the screenshot

Note

This entire devlog was just me fixing my shit codebase :yay: also ts was a smaller devlog than what I normally post because I’m super tired today. Really could not be bothered to fix the bugs today before posting this devlog.


(YAY)

Changes

- 8ed25ab: I realized that `li`s were being positioned super incorrectly (the box was ~20px to the left of where it should be). I discovered this because I was trying to implement hovering and added a `background-color` to `li` when hovered, which showed that the box and the text were not aligned.

- 6e9f441: Made elements have associated styles rather than boxes, because boxes having associated styles makes hovering way more complicated to implement.

- 18d27e5: After a ton of debugging and almost rewriting my entire text rendering engine, I implemented proper hovering. This style:

```css
h1:hover {
  color: red;
}
```

now actually makes `h1`s red only on hover.

Next Steps

- Implement underlines?

- Make links work?

Note

This was a ton of work, the basis of hovering (detecting which elements are hovered) was pretty easy but applying styles only on hover was the bulk of this devlog.

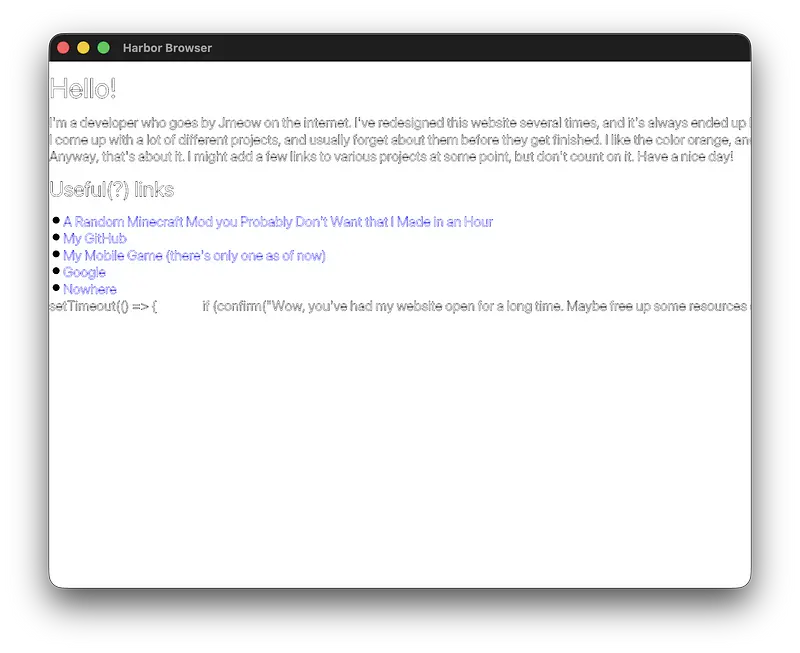

Changes

- eb86c45: My code was trimming all leading and trailing whitespace from HTML content, which caused things like `<p><a>Link</a> to something</p>` to be rendered without a space between "Link" and "to". I fixed it by trimming left or right whitespace only when the child is the first or last child, respectively.

- d49038f: I added margin collapsing, which is when the vertical margins of adjacent block elements collapse into a single margin. Also fixed a bug where items were never being popped from the parents stack.

- 2fee9db: I added the `list-item` display type, which is used for `li`s. This took SO long because I tried to make it once and it just refused to work for some reason. So I stashed all my changes and started over from scratch, and it worked after a while. I ran into a bug where bullets were being rendered as ellipses rather than circles, which I fixed by properly converting to clip space.
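The collapsing rule itself (CSS 2.1 §8.3.1) is compact: adjoining vertical margins become the largest positive margin plus the most negative one. A sketch with a hypothetical helper:

```rust
/// Collapse a set of adjoining vertical margins into one:
/// largest positive margin + most negative margin (each 0 if there is none).
fn collapse_margins(margins: &[f32]) -> f32 {
    let max_pos = margins.iter().copied().filter(|m| *m > 0.0).fold(0.0, f32::max);
    let min_neg = margins.iter().copied().filter(|m| *m < 0.0).fold(0.0, f32::min);
    max_pos + min_neg
}
```

So a `margin-bottom: 20px` followed by a `margin-top: 30px` produces a 30px gap, not 50px.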

Next Steps

- I really don’t know right now.

Note

The screenshot in this devlog is from jmeow.net! The last bit of JavaScript being rendered is because my browser doesn’t understand that script tags shouldn’t be rendered.


Changes

- 87a2cbc: I refactored text rendering to use `ttc`s instead of individual `ttf` data so that bold and italics are possible. With that in place, I implemented bold font weights.

- 49c4534: I added support for italics, and bold italics. I should probably add the `<b>` and `<i>` tags now.

- 4aeb201: Headings were feeling super cramped, so I added support for the `margin` CSS property. I also added basic support for the `margin-*` properties (`margin-top`, `margin-bottom`, `margin-left`, `margin-right`). I added these margins onto headings in `ua.css`. The style rule for `h1` now looks like this:

```css
h1 {
  display: block;
  font-size: 2em;
  font-weight: bold;
  margin: 0.67em 0;
}
```
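`margin: 0.67em 0` relies on the usual box-shorthand expansion of one to four values into top, right, bottom and left. A sketch of that expansion (the helper is hypothetical and keeps values as strings; a real parser would produce typed lengths):

```rust
/// Expand a box shorthand like `margin` into [top, right, bottom, left].
/// 1 value: all sides; 2: vertical horizontal; 3: top horizontal bottom; 4: clockwise.
fn expand_box_shorthand(value: &str) -> Option<[String; 4]> {
    let parts: Vec<&str> = value.split_whitespace().collect();
    let [top, right, bottom, left] = match parts.as_slice() {
        [all] => [*all, *all, *all, *all],
        [vertical, horizontal] => [*vertical, *horizontal, *vertical, *horizontal],
        [top, horizontal, bottom] => [*top, *horizontal, *bottom, *horizontal],
        [top, right, bottom, left] => [*top, *right, *bottom, *left],
        _ => return None,
    };
    Some([top.to_string(), right.to_string(), bottom.to_string(), left.to_string()])
}
```

The same function works for `padding` (and, with different value types, for `border-width`), which fits the "padding? border?" next step.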

Next Steps

- Implement `<b>` and `<i>` tags.

- Add support for more CSS properties (padding? border?).

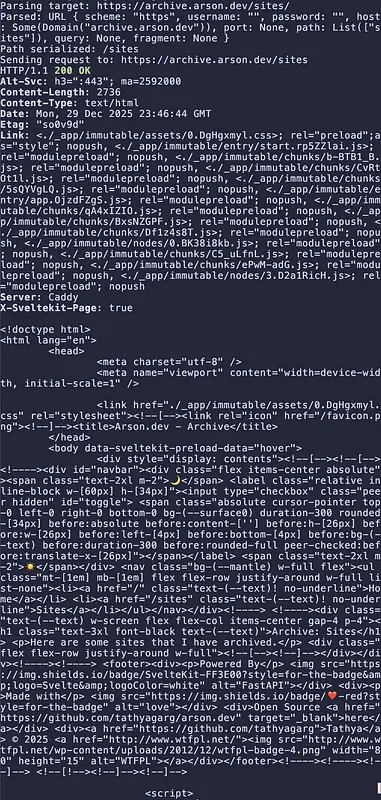

Changes

- 29f9e4c: I decided to start implementing the `font` properties in my CSS, so I started by creating the structures required for parsing a `font` declaration into an object.

- 6c09fe1: I used the structures I created to implement parsing of `font` declarations. This was pretty similar to how I implemented parsing for `background` declarations.

- 0014074: I remembered reading about User Agent stylesheets and decided to implement support for them in Harbor. So now `assets/css/ua.css` is the user agent stylesheet that Harbor uses by default.

- 800515f: Small fix to font size resolution so that it can now correctly resolve `rem` and `em` units. I realize as I'm writing this devlog that this means the `smaller` and `larger` keywords should also work with minimal implementation.

- 186f319: I added actual support for `display: block` and `display: inline`. Reworking the layout engine to support block and inline elements properly was really annoying because of how messy my code was (what the hell was I thinking when I wrote it??).

- 0b0f6c6: I forgot to implement system colors (`AccentColor`, etc.) in my color parser, so I added support for those.

Next Steps

- Implement text decoration?

Note

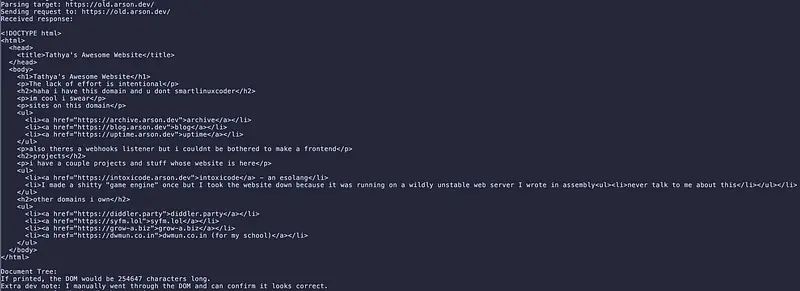

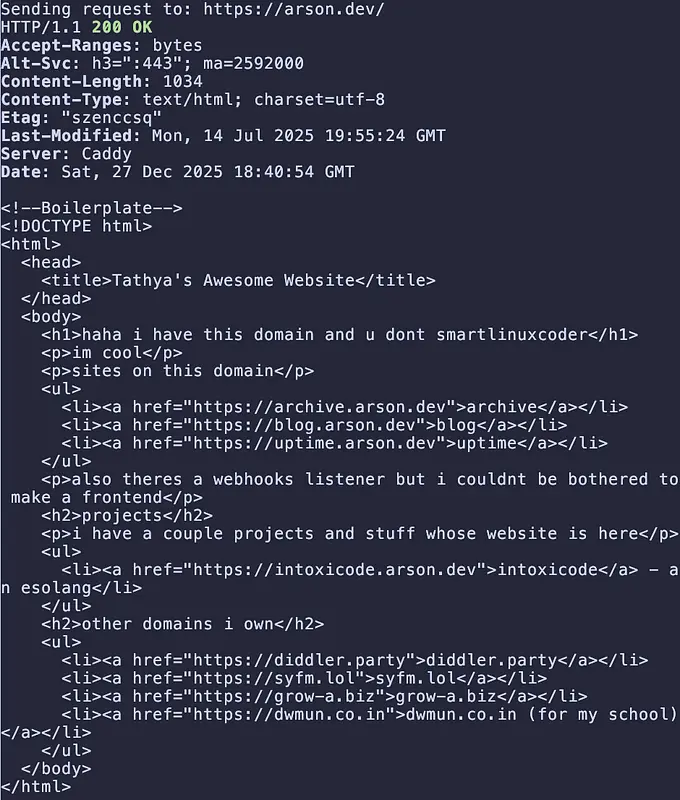

The site being rendered in the screenshot is old.arson.dev, which is my old personal website.


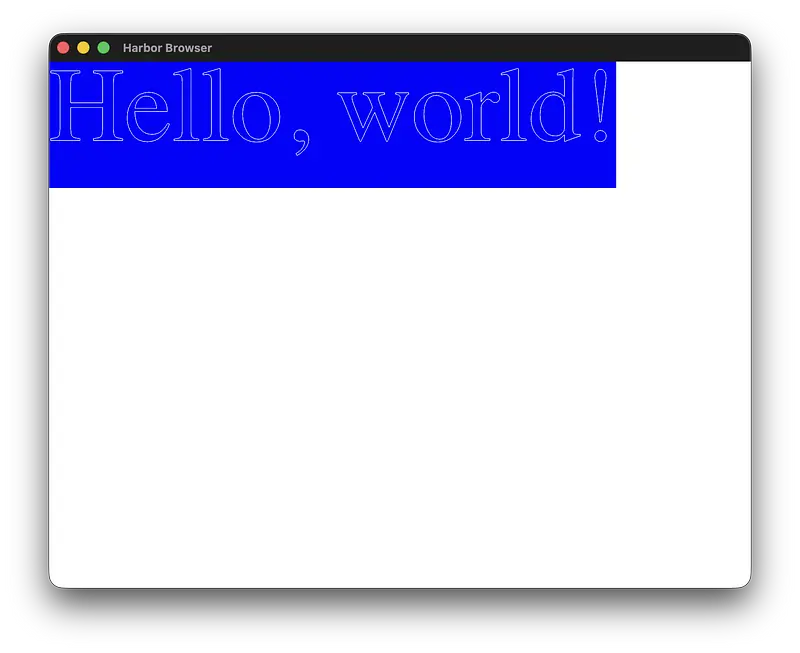

Changes

- d86e3c7: I added background colors to boxes, and refactored the render pipeline to include a separate renderer for fillings.

- 51f0a95: Added the width style property too, so that boxes can have custom widths. I wanna implement overflows soon too.

- e0c3506: lwk I forgot that `background-color` is a sub-property of the `background` shorthand, so I refactored the codebase to accommodate that.

- 1423835: A part of the CSS spec defines how `background` properties should be parsed, so I implemented that. Also, turns out I severely misread the spec when I was first refactoring the codebase in the last commit and got a very wrong idea of what a background layer is. So I had to do a lot of refactoring of that too.

- a6f6232: I added individual properties for all the `background` sub-properties (like `background-color`, `background-image`, etc.).

Next Steps

- Font? Maybe png parser then image rendering?

Note

I’m super locked in for my exams rn, so updates might be a bit slow. But I’ll try to push as much as I can.


OFFICIALLY AT 100 HOURS

(Holy shit)

Changes

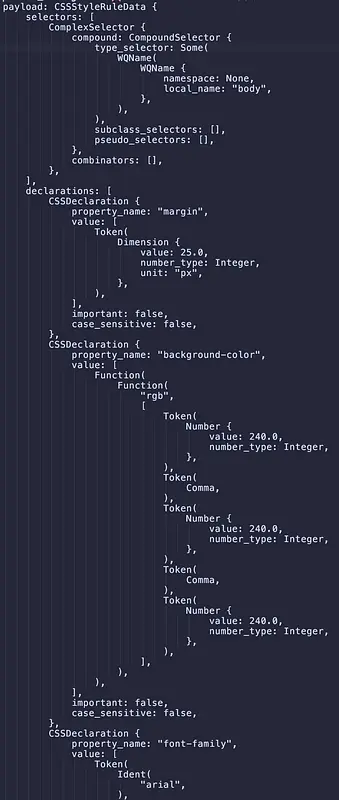

-

3018fe0: I made it so that when the HTML parser encounters a

</style>it automatically reads the contents of the style tag and tries to parse it as aCSSStyleSheet, and adds it to a vec of stylesheets - 329a395: I added specificity calculations for selectors. I’m not using this as of now because my first priority was linking CSS into the render process.

-

ed2b385: I added a

ComputedStylestruct which stores data about the actual styles that an element should use when rendered. I used a depth-first traversal to go through the DOM and try to compute styles based on selector matches for every element. - e4ca0bb: Linked element computed styles into the Layout Box engine, and hooked that into the actual rendering pipeline. Seeing the red text was crazy.
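A depth-first style pass also gives a natural place for inheritance: each element starts from its parent's inherited values and then applies its own declarations. The sketch below assumes hypothetical `Element` and `ComputedStyle` types, treats the declarations as already cascaded, and uses a tiny list of inherited properties.

```rust
use std::collections::HashMap;

/// Hypothetical DOM node with its already-cascaded declarations.
struct Element {
    tag: String,
    declared: HashMap<String, String>,
    children: Vec<Element>,
}

/// Hypothetical computed style: property name -> value.
type ComputedStyle = HashMap<String, String>;

/// Properties that inherit from the parent when not declared (a tiny subset).
const INHERITED: [&str; 3] = ["color", "font-size", "font-family"];

/// Depth-first (pre-order) traversal that records each element's computed style.
fn compute_styles(el: &Element, parent: &ComputedStyle, out: &mut Vec<(String, ComputedStyle)>) {
    // Start from the inherited subset of the parent's computed style.
    let mut style: ComputedStyle = INHERITED
        .iter()
        .filter_map(|p| parent.get(*p).map(|v| (p.to_string(), v.clone())))
        .collect();
    // The element's own declarations override anything inherited.
    for (prop, value) in &el.declared {
        style.insert(prop.clone(), value.clone());
    }
    out.push((el.tag.clone(), style.clone()));
    for child in &el.children {
        compute_styles(child, &style, out);
    }
}
```

Here a `body { color: red; margin: 8px }` passes `color` down to a child `p`, but not `margin`.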

Next Steps

- Change colors to take an alpha value and implement color functions?

- Hook up margins, paddings, borders from CSS to the layout engine

Note

Crazy progress + this devlog marks 100hr milestone!

(Finally something to show)

Changes

- de03232: I had a `CSSRule` struct which took a payload of a few types, but I realized that it'd probably be smart to take the payload as a generic so that impls on specific rule kinds can access the parent `CSSRule` object's properties. This makes it easier to implement things like serialization later on.

- c2fe3c6: Going through the spec I came across this line:

Let parsed rule be the result of parsing rule according to the appropriate CSS specifications

With no further explanation. After digging, I found that this meant I needed to implement parsing for individual rule kinds, so I started with the classic style rule, which meant writing a selector parser.

- b8c1008: I completed the selector parser, which was actually pretty enjoyable. Took a while though.

- 6fc8820: Was getting ready to go sleep when I realized that I already had a block parser implemented for style rules, so I went ahead and hooked that up to the CSS parser so that style rules can be fully parsed now. YAY

Next Steps

- MAYBE implement more rule kinds. Not sure if I want to do that yet.

- Cascading? Inheritance?


(What do I even upload as the attachment)

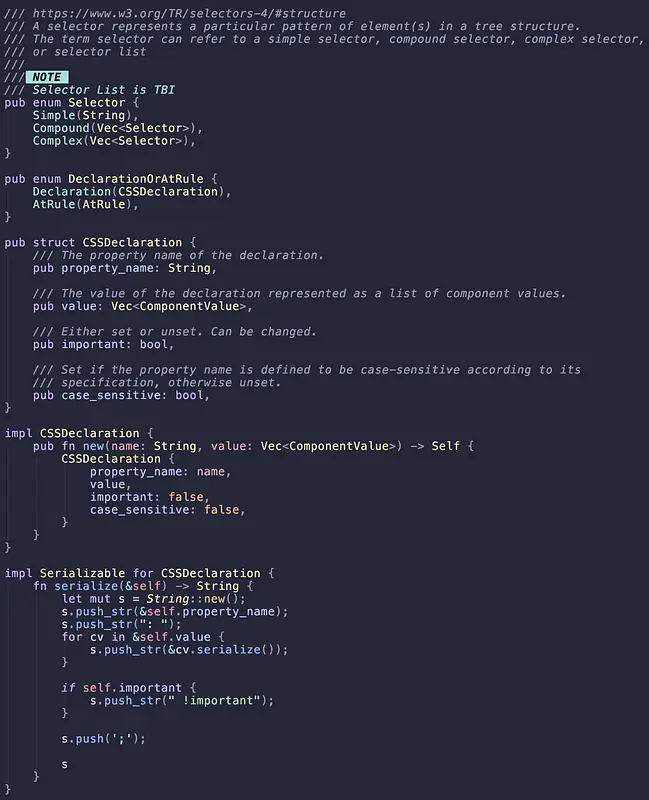

Changes

- 90b6c62: My codebase used to have a parser struct for parsing CSS, which I decided to refactor out into just functions since it didn’t really need to maintain any state. I thought about doing the same to the HTML parser, but it does maintain some state (current tokens) so I left it as is for now.

- 182e138: Boring commit. Was mostly just me reading through the CSS spec and trying to understand how to implement the various parsing algorithms.

- d002d3d: I started working on serializing values back to text because something in the CSS spec mentioned that certain properties need to be serialized in a specific way. I hate this so much.

Next Steps

- Finish serialization.

- Implement more CSS spec.

- Start implementing cascading and inheritance.

Note

This is probably one of the most boring updates I’ve ever written. CSS parsing is just not very exciting. It’s a whole lot of reading through poorly written specs with conflicting wording and trying to implement them. It’s so boring that I don’t really have anything to show for it.


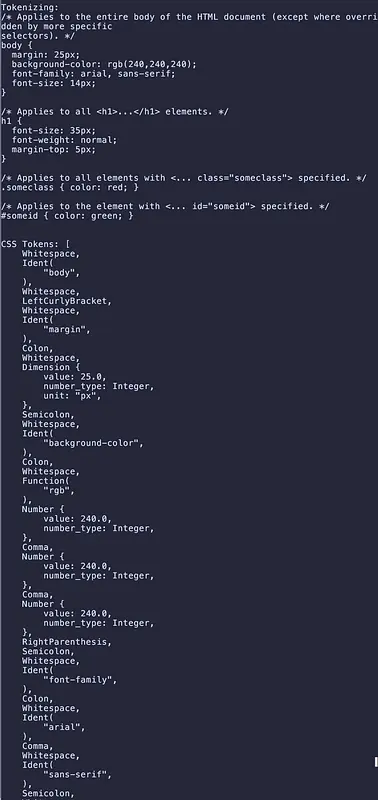

Changes

- 391bcdd: I started working on CSS, starting with a tokenizer. This should've been really simple, but the CSS spec is just really weirdly worded. Got stuck on the stupidest bug ever for like an hour: I was reading the current and next character instead of the next 2 characters.

Next Steps

- Finish the CSS parser.

- Write tests for the CSS tokenizer.


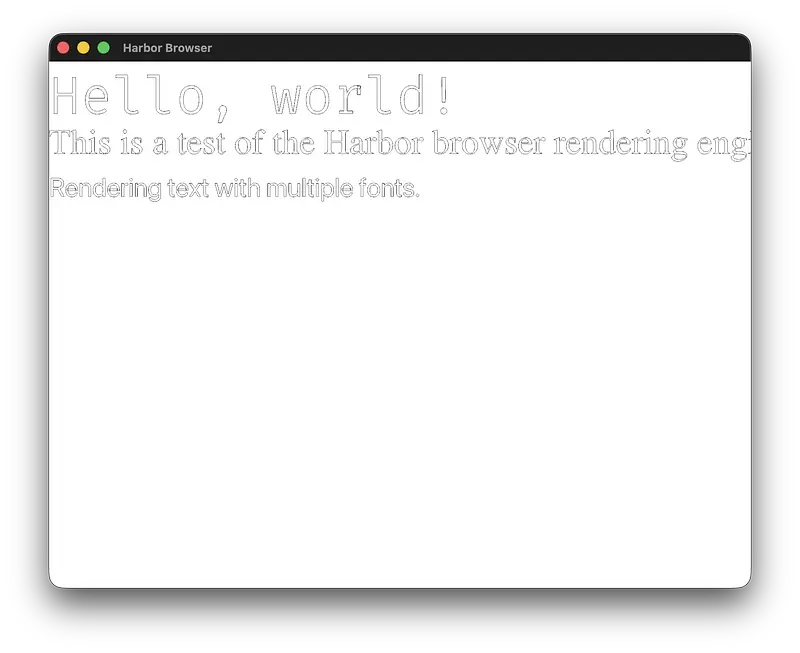

Changes

- 753e436: I realized as I was copying random HTML code from the CSS specifications that my code doesn't always work. So I added a couple of test cases in the `tests/` directory so I have a way to verify that my code is working as expected.

- c7f088a: I reworked my layout system to make use of the CSS spec more effectively. This was pretty much a complete rewrite of my layout engine, but it was super worth it when I ran the code with an example HTML file and saw that it rendered correctly!

Next Steps

- Make a CSS parser?

- Start adding defaults for various CSS properties

Note

The image has text magnified to 2.5x the normal size for better readability.


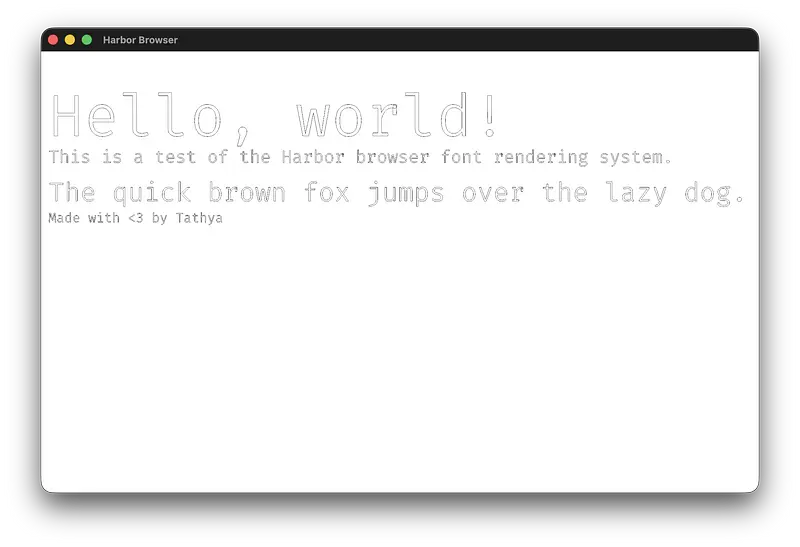

Changes

- 8f47563: I refactored the code base so that text renderers have their own vertex buffer objects which they write to. Should hopefully improve performance when rendering multiple text objects.

- ef4a97f: Did some more refactoring, but this time to improve the layout structure I have. I refactored text renderers a little more so they’re now font-specific rather than text-specific. Also the layout system allows for multiple available fonts and sizes via separate text renderers.

Next Steps

- The layout system is kind of like absolute positioning right now. I want to implement a more flexible layout system that allows for relative positioning and alignment.


(Where’d all the time go come from?)

Changes

- aa54f4f: Lots of quality-of-life improvements related to text rendering:

- Moved text rendering to a separate text rendering manager

- Added support for CMAP Subtable Format 12

- Spent way too much time trying to advance a line height properly

- Added anti-aliasing support for text rendering

- Added support for composite glyphs, which included implementing scaling and translation of glyph components

Next Steps

- Move the vertex buffer object to be a property of the text renderer, to make it easier to render multiple things in the future

- Try to add some filling to the shapes being drawn

Note

I feel like I didn’t spend THAT much time on this devlog, but looking back at it I did add a whole lot of features. I have no idea why I didn’t commit more often, but it might have something to do with the fact that work on this commit started at 1 AM.


(My favorite devlog so far)

Changes

- 7c7cefe: I added the HDMX table - just for funsies.

- 5eb379b: I did some basic work on the render pipeline so I could actually start rendering glyphs. I did a really messy merge right before this, so I can’t really explain the details of the changes.

- c227220: I fixed the parsing for the `glyf` table. There was barely anything done correctly when I first wrote it, but I had no way of telling until I tried rendering. This took WAY TOO LONG. I think the core of the issue was that x- and y-coordinates are stored as deltas, not absolute values. I refactored the codebase to hold contours rather than raw points, so that may have somehow solved part of the issue too.

- bf3d0c9: I realized I needed to use a `LineList` rather than a `LineStrip` to render the contours properly. A `LineStrip` joins every point to the next one, so the last point of one contour gets connected to the first point of the next contour, which is not what I wanted.

- efbef46: I completely forgot that I had to handle Bézier curves in the glyph rendering, so I added that functionality. It seems to be working fine now. I do need to find a way to dynamically calculate a decent precision for the curves.
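Both points above (curve precision and `LineList` vertex pairs) can be sketched together. TrueType outlines use quadratic Béziers. The segment-count heuristic below is an assumption, not Harbor's method: the control point's distance from the chord midpoint bounds how far the curve bulges, and `n = ceil(sqrt(dev / tolerance))` keeps the flattening error under `tolerance` (up to the 64-segment cap).

```rust
type Point = (f32, f32);

/// Evaluate a quadratic Bézier at t in [0, 1].
fn quad_point(p0: Point, p1: Point, p2: Point, t: f32) -> Point {
    let u = 1.0 - t;
    (
        u * u * p0.0 + 2.0 * u * t * p1.0 + t * t * p2.0,
        u * u * p0.1 + 2.0 * u * t * p1.1 + t * t * p2.1,
    )
}

/// Number of line segments for one curve, from its bulge relative to `tolerance`.
fn segments_for(p0: Point, p1: Point, p2: Point, tolerance: f32) -> usize {
    let mid = ((p0.0 + p2.0) / 2.0, (p0.1 + p2.1) / 2.0);
    let dev = ((p1.0 - mid.0).powi(2) + (p1.1 - mid.1).powi(2)).sqrt();
    ((dev / tolerance).sqrt().ceil() as usize).clamp(1, 64)
}

/// Flatten one curve into points, excluding the start point so consecutive
/// curves in a contour don't duplicate their shared endpoint.
fn flatten_quad(p0: Point, p1: Point, p2: Point, tolerance: f32) -> Vec<Point> {
    let n = segments_for(p0, p1, p2, tolerance);
    (1..=n).map(|i| quad_point(p0, p1, p2, i as f32 / n as f32)).collect()
}

/// LineList vertices for one closed contour: (a, b), (b, c), ..., (last, first).
/// Each contour closes on itself, so nothing joins separate contours.
fn contour_to_line_list(points: &[Point]) -> Vec<Point> {
    let mut out = Vec::with_capacity(points.len() * 2);
    for i in 0..points.len() {
        out.push(points[i]);
        out.push(points[(i + 1) % points.len()]);
    }
    out
}
```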

Next Steps

- Fill in the glyphs so they’re not just outlines.

- Implement rendering of composite glyphs.

- Implement rendering multiple glyphs (i.e. words, sentences).

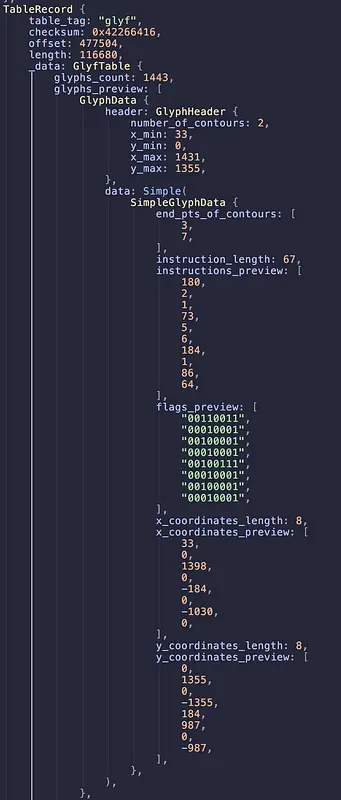

Changes

- db4c07f, cca929f: I implemented the `post` and `loca` tables. I uniquely remember enjoying writing the `post` table's code, I don't know why. `loca` was pretty boring.

- 4016b3e, 399c66a: I added the most important table, `glyf`. This table was a pain to implement. I had to individually implement both simple and composite glyphs. Composites were especially tricky because there wasn't a well-defined structure for them in the docs.

- 5681a6a, 80b8865: I implemented 5 more tables: `cvt`, `fpgm`, `prep`, `gasp` and `meta`. I'm almost done adding tables; there's only a couple left (4 more that I plan on implementing).
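The delta encoding that tripped up the `glyf` parsing (see c227220) works like this for a simple glyph: each point's x is a delta from the previous x, stored as a 1-byte magnitude, as "same as previous", or as a signed 16-bit value, depending on two flag bits. A sketch for x (y is identical with flag bits `0x04` and `0x20`), assuming the flags have already been expanded with `REPEAT_FLAG` handled; the function name is hypothetical.

```rust
const X_SHORT_VECTOR: u8 = 0x02;
const X_IS_SAME_OR_POSITIVE_X_SHORT_VECTOR: u8 = 0x10;

/// Decode a simple glyph's x-coordinate array into absolute coordinates.
/// Returns the coordinates and the number of bytes consumed.
fn decode_x_coords(flags: &[u8], data: &[u8]) -> Option<(Vec<i32>, usize)> {
    let mut coords = Vec::with_capacity(flags.len());
    let mut pos = 0;
    let mut x: i32 = 0;
    for &f in flags {
        let delta = if (f & X_SHORT_VECTOR) != 0 {
            // 1-byte magnitude; the other bit gives the sign.
            let magnitude = *data.get(pos)? as i32;
            pos += 1;
            if (f & X_IS_SAME_OR_POSITIVE_X_SHORT_VECTOR) != 0 { magnitude } else { -magnitude }
        } else if (f & X_IS_SAME_OR_POSITIVE_X_SHORT_VECTOR) != 0 {
            0 // same x as the previous point, no bytes stored
        } else {
            // Big-endian signed 16-bit delta.
            let bytes = data.get(pos..pos + 2)?;
            pos += 2;
            i16::from_be_bytes([bytes[0], bytes[1]]) as i32
        };
        x += delta;
        coords.push(x);
    }
    Some((coords, pos))
}
```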

Next Steps

- Implement the remaining tables: `hdmx`, `kern`, `vhea`, `vmtx`.

- Consider adding support for AAT (Apple Advanced Typography) tables like `bsln`, `feat`, etc. (probably won't).

- Start with the rendering engine; this will be a big task.

(Micro devlog)

Changes

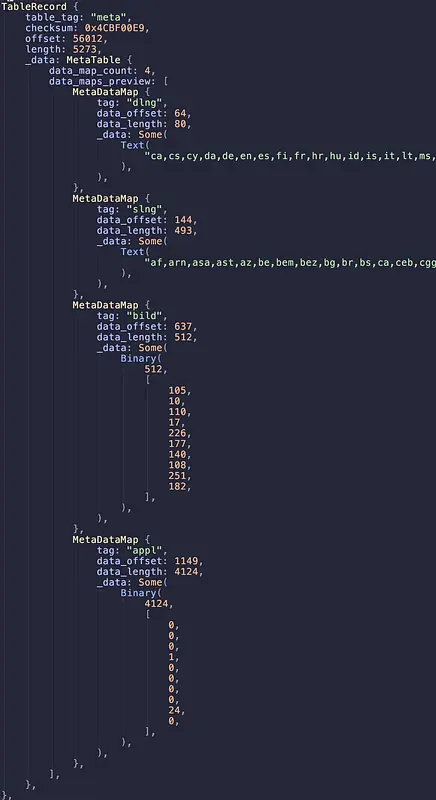

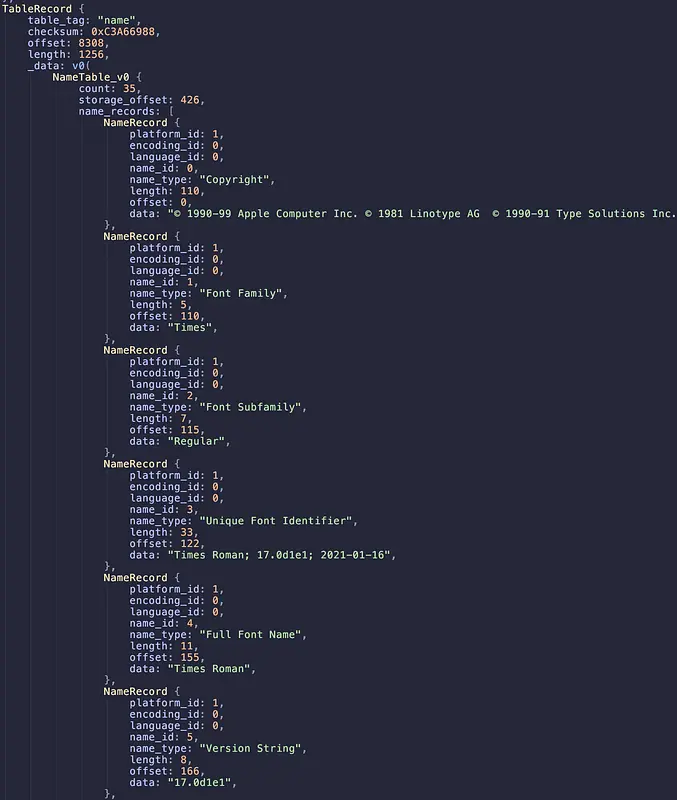

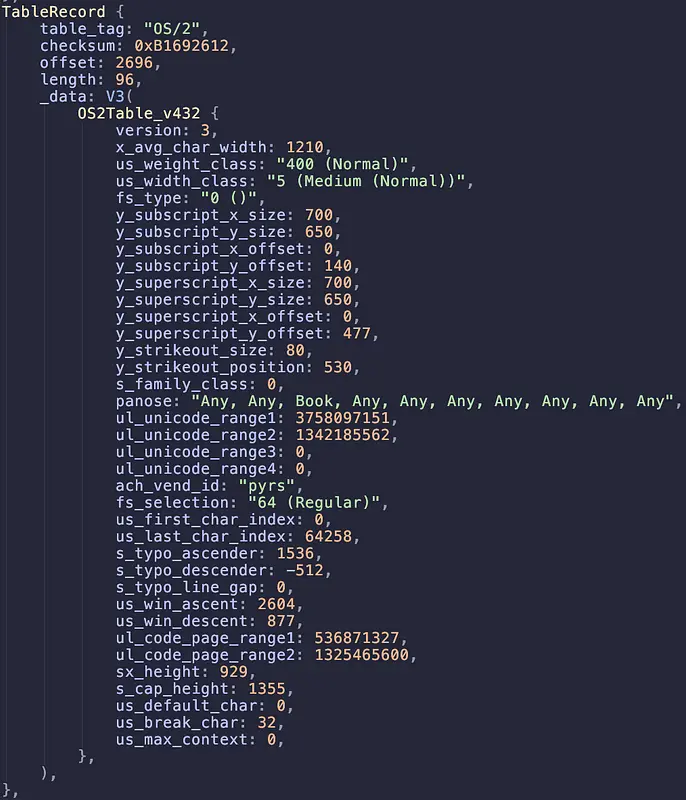

- b753d4c: I added parsing for the `OS/2` and `name` tables, though the `name` table parsing only supports version 0 currently.

Next Steps

- Finish `post` table parsing.

Note

I really only made this devlog because it pushes me over the 60h mark for this project.

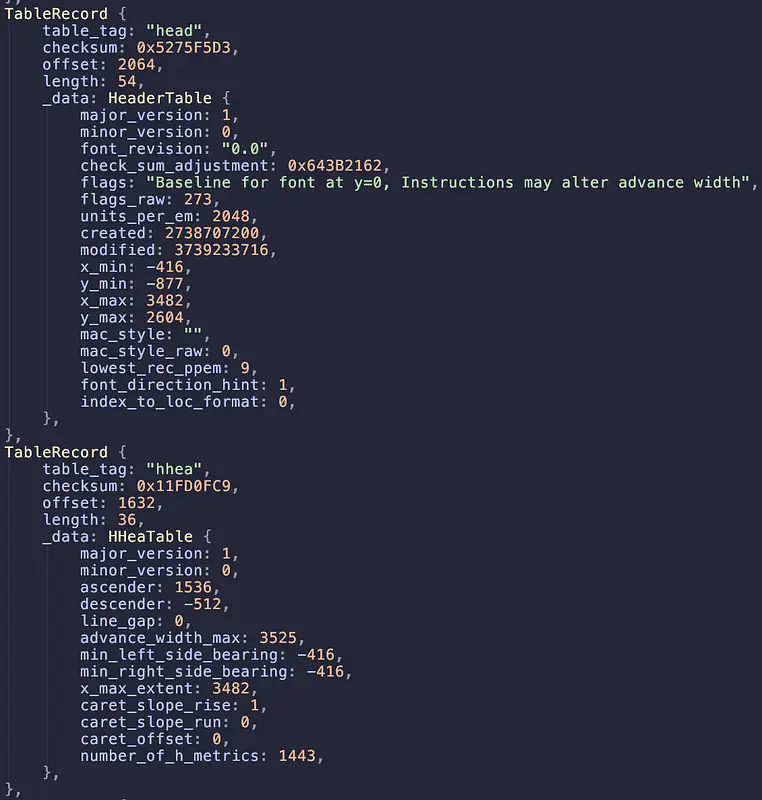

Changes

- b3661e7: I completed CMAP format 6 parsing - it was actually pretty easy. I’ve learned that font parsing is WAY easier than HTML parsing because they have such a strict format. I can literally just read bytes in order and know exactly what they mean.

-

5d11d0f: Added the font header table

headparsing. -

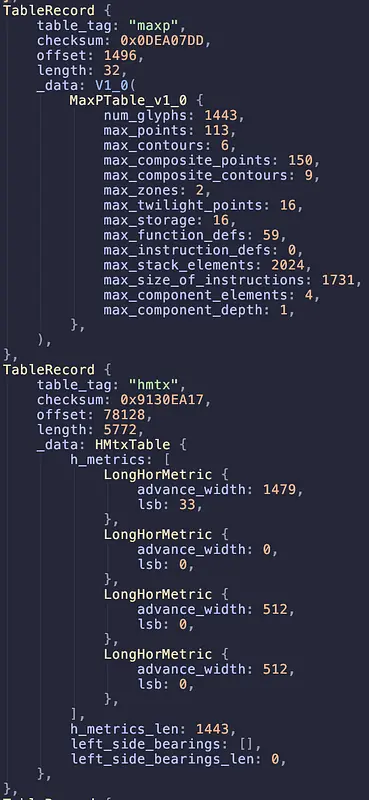

0f25790: I added parsing for

hhea,maxpandhmtxtables. This was actually a lot harder than I expected because thehmtxtable’s length depends on variables defined in themaxpandhheatables - but it’s not guaranteed that those tables will come beforehmtxin the file. So I had to set up a deferred parsing system which allowed me to defer parsing of certain tables until their dependencies were parsed.
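The dependency is concrete: `hmtx` holds `numberOfHMetrics` (from `hhea`) full 4-byte records, then a 2-byte left side bearing for each of the remaining glyphs (`numGlyphs` from `maxp`). Below is a sketch of that size calculation plus one way to defer tables until their dependencies are done, whatever order the table directory lists them in. `parse_order` and its dependency map are hypothetical, not Harbor's API.

```rust
use std::collections::HashMap;

/// Byte length of `hmtx`: full (advanceWidth: u16, lsb: i16) records, then one
/// extra lsb: i16 for every glyph past `number_of_h_metrics`.
fn hmtx_len(number_of_h_metrics: u16, num_glyphs: u16) -> usize {
    let full = number_of_h_metrics as usize;
    let rest = (num_glyphs as usize).saturating_sub(full);
    full * 4 + rest * 2
}

/// Order tables so each comes after its dependencies. Returns `None` if a
/// dependency is missing from the directory or the dependencies form a cycle.
fn parse_order<'a>(directory: &[&'a str], deps: &HashMap<&str, Vec<&str>>) -> Option<Vec<&'a str>> {
    let mut done: Vec<&'a str> = Vec::new();
    let mut pending: Vec<&'a str> = directory.to_vec();
    while !pending.is_empty() {
        let before = pending.len();
        // Each pass "parses" every table whose dependencies are already done.
        pending.retain(|tag| {
            let ready = deps
                .get(tag)
                .map_or(true, |d| d.iter().all(|dep| done.iter().any(|t| t == dep)));
            if ready {
                done.push(*tag);
            }
            !ready
        });
        if pending.len() == before {
            return None; // no progress: missing dependency or cycle
        }
    }
    Some(done)
}
```

An alternative worth considering is that the table directory already gives every table's offset, so dependencies like `hhea` and `maxp` can also just be looked up and parsed on demand.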

Next Steps

- Complete the remaining required tables: `name`, `OS/2`, `post`.

- Start parsing the tables required for glyph outlines: `loca` and `glyf`.


Changes

-

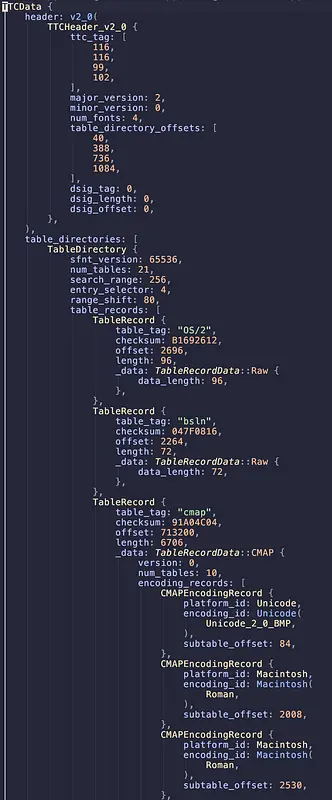

375abc7: I decided that I’m going to write my own font parsing library. I saw Sebastian Lague’s video on font rendering and it inspired me to try it out myself. I created some basic structs and functions that will help me parse font files and extract glyph data. I’m using MacOS’s

/System/Library/Fonts/Times.ttcas my test font file for now. - ad91bfd: I implemented basic parsing, so I can now parse the TTC data to get a header, and table directories. Each table directory is furhter parsed to get table records. I’m currently working on parsing the ‘cmap’ table to get character to glyph mappings. I’ve completed cmap subtable format 0 and 4 so far.
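Format 4 is the one most fonts rely on for the Basic Multilingual Plane, and its lookup has one famously confusing step: `idRangeOffset` is a byte offset measured from the `idRangeOffset` entry itself. A sketch of the lookup over already-parsed arrays (field names follow the OpenType spec; the struct is hypothetical):

```rust
/// Parsed cmap format 4 subtable arrays.
struct CmapFormat4 {
    end_codes: Vec<u16>,
    start_codes: Vec<u16>,
    id_deltas: Vec<i16>,
    id_range_offsets: Vec<u16>,
    glyph_id_array: Vec<u16>,
}

impl CmapFormat4 {
    /// Map a BMP code point to a glyph index (0 means missing / .notdef).
    fn glyph_index(&self, c: u16) -> u16 {
        let seg_count = self.end_codes.len();
        // Segments are sorted by end code; use the first one whose end >= c.
        let i = match self.end_codes.iter().position(|&end| end >= c) {
            Some(i) => i,
            None => return 0,
        };
        if self.start_codes[i] > c {
            return 0;
        }
        let delta = self.id_deltas[i] as u16; // idDelta arithmetic is modulo 65536
        let range_offset = self.id_range_offsets[i];
        if range_offset == 0 {
            return c.wrapping_add(delta);
        }
        // glyphIdArray starts right after idRangeOffset[], i.e. seg_count - i
        // u16 entries after &idRangeOffset[i].
        let index = range_offset as usize / 2 + (c - self.start_codes[i]) as usize + i;
        match index.checked_sub(seg_count).and_then(|j| self.glyph_id_array.get(j)) {
            Some(&0) | None => 0,
            Some(&g) => g.wrapping_add(delta),
        }
    }
}
```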

Next Steps

- Complete parsing of the cmap table by implementing formats 2, 6, 8, 10, 12, 13 and 14. I'm considering only doing formats 4 and 12 for now since they cover most use cases.

- Start work on the next table to parse, likely the ‘glyf’ table to get glyph data.
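Format 4 covers the Basic Multilingual Plane and is the cmap subtable most fonts ship, so here's a sketch of how a lookup could work once its arrays are parsed. It assumes a hypothetical `CmapFormat4` struct holding the segment arrays exactly as they appear in the file. The trickiest part is `idRangeOffset`: it is a byte offset measured from its own position in the file, pointing into the `glyphIdArray` that directly follows it.

```rust
/// Segment arrays from a cmap format 4 subtable (segCount entries each;
/// `glyph_id_array` is whatever follows `id_range_offset` in the file).
pub struct CmapFormat4 {
    pub end_code: Vec<u16>,
    pub start_code: Vec<u16>,
    pub id_delta: Vec<i16>,
    pub id_range_offset: Vec<u16>,
    pub glyph_id_array: Vec<u16>,
}

impl CmapFormat4 {
    /// Maps a BMP character code to a glyph ID (0 = .notdef / missing).
    pub fn glyph_id(&self, c: u16) -> u16 {
        let seg_count = self.end_code.len();
        // Segments are sorted by endCode: find the first with endCode >= c
        let i = match self.end_code.iter().position(|&end| end >= c) {
            Some(i) => i,
            None => return 0,
        };
        if self.start_code[i] > c {
            return 0; // c falls in a gap between segments
        }
        let delta = self.id_delta[i] as u16;
        if self.id_range_offset[i] == 0 {
            return c.wrapping_add(delta); // arithmetic is modulo 65536
        }
        // Position in u16 units counted from the start of idRangeOffset;
        // subtracting seg_count turns it into an index into glyphIdArray
        let index = i + self.id_range_offset[i] as usize / 2 + (c - self.start_code[i]) as usize;
        match index.checked_sub(seg_count).and_then(|k| self.glyph_id_array.get(k)) {
            Some(&0) | None => 0,
            Some(&g) => g.wrapping_add(delta),
        }
    }
}
```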


(Small, but extremely significant change)

Changes

- 1bc896d: I implemented the `_adoption_agency` function, which means `a` tags (along with all the other formatting tags) are now being properly placed in the DOM tree. Honestly I'm super sceptical about this implementation, but it seems to be working fine for now. I'm too scared to test it.

Next Steps

- Possibly a serializer to turn the DOM back into HTML.

- Actually start on rendering the DOM?


Comments

If you can’t read the last lines, here’s what they say:

“Document Tree:

If printed, the DOM would be 254647 characters long.

Extra dev note: I manually went through the DOM and can confirm it looks correct.”

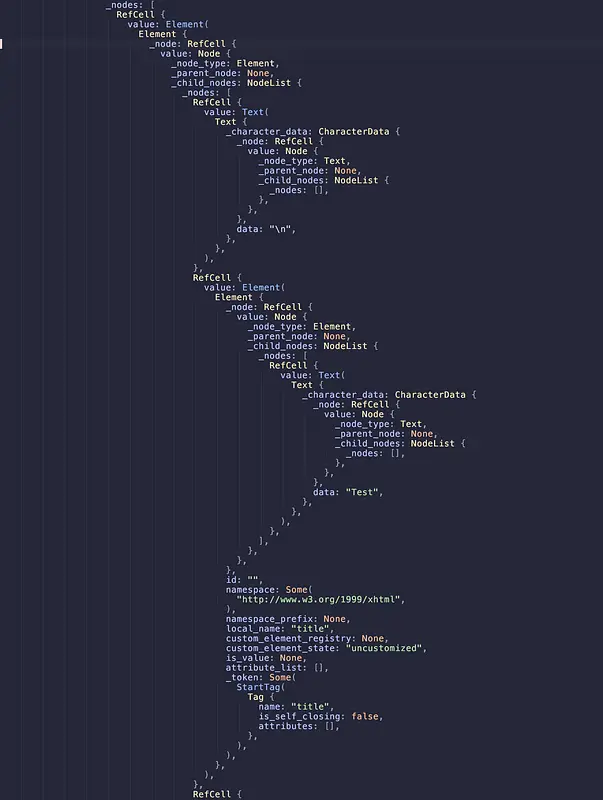

(This devlog is mostly refactoring)

Changes

- 839d015: I fixed a small bug in the code where certain elements were lacking parents and node documents.

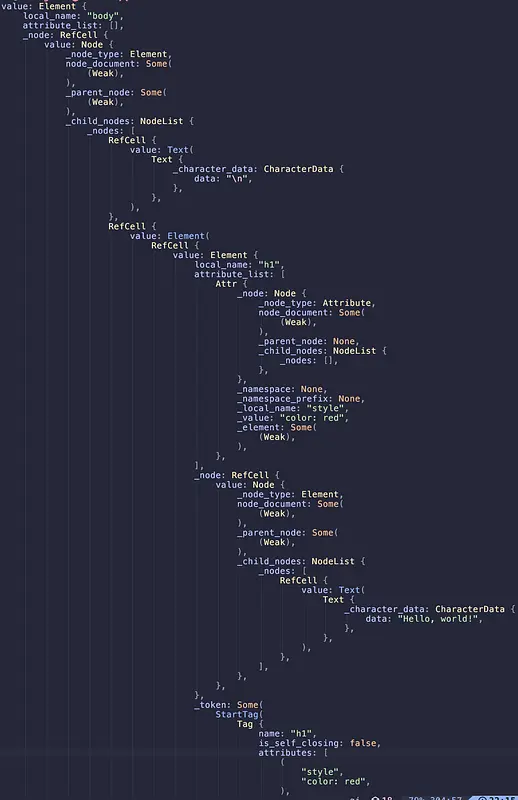

- 00861d6: Refactor of all time 2, electric boogaloo: I refactored Attrs to hold Rc<RefCell<Element>> instead of Element directly. This allows for better management of element references and avoids ownership issues. It was also a massive pain in the ass to refactor.

- 871232f: The tree constructor can now properly construct the tree of the dummy HTML document I’ve been feeding it. It was very rewarding to see the child nodes populate correctly.
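For readers less familiar with the Rust side of this, the usual pattern for a DOM-like tree is to own children through `Rc<RefCell<...>>` and point back to the parent with a `Weak`. That way parent and child don't keep each other alive forever. The sketch below is a generic illustration with a hypothetical `Node` type, not Harbor's actual `Element`/`Attrs` structures.

```rust
use std::cell::RefCell;
use std::rc::{Rc, Weak};

pub type NodeRef = Rc<RefCell<Node>>;

#[derive(Debug)]
pub struct Node {
    pub name: String,
    // Weak so the parent <-> child links don't form an Rc cycle that never frees
    pub parent: Option<Weak<RefCell<Node>>>,
    pub children: Vec<NodeRef>,
}

pub fn new_node(name: &str) -> NodeRef {
    Rc::new(RefCell::new(Node { name: name.to_string(), parent: None, children: Vec::new() }))
}

/// Appends `child` to `parent` and sets the child's parent link in the same step.
pub fn append_child(parent: &NodeRef, child: &NodeRef) {
    child.borrow_mut().parent = Some(Rc::downgrade(parent));
    parent.borrow_mut().children.push(Rc::clone(child));
}

/// Returns the parent's name, or None if there is no parent (or it was dropped).
pub fn parent_name(node: &NodeRef) -> Option<String> {
    let parent = node.borrow().parent.as_ref()?.upgrade()?;
    let name = parent.borrow().name.clone();
    Some(name)
}
```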

Next Steps

- I hate the In Body state but I have to finish it anyway

Note

Every commit in this devlog had at least some work on the tree constructor even if not explicitly mentioned in the change notes.


Changes

- e1a25d6: I had left out the list of active formatting elements, so I finally added it here. It was a lot more annoying than I thought it would be.

- afb2530: I refactored most of my codebase to make use of Rc<RefCell<>> instead of Box<> and other shitty workarounds. The code is a lot… fatter? now. But it is also a lot cleaner and easier to work with. I also got started on the 7th state and it's going well. I noticed a bug from the refactor, but I couldn't be bothered to fix it yet.
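The HTML spec's list of active formatting elements is essentially a stack with two twists. First, markers scope it (for example when entering a table cell). Second, the "Noah's Ark" clause keeps at most three identical formatting elements after the last marker.

Here is a simplified sketch, with elements reduced to a tag name plus an attribute list. These are hypothetical types, not Harbor's. Attribute comparison here is order-sensitive, whereas the spec compares attributes regardless of order.

```rust
/// Either a scope marker or a (simplified) formatting element.
#[derive(Debug, Clone, PartialEq)]
pub enum Entry {
    Marker,
    Element { tag: String, attrs: Vec<(String, String)> },
}

#[derive(Debug, Default)]
pub struct ActiveFormattingElements {
    pub entries: Vec<Entry>,
}

impl ActiveFormattingElements {
    /// Pushes an element, applying the Noah's Ark clause: if three identical
    /// elements already sit after the last marker, the earliest one is removed.
    pub fn push(&mut self, element: Entry) {
        let after_marker = self
            .entries
            .iter()
            .rposition(|e| *e == Entry::Marker)
            .map_or(0, |m| m + 1);
        let matches: Vec<usize> = (after_marker..self.entries.len())
            .filter(|&i| self.entries[i] == element)
            .collect();
        if matches.len() >= 3 {
            self.entries.remove(matches[0]);
        }
        self.entries.push(element);
    }

    pub fn push_marker(&mut self) {
        self.entries.push(Entry::Marker);
    }

    /// Pops entries until the last marker has been removed (or the list is empty).
    pub fn clear_to_last_marker(&mut self) {
        while let Some(entry) = self.entries.pop() {
            if entry == Entry::Marker {
                break;
            }
        }
    }
}
```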

Next Steps

- Complete the 7th state (the “in body” state).

- Trace the bug that I noticed after the refactor and fix it.

Note

The attachment is ~90 lines of an almost 300 line long tree structure that I use to represent the DOM!


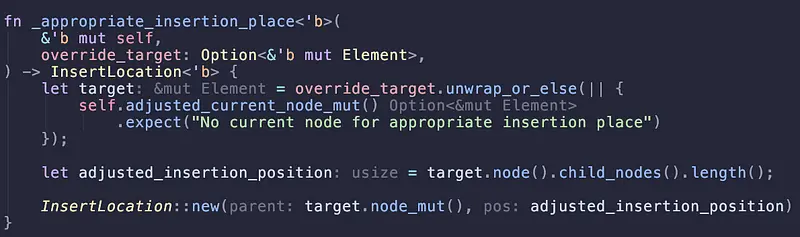

(I keep forgetting to devlog)

Changes

- f0f4b470: I decided that my old implementation of some of the structs in the HTML specification was not very good, so I rewrote them. This should make future changes easier. Along with this, I started working on the HTML parser again - this time on the tree construction stage.

- 951e074e: I completed the first 2 insertion modes in the tree construction stage of the HTML parser.

- db893cd: Not very eventful, I just added a few more insertion modes to the HTML parser’s tree construction stage.

- 19f26fe: I added 2 more insertion modes (6 total now), and also properly implemented a function that I've been meaning to implement for a while now (`_appropriate_insertion_place`). Refactoring my code to support this function was very satisfying.

Next Steps

- Continue implementing more insertion modes for the HTML parser’s tree construction stage.


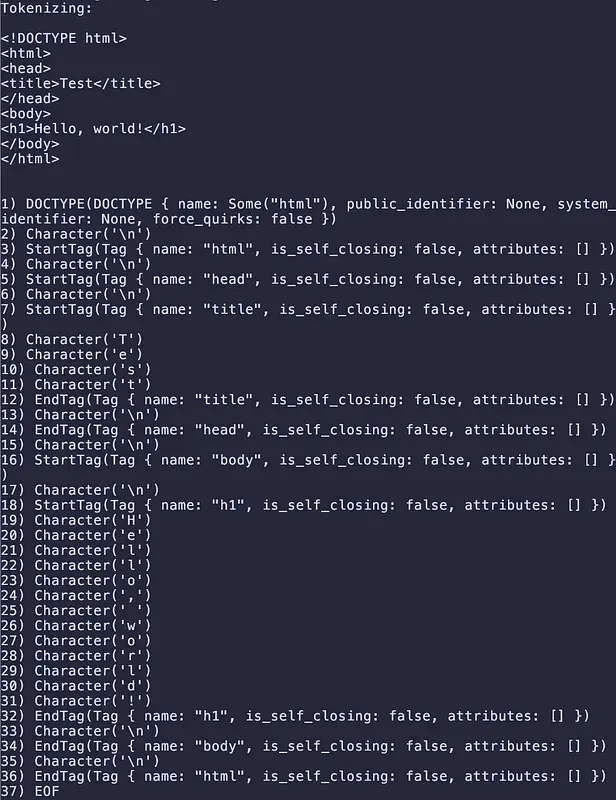

(This was a LONG coding session)

Changes

The core theme of this devlog is the implementation of the HTML Tokenization spec - comprising an absolutely enormous state machine with 80 different possible states, at least 40 different kinds of errors, and extremely poor documentation on specifics. Commit-wise breakdowns are pretty uneventful but out of habit I’ll do it anyway - but with no details.

- 6d3c54d: 21/80 states

- fa9cb38: 33/80 states

- 3882f6c: 55/80 states

- eb2aeb5: 80/80 states + test run on tiny dummy HTML
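To give a feel for what those 80 states look like in code, here's a toy tokenizer covering only 4 of them: data, tag open, end tag open, and tag name. It's a hypothetical sketch rather than Harbor's tokenizer. It has no attributes, comments, character references, or error reporting. Its end-tag-open fallback simply skips the character where the spec would start a bogus comment. The `i -= 1` is how it "reconsumes" a character in another state, which the spec does constantly.

```rust
#[derive(Debug, PartialEq)]
pub enum Token {
    StartTag(String),
    EndTag(String),
    Character(char),
    Eof,
}

#[derive(Clone, Copy, PartialEq)]
enum State {
    Data,
    TagOpen,
    EndTagOpen,
    TagName,
}

pub fn tokenize(input: &str) -> Vec<Token> {
    let chars: Vec<char> = input.chars().collect();
    let mut tokens = Vec::new();
    let mut state = State::Data;
    let mut name = String::new();
    let mut is_end = false;
    let mut i = 0;
    while i < chars.len() {
        let c = chars[i];
        i += 1; // consume c; reconsuming means stepping back one
        match state {
            State::Data if c == '<' => state = State::TagOpen,
            State::Data => tokens.push(Token::Character(c)),
            State::TagOpen if c == '/' => state = State::EndTagOpen,
            State::TagOpen | State::EndTagOpen if c.is_ascii_alphabetic() => {
                is_end = state == State::EndTagOpen;
                name = c.to_ascii_lowercase().to_string();
                state = State::TagName;
            }
            State::TagOpen => {
                // Not a tag after all: emit '<' and reconsume c in Data
                tokens.push(Token::Character('<'));
                i -= 1;
                state = State::Data;
            }
            // Simplification: the spec would start a bogus comment here
            State::EndTagOpen => state = State::Data,
            State::TagName if c == '>' => {
                let tag = std::mem::take(&mut name);
                tokens.push(if is_end { Token::EndTag(tag) } else { Token::StartTag(tag) });
                state = State::Data;
            }
            State::TagName => name.push(c.to_ascii_lowercase()),
        }
    }
    // Input that ends mid-tag emits the consumed characters as text
    match state {
        State::TagOpen => tokens.push(Token::Character('<')),
        State::EndTagOpen => {
            tokens.push(Token::Character('<'));
            tokens.push(Token::Character('/'));
        }
        _ => {}
    }
    tokens.push(Token::Eof);
    tokens
}
```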

Next Steps

- I’ve got my tokenizer completed, but I still need to construct a Node tree using the emitted tokens. That’s what I’ll be working on in the next devlog, along with implementing more parts of the HTML spec as is required.

Note

This was probably the most boring coding session I've ever gone through. The code, like the specification, is repetitive, and can probably be modularized to oblivion. But if you think I'm voluntarily touching those 2600 lines of code EVER again, you're crazy.

(Edit: Changed a commit link that was broken after commit reword)


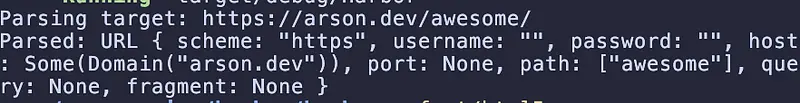

Changes

- f7eec2d: I added some serialization functions to all the new URL-related structures, refactored the URLPath structure to be more representative of the specification, and integrated the new URL infrastructure into my existing HTTP client code.

Next Steps

- Implement the HTML parser (for real this time)

Note

The next devlog is probably gonna be a BIG one, like 5+ hours minimum (most likely) - given the fact that the HTML parser specification is just so LONG. I’ve been reading through it and the main state machine literally has 80 states and that’s not even the entire specification because there’s probably a billion utility functions and side quests I’ll have to go on to implement it right.


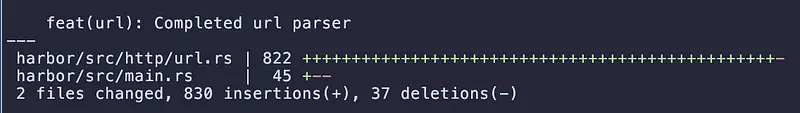

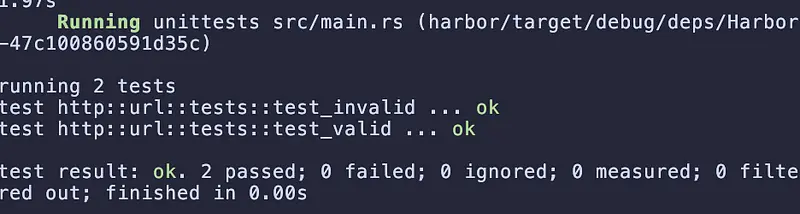

(This was a LONG coding session)

Changes

- 5fb1292: As promised, I worked on IPv4, opaque and domain parsing. That concludes the host parsing algorithm; it was a LOT lengthier than expected.

- 7728d02: I completed the ENTIRE URL parsing specification's implementation. This took WAY TOO LONG. Seriously, there's no way parsing a URL is THAT deep. For reference, the entire implementation spans almost 1500 lines, consisting of 4 different individual parsers (IPv6, IPv4, Opaque, Host), 5 different encoding sets and 27 different custom errors, which all come together with a 600-line state machine to parse a URL.
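The IPv4 parser is a good example of why the URL spec runs so long. It accepts hex and octal parts and lets the last part fill the remaining bytes, so `0x7f.1` is 127.0.0.1.

Below is a sketch following the URL Standard's IPv4 parser and IPv4 number parser. The function names are hypothetical, validation errors are omitted, and the input is assumed to be ASCII.

```rust
/// The URL Standard's IPv4 number parser: `0x` prefix = hex,
/// leading `0` = octal, otherwise decimal.
fn parse_ipv4_number(part: &str) -> Option<u64> {
    if part.is_empty() {
        return None;
    }
    let (digits, radix) = if part.len() >= 2 && (part.starts_with("0x") || part.starts_with("0X")) {
        (&part[2..], 16)
    } else if part.len() >= 2 && part.starts_with('0') {
        (&part[1..], 8)
    } else {
        (part, 10)
    };
    if digits.is_empty() {
        return Some(0); // e.g. a bare "0x" counts as zero
    }
    // Explicit check, since from_str_radix would also accept a leading '+'
    if !digits.chars().all(|c| c.is_digit(radix)) {
        return None;
    }
    // Overflowing u64 is treated as failure; such values fail the range checks anyway
    u64::from_str_radix(digits, radix).ok()
}

/// Returns the address as a u32, or None on failure.
pub fn parse_ipv4(input: &str) -> Option<u32> {
    let mut parts: Vec<&str> = input.split('.').collect();
    // One trailing dot is tolerated (only a validation error in the spec)
    if parts.last() == Some(&"") && parts.len() > 1 {
        parts.pop();
    }
    if parts.len() > 4 {
        return None;
    }
    let numbers = parts.iter().map(|p| parse_ipv4_number(p)).collect::<Option<Vec<u64>>>()?;
    let (last, init) = numbers.split_last()?;
    if init.iter().any(|&n| n > 255) {
        return None;
    }
    // The last part fills all remaining bytes, e.g. 3 bytes when there are 2 parts
    if *last >= 256u64.pow(5 - numbers.len() as u32) {
        return None;
    }
    let mut ipv4 = *last;
    for (counter, &n) in init.iter().enumerate() {
        ipv4 += n * 256u64.pow(3 - counter as u32);
    }
    Some(ipv4 as u32)
}
```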

Next steps

- sleep


Changes

- 47f712d: Little bit of work on the HTML spec.

- a849fb1: I was trying to implement the URL specification but turns out I need to implement a host spec and for that I need to implement IPv4, IPv6 and a couple other things. So I’ve implemented IPv6, but this devlog has been mostly just busy work that doesn’t have much immediate benefit to the project.

Next Steps

- IPv4, opaque and domain parsing (basically, finish up host parsing as a whole)

- URL parsing (I need to finish host parsing for this)


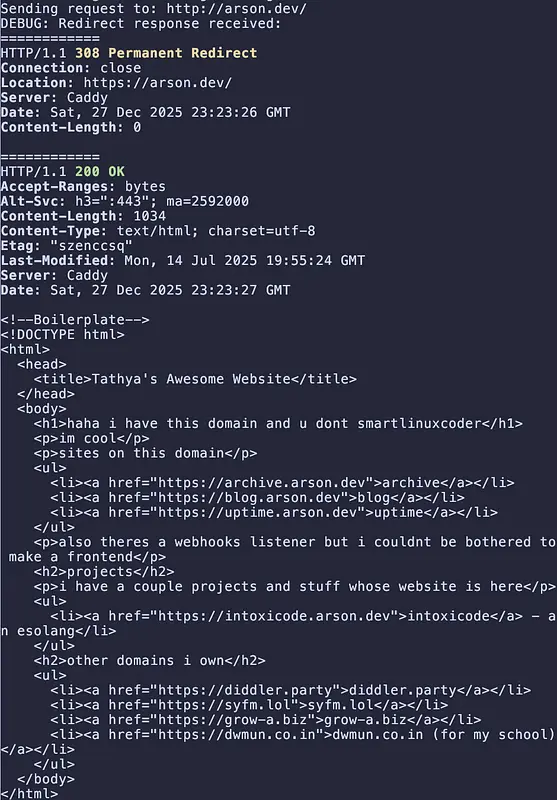

Changes

- f351b9c: I moved from calling DNS resolution functions independently to having a DNS resolver which caches resolved addresses and invalidates each cache entry once its TTL has elapsed.

- d443140: Added automatic redirect following, so responses with status code 3XX automatically trigger a redirect check

- abea778: Started work on the HTML stuff by adding some structures I saw in the official HTML5 specification.
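A TTL-based cache like the one described for f351b9c can be sketched as a map from hostname to (addresses, expiry time). In this hypothetical version, the actual DNS query is a closure passed in, since the sketch is only about the caching and invalidation logic, not the resolver itself.

```rust
use std::collections::HashMap;
use std::net::IpAddr;
use std::time::{Duration, Instant};

/// Caching resolver sketch; `lookup` stands in for whatever performs the
/// real DNS query and returns the addresses plus the record's TTL.
pub struct DnsCache<F: FnMut(&str) -> Option<(Vec<IpAddr>, Duration)>> {
    lookup: F,
    entries: HashMap<String, (Vec<IpAddr>, Instant)>, // addresses + expiry time
}

impl<F: FnMut(&str) -> Option<(Vec<IpAddr>, Duration)>> DnsCache<F> {
    pub fn new(lookup: F) -> Self {
        Self { lookup, entries: HashMap::new() }
    }

    pub fn resolve(&mut self, host: &str) -> Option<Vec<IpAddr>> {
        let now = Instant::now();
        if let Some((addrs, expires)) = self.entries.get(host) {
            if now < *expires {
                return Some(addrs.clone()); // cache hit that is still fresh
            }
        }
        // Miss or expired: query again and overwrite any stale entry
        let (addrs, ttl) = (self.lookup)(host)?;
        self.entries.insert(host.to_string(), (addrs.clone(), now + ttl));
        Some(addrs)
    }
}
```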

Next steps

- Ignore most of the remaining structures/traits (and the stuff I marked as TODO), and go straight into the HTML parser

Notes

Maybe trying to start with expressing the entire HTML spec in code wasn’t the best idea. I don’t like leaving things mid-way but I don’t really see another way out here, at least for now.


Changes

- bc11bca, 076345e: I added basic DNS resolution so you don’t have to type in IP addresses when sending requests, and can just write a domain/hostname

- 17ad97c: I abstracted streams away into a trait, allowing me to implement a new kind of stream which supports TLS, which means I can now send requests and receive responses over HTTPS!
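The trait trick from 17ad97c can look roughly like this: a `Stream` trait with `Read + Write` as supertraits plus a blanket impl. With that in place, `TcpStream`, a TLS-wrapped stream, or an in-memory mock all work with the same request code. `Stream` and `send_raw` are illustrative names, not necessarily what Harbor uses. `send_raw` assumes the server closes the connection to mark the end of the response.

```rust
use std::io::{self, Read, Write};

/// Anything bytes can be written to and read back from.
pub trait Stream: Read + Write {}

// Blanket impl: every Read + Write type (TcpStream, a TLS stream wrapper,
// an in-memory mock for tests) automatically counts as a Stream
impl<T: Read + Write> Stream for T {}

/// Writes a raw request, then reads the whole response until the peer closes.
pub fn send_raw(stream: &mut dyn Stream, request: &[u8]) -> io::Result<Vec<u8>> {
    stream.write_all(request)?;
    stream.flush()?;
    let mut response = Vec::new();
    stream.read_to_end(&mut response)?;
    Ok(response)
}
```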

Next steps

- DNS caching and cache invalidation

- Maybe some work on the rendering?


Changes

- 9eae3a1: I added response decoding using a state machine system that populates a response object as it's fed data. I designed the system to be robust enough that even if it receives data in chunks of 8 bytes, it can still properly construct a response object.

- c9c0f02: Added a little helper function to get a nice display of the response
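Here's a minimal sketch of that kind of incremental decoder. It assumes only `Content-Length` bodies, with no chunked transfer encoding and no read-until-close for HTTP/1.0. Names are hypothetical. The key idea is that bytes are buffered, and each state only advances once it has a complete CRLF-terminated line or enough body bytes, so chunk boundaries never matter.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
enum State {
    StatusLine,
    Headers,
    Body,
    Done,
}

#[derive(Debug, Default)]
pub struct Response {
    pub status: u16,
    pub headers: Vec<(String, String)>,
    pub body: Vec<u8>,
}

pub struct Decoder {
    state: State,
    buf: Vec<u8>, // received bytes not yet consumed by any state
    content_length: usize,
    pub response: Response,
}

impl Decoder {
    pub fn new() -> Self {
        Decoder { state: State::StatusLine, buf: Vec::new(), content_length: 0, response: Response::default() }
    }

    pub fn is_done(&self) -> bool {
        self.state == State::Done
    }

    /// Accepts the next chunk of bytes, however the input happens to be split.
    pub fn feed(&mut self, chunk: &[u8]) {
        self.buf.extend_from_slice(chunk);
        loop {
            match self.state {
                State::StatusLine | State::Headers => {
                    // Wait until a complete CRLF-terminated line is buffered
                    let pos = match self.buf.windows(2).position(|w| w == &b"\r\n"[..]) {
                        Some(pos) => pos,
                        None => return,
                    };
                    let raw: Vec<u8> = self.buf.drain(..pos + 2).take(pos).collect();
                    let line = String::from_utf8_lossy(&raw).into_owned();
                    if self.state == State::StatusLine {
                        // "HTTP/1.1 200 OK" -> 200 (0 if malformed)
                        self.response.status = line.split(' ').nth(1).and_then(|s| s.parse().ok()).unwrap_or(0);
                        self.state = State::Headers;
                    } else if line.is_empty() {
                        // A blank line ends the headers
                        self.state = if self.content_length == 0 { State::Done } else { State::Body };
                    } else if let Some((name, value)) = line.split_once(':') {
                        let (name, value) = (name.trim().to_string(), value.trim().to_string());
                        if name.eq_ignore_ascii_case("content-length") {
                            self.content_length = value.parse().unwrap_or(0);
                        }
                        self.response.headers.push((name, value));
                    }
                }
                State::Body => {
                    // Take only as many bytes as the body still needs
                    let needed = self.content_length - self.response.body.len();
                    let take = needed.min(self.buf.len());
                    self.response.body.extend(self.buf.drain(..take));
                    if self.response.body.len() < self.content_length {
                        return; // wait for more data
                    }
                    self.state = State::Done;
                }
                State::Done => return,
            }
        }
    }
}
```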

Next steps

- Actually look at HTTPS


Changes

- 0784aeb: Started work on the HTTP client that will be sending requests and receiving responses. The next commit should have the actual sending moved into a separate function so that different HTTP protocol versions can send requests differently.

- ba895e8: I added integrity checks to requests, different send methods depending on protocol, basic response decoding (only enough for HTTP/0.9 for now), and a basic Client struct so you can specify an address once and send requests to it multiple times.
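The per-protocol differences on the sending side are small but real. HTTP/0.9 requests are a bare `GET /path` line with no version or headers, HTTP/1.0 adds the version, and HTTP/1.1 requires a `Host` header.

Here is a hypothetical sketch of building each. A couple of example checks stand in for the kind of "integrity checks" mentioned above; the actual checks in ba895e8 may differ.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Version {
    Http09,
    Http10,
    Http11,
}

/// Serializes a request line (plus any required headers) for the given version.
pub fn build_request(version: Version, method: &str, host: &str, path: &str) -> Result<String, String> {
    // Example checks a client might run before sending anything
    if version == Version::Http09 && method != "GET" {
        return Err(format!("HTTP/0.9 only supports GET, not {}", method));
    }
    if !path.starts_with('/') {
        return Err(format!("path must start with '/': {:?}", path));
    }
    Ok(match version {
        // HTTP/0.9: bare request line, no version and no headers
        Version::Http09 => format!("GET {}\r\n", path),
        // HTTP/1.0: versioned request line; headers are optional
        Version::Http10 => format!("{} {} HTTP/1.0\r\n\r\n", method, path),
        // HTTP/1.1: the Host header is mandatory
        Version::Http11 => format!("{} {} HTTP/1.1\r\nHost: {}\r\nConnection: close\r\n\r\n", method, path, host),
    })
}
```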

Next Steps

- Implement response handling for HTTP/1.0 and HTTP/1.1

- Look into adding support for HTTPS


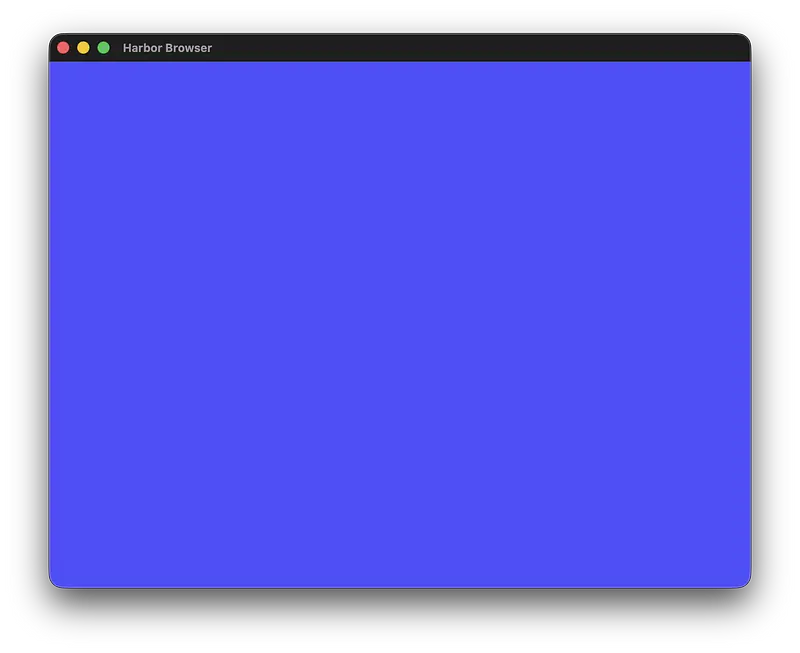

Harbor Browser

Harbor Browser is going to be a browser where I write every major service myself. This means everything from:

- HTTP client

- HTML/CSS parser and renderer

- JS interpreter

And anything else a traditional browser would have must be written by me. I’m trying to limit myself to as few dependencies as possible.

Progress

I decided to use winit and wgpu as the windowing and GPU abstraction layers. They’ll be doing a lot of the heavy lifting for this project, I suspect. I got a basic window opening and cleared with a color. It doesn’t sound like much but that was 250 lines of code :|