I’ve officially escaped the gravitational pull of my desktop. After a long, caffeinated battle between Python’s logic and JavaScript’s quirks, the project has successfully landed on the Web.

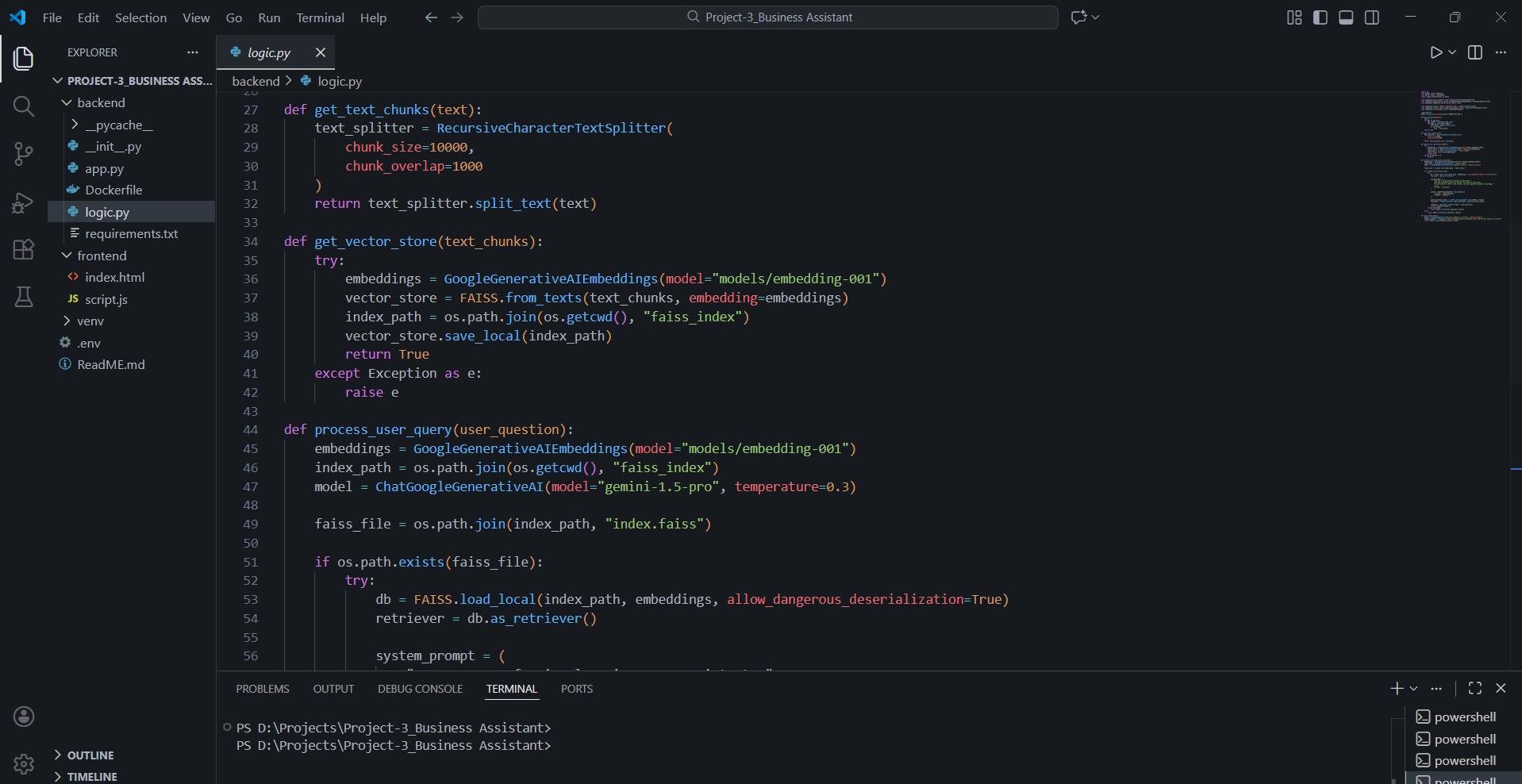

🔌 Bridging the Galaxy (Python ➡ JS)

Converting the physics engine from Python to JavaScript was like trying to explain orbital mechanics to a goldfish. I had to translate every vector, every gravitational pull, and every trigonometric function into the language of the browser. It wasn’t just a “copy-paste” job; it was a complete brain transplant for the spacecraft.
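To show roughly what that translation involves, here is a minimal sketch of a single gravity update in plain JavaScript. It is not the project's actual engine. It assumes simple Newtonian gravity toward one body, integrated with semi-implicit Euler. The names `G`, `applyGravity`, and the body shape `{ x, y, vx, vy, mass }` are hypothetical, chosen only for illustration.

```javascript
// Hypothetical gravitational constant in simulation units (not the real-world G).
const G = 1000;

// One semi-implicit Euler step of Newtonian gravity pulling `ship` toward `planet`.
// Both objects are assumed (hypothetically) to look like { x, y, vx, vy, mass }.
function applyGravity(ship, planet, dt) {
  const dx = planet.x - ship.x;
  const dy = planet.y - ship.y;
  const dist = Math.hypot(dx, dy);                 // Python: math.hypot(dx, dy)
  if (dist === 0) return ship;                     // avoid division by zero at the center
  const accel = (G * planet.mass) / (dist * dist); // a = G * M / r^2
  const angle = Math.atan2(dy, dx);                // Python: math.atan2(dy, dx)
  // Update velocity first, then position (semi-implicit Euler).
  ship.vx += Math.cos(angle) * accel * dt;
  ship.vy += Math.sin(angle) * accel * dt;
  ship.x += ship.vx * dt;
  ship.y += ship.vy * dt;
  return ship;
}
```

Most of the mechanical porting is `math.` becoming `Math.`. The harder part is making sure the update order and units match the original Python, because small differences there change the orbits.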

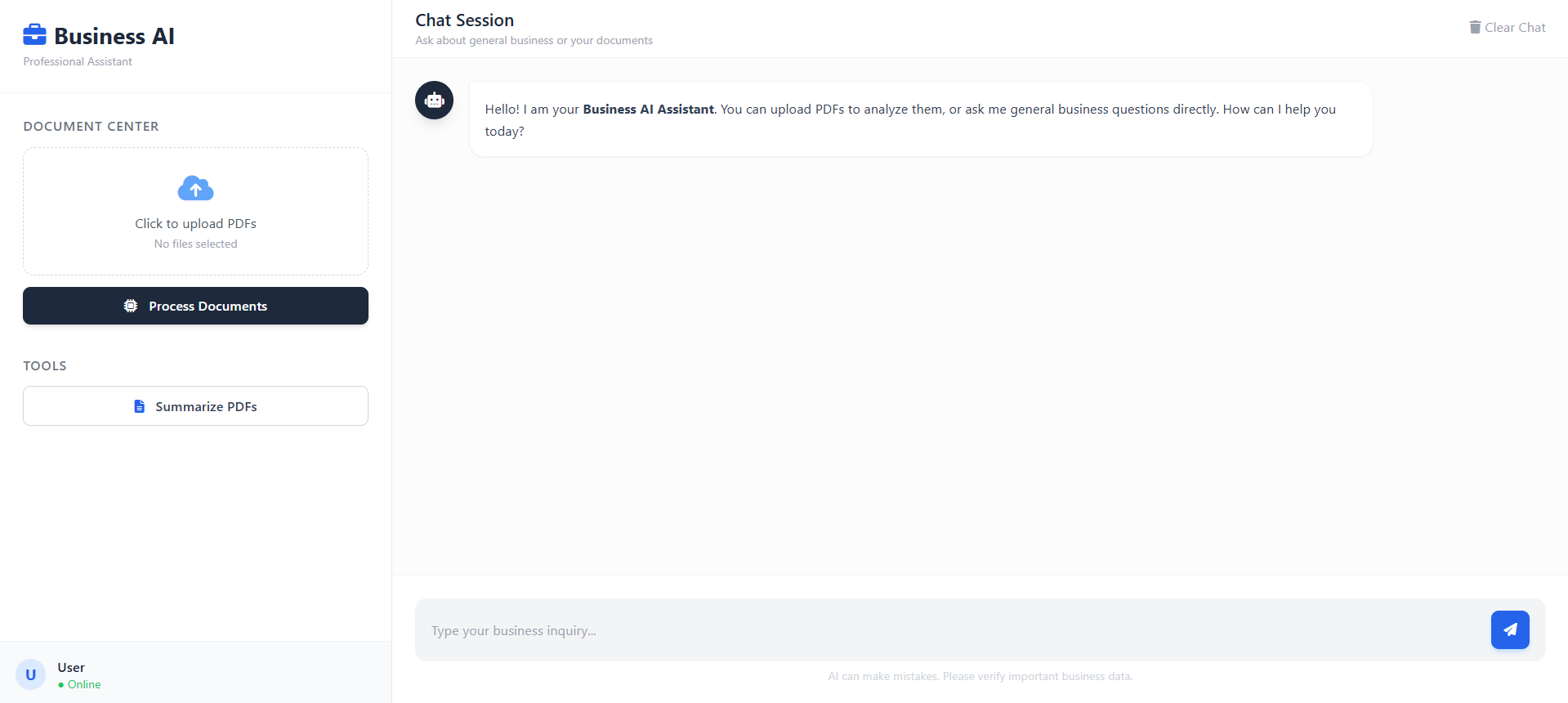

🎨 The Navy Blue Transformation

I decided the simulation needed to look as “cool” as the physics behind it, so I revamped the UI with a modern, sleek Navy Blue design. No more clunky windows; just a smooth, Canvas-based experience that runs at 60 FPS without breaking a sweat.
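Keeping a Canvas simulation smooth usually comes down to the game loop. One common pattern, assumed here rather than taken from the project, is a fixed-timestep accumulator driven by `requestAnimationFrame`. The physics always advances in 1/60 s steps, while drawing happens once per display frame. `createLoop`, `update`, `render`, and `STEP` are hypothetical names.

```javascript
// Fixed physics step of 1/60 s, so the simulation behaves the same on any display.
const STEP = 1 / 60;

// Returns a `tick(nowMs)` function suitable as a requestAnimationFrame callback.
// `update(dt)` advances the physics; `render()` draws the current state.
function createLoop(update, render) {
  let last = null;
  let accumulator = 0;
  return function tick(nowMs) {
    if (last !== null) {
      // Clamp long pauses (e.g. a background tab) to avoid a burst of catch-up steps.
      accumulator += Math.min((nowMs - last) / 1000, 0.25);
    }
    last = nowMs;
    // Run as many fixed physics steps as the elapsed time allows.
    while (accumulator >= STEP) {
      update(STEP);
      accumulator -= STEP;
    }
    render();
    // In a browser, schedule the next frame; skipped outside the browser (e.g. Node).
    if (typeof requestAnimationFrame === "function") {
      requestAnimationFrame(tick);
    }
  };
}
```

In the page, this would be started with something like `requestAnimationFrame(createLoop(update, render));`. Decoupling the physics step from the frame rate keeps orbits consistent even when a frame is occasionally dropped.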

🛰️ The Final Frontier

What exactly did I work on? I rebuilt the entire core using HTML5 Canvas and Vanilla JavaScript. I optimized the collision detection to ensure Jupiter feels as massive as it should, and I polished the click-and-drag launch system so you can yeet ships with surgical precision.
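The post doesn't show the code behind these two features, so here is a minimal sketch of how they are often done, under stated assumptions. Collision uses a circle-circle test that compares squared distances, which avoids a square root per check. The launch is assumed to be slingshot-style: drag away from the ship and release, and it flies the opposite way with speed proportional to the drag length, up to a cap. `collides`, `launchVelocity`, `LAUNCH_SCALE`, and `MAX_SPEED` are hypothetical names and values.

```javascript
// Hypothetical tuning constants for the launch.
const LAUNCH_SCALE = 0.05; // velocity per pixel of drag
const MAX_SPEED = 20;      // cap so a very long drag can't produce absurd speeds

// Circle-circle collision: compare squared distances to avoid Math.sqrt.
// Bodies are assumed (hypothetically) to look like { x, y, radius }.
function collides(a, b) {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  const r = a.radius + b.radius;
  return dx * dx + dy * dy <= r * r;
}

// Slingshot-style launch (an assumption): the ship flies opposite to the drag,
// with speed proportional to the drag length, capped at MAX_SPEED.
function launchVelocity(dragStart, dragEnd) {
  let vx = (dragStart.x - dragEnd.x) * LAUNCH_SCALE;
  let vy = (dragStart.y - dragEnd.y) * LAUNCH_SCALE;
  const speed = Math.hypot(vx, vy);
  if (speed > MAX_SPEED) {
    // Keep the direction, shrink the magnitude to the cap.
    vx = (vx / speed) * MAX_SPEED;
    vy = (vy / speed) * MAX_SPEED;
  }
  return { vx, vy };
}
```

Because the squared-distance test is so cheap, it can run against every planet for every ship on every frame. Giving Jupiter a large `radius` is then enough to make it "feel" big.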

The project is now live, responsive, and ready for anyone with a browser to test their luck against the laws of physics.

Stay grounded, but keep launching.
