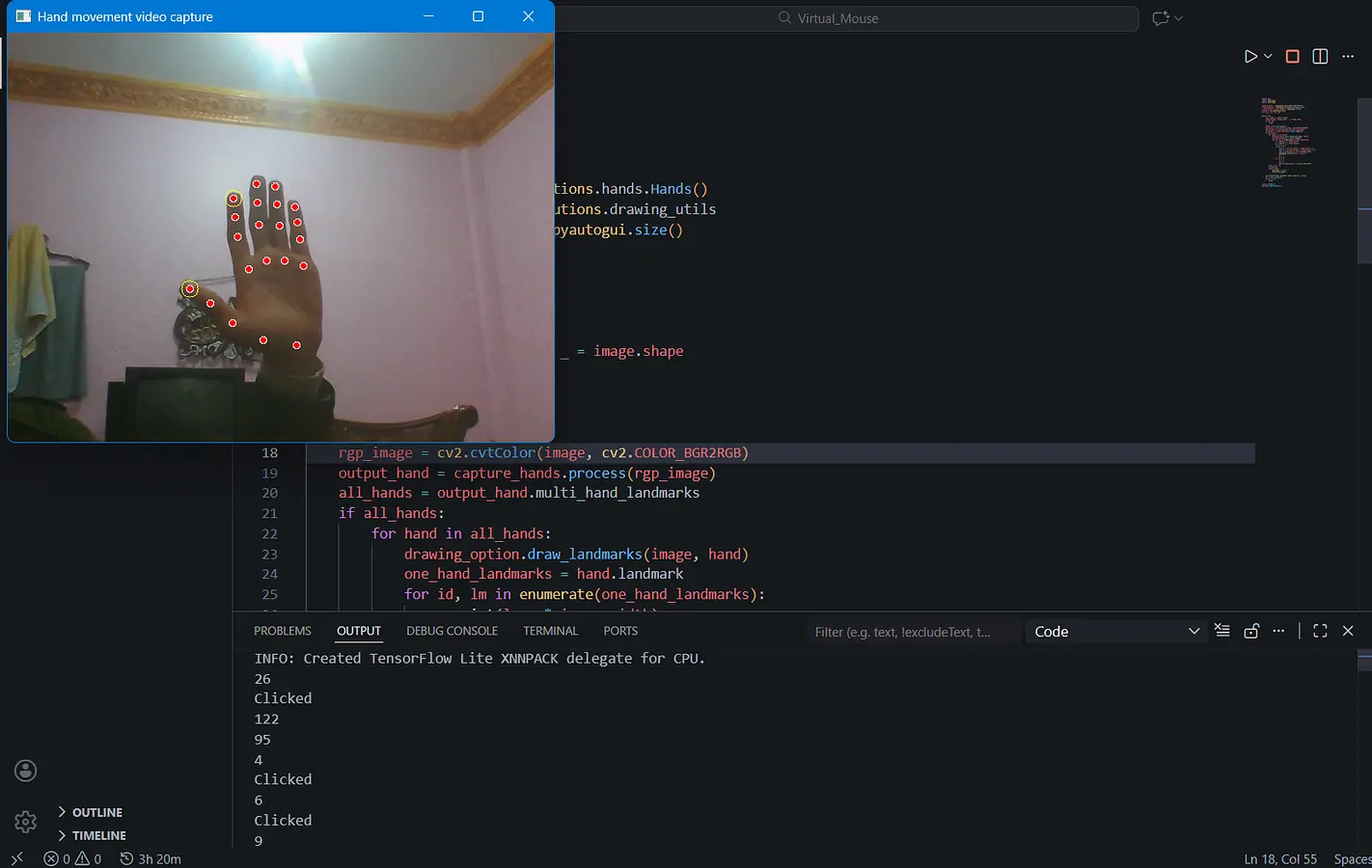

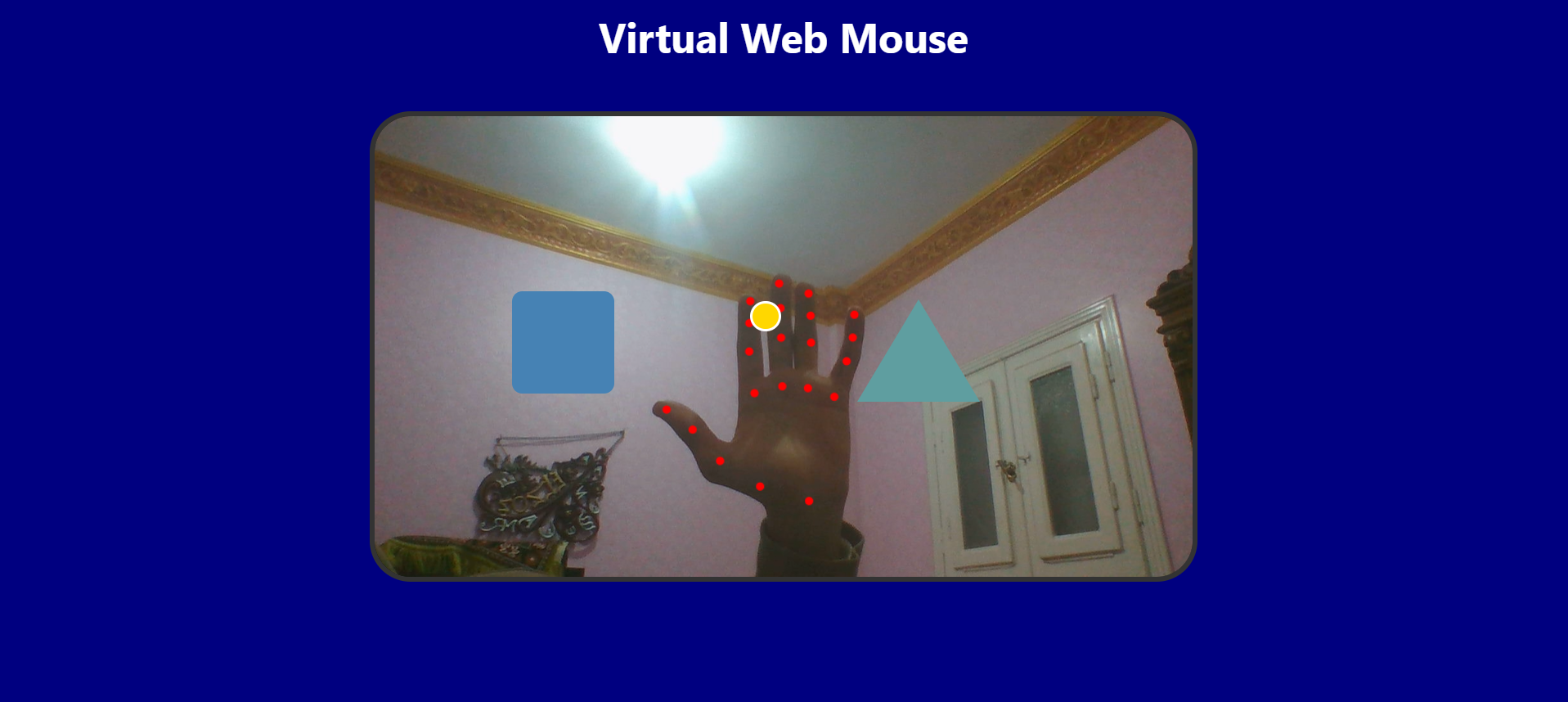

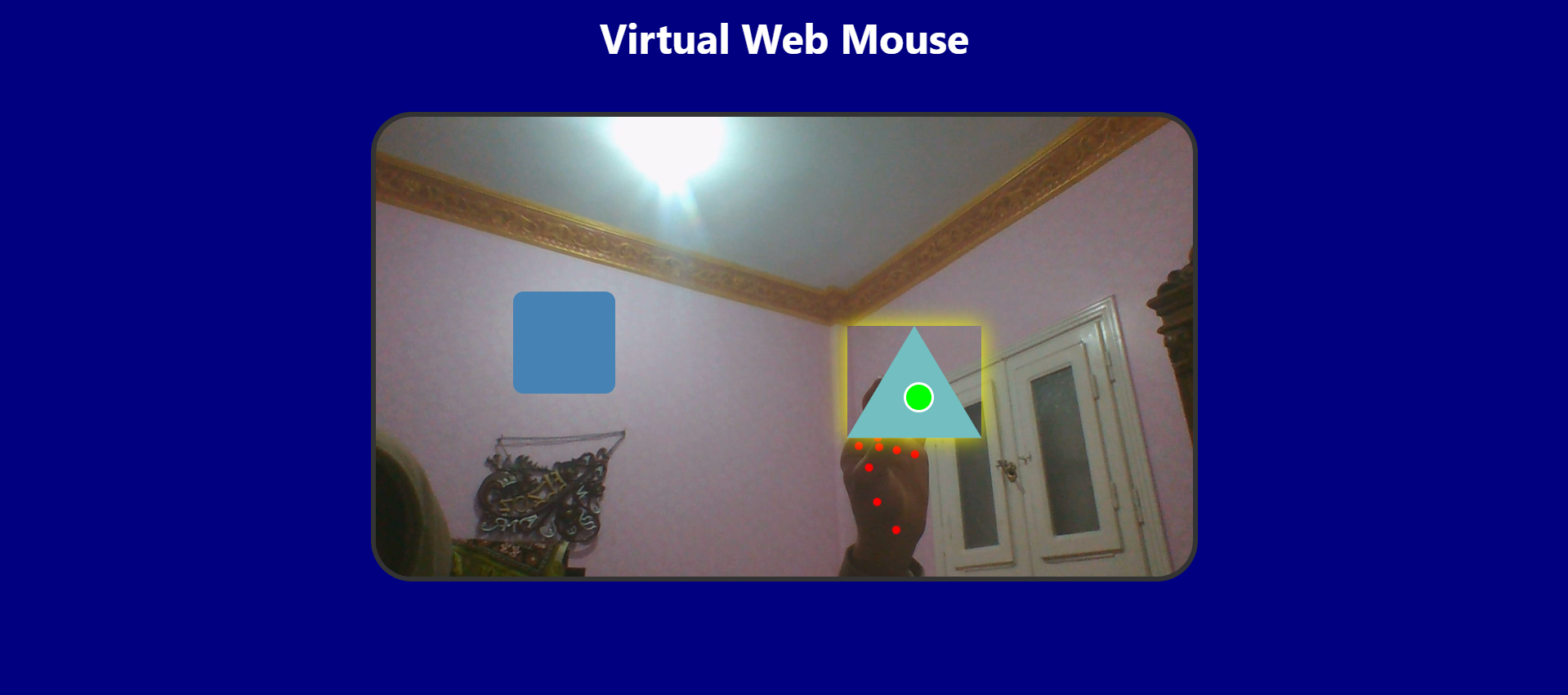

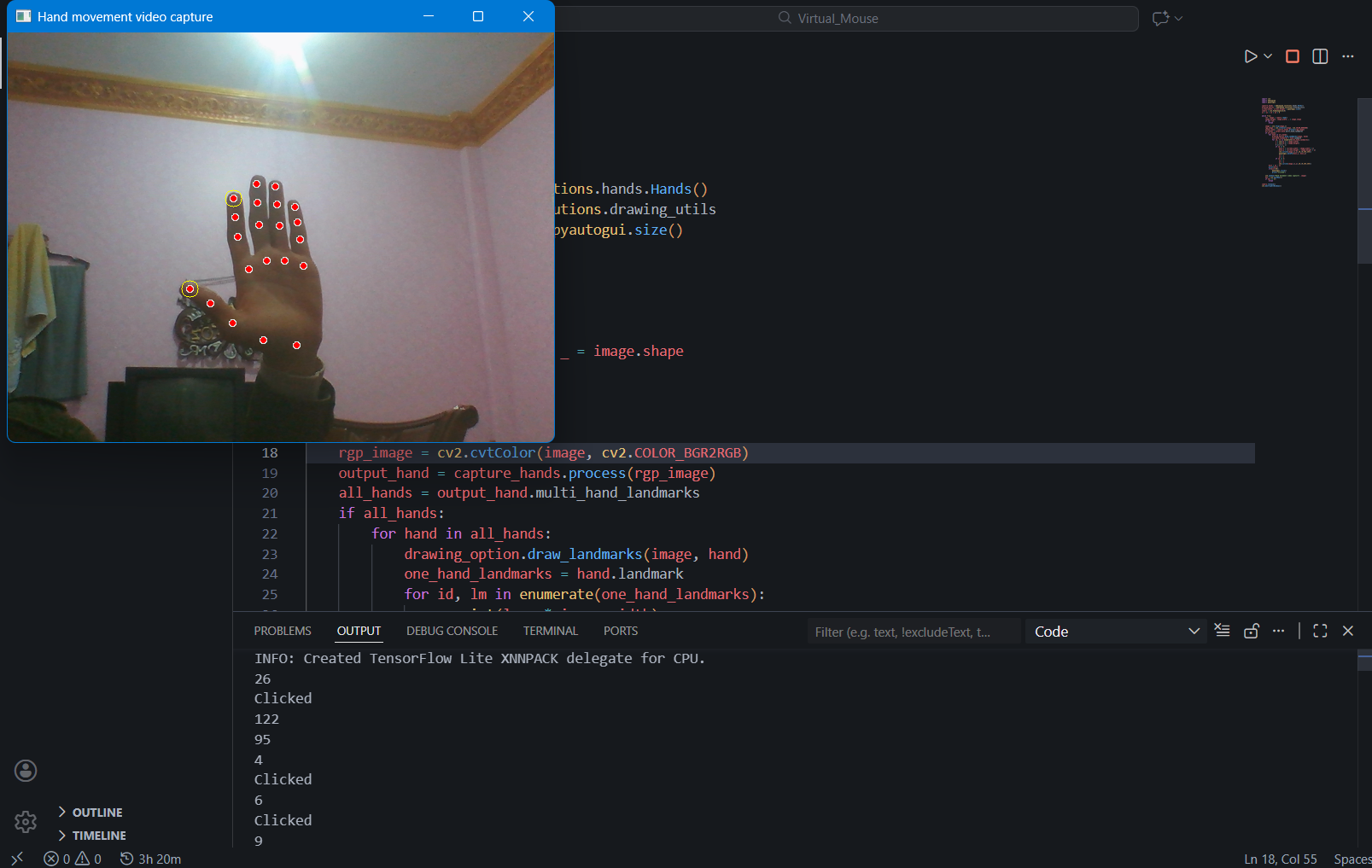

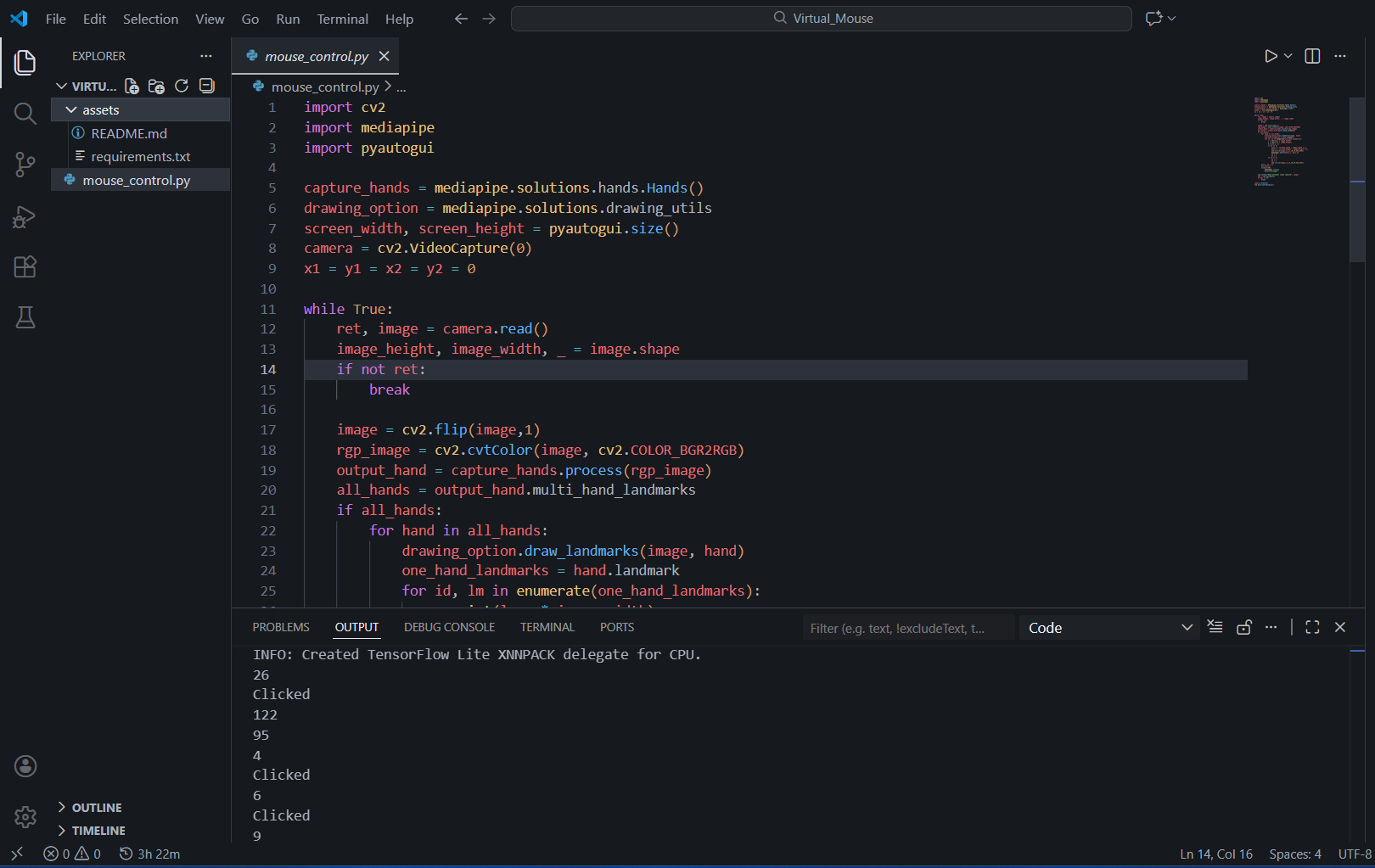

“I built an AI-powered Virtual Mouse that lets you control your computer with hand gestures! Using OpenCV and MediaPipe, it tracks your hand in real time. I mapped the index finger landmarks to screen coordinates for cursor movement and implemented a ‘pinch’ gesture (measuring vertical distance) to trigger left-clicks. It’s basically a touchless mouse experience built with Python!”
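As a rough illustration of the mapping and pinch check described above, here is a minimal sketch in plain Python. It assumes MediaPipe-style normalized landmark coordinates (x and y in the 0..1 range) and assumes the pinch compares the index fingertip with the thumb tip; the post does not say which two points are measured. The function names, the `mirror` flag, and the `0.05` threshold are hypothetical, not taken from the project.

```python
def to_screen(norm_x, norm_y, screen_w, screen_h, mirror=True):
    """Map a normalized landmark position (0..1) to pixel coordinates."""
    # Clamp first: a landmark can land slightly outside the camera frame.
    nx = min(max(norm_x, 0.0), 1.0)
    ny = min(max(norm_y, 0.0), 1.0)
    if mirror:
        # Assumption: flip horizontally so moving the hand right
        # moves the cursor right when facing a webcam.
        nx = 1.0 - nx
    x = int(nx * (screen_w - 1))
    y = int(ny * (screen_h - 1))
    return x, y


def is_pinch(index_tip_y, thumb_tip_y, threshold=0.05):
    """Return True when the two fingertips are vertically close.

    The threshold is in normalized units and is a hypothetical value
    that would need tuning for a real camera setup.
    """
    return abs(index_tip_y - thumb_tip_y) < threshold


if __name__ == "__main__":
    # Example: a fingertip in the middle of the frame on a 1920x1080 screen.
    print(to_screen(0.5, 0.5, 1920, 1080, mirror=False))
    print(is_pinch(0.50, 0.52))
```

In a full loop, the result of `to_screen` would typically be passed to `pyautogui.moveTo(x, y)`, and a detected pinch would trigger `pyautogui.click()`.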

Who’s working on it?

“Just me! I handled the computer vision logic, coordinate mapping, and system integration using PyAutoGUI.”

What’s next?

“Next, I’m planning to add ‘Right Click’ using a different gesture and to implement a smoothing algorithm that reduces cursor jitter for a more fluid experience.”
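The post does not say which smoothing algorithm is planned. One common option is an exponential moving average, sketched below under that assumption. The `CursorSmoother` class and the `alpha` parameter are hypothetical names chosen for illustration.

```python
class CursorSmoother:
    """Exponential moving average over cursor positions.

    alpha close to 1.0 follows the hand closely (more jitter);
    alpha close to 0.0 is smoother but lags behind the hand.
    """

    def __init__(self, alpha=0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in the range (0, 1]")
        self.alpha = alpha
        self._pos = None  # no previous position until the first update

    def update(self, x, y):
        """Blend the new raw position with the previous smoothed one."""
        if self._pos is None:
            # First sample: nothing to average against yet.
            self._pos = (float(x), float(y))
        else:
            px, py = self._pos
            self._pos = (
                px + self.alpha * (x - px),
                py + self.alpha * (y - py),
            )
        return self._pos


if __name__ == "__main__":
    smoother = CursorSmoother(alpha=0.5)
    for raw in [(100, 100), (200, 100), (200, 100)]:
        print(smoother.update(*raw))
```

The smoothed position would be rounded to integers before being handed to the mouse-control call. A lower `alpha` trades responsiveness for stability, so the value is best tuned by feel.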