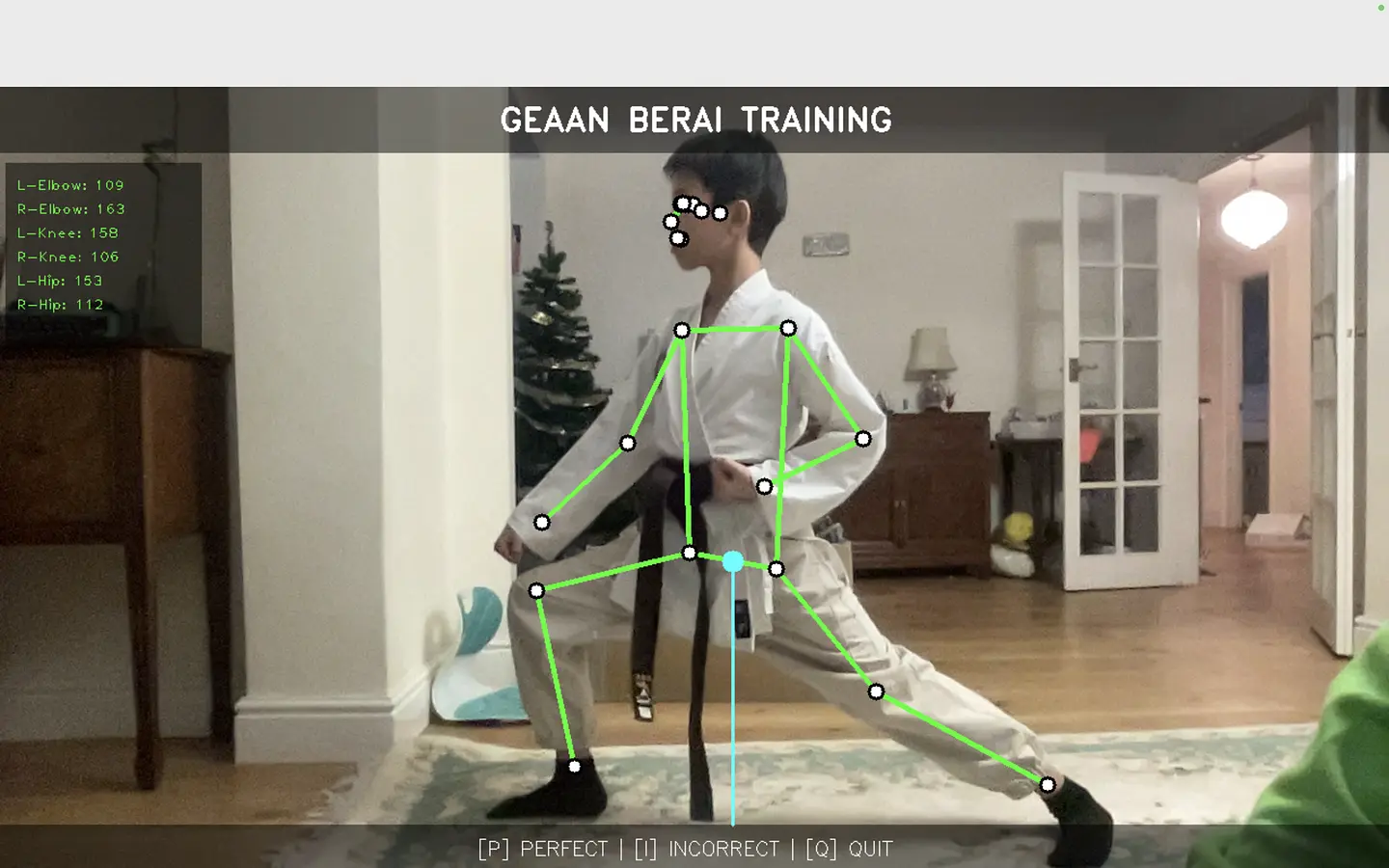

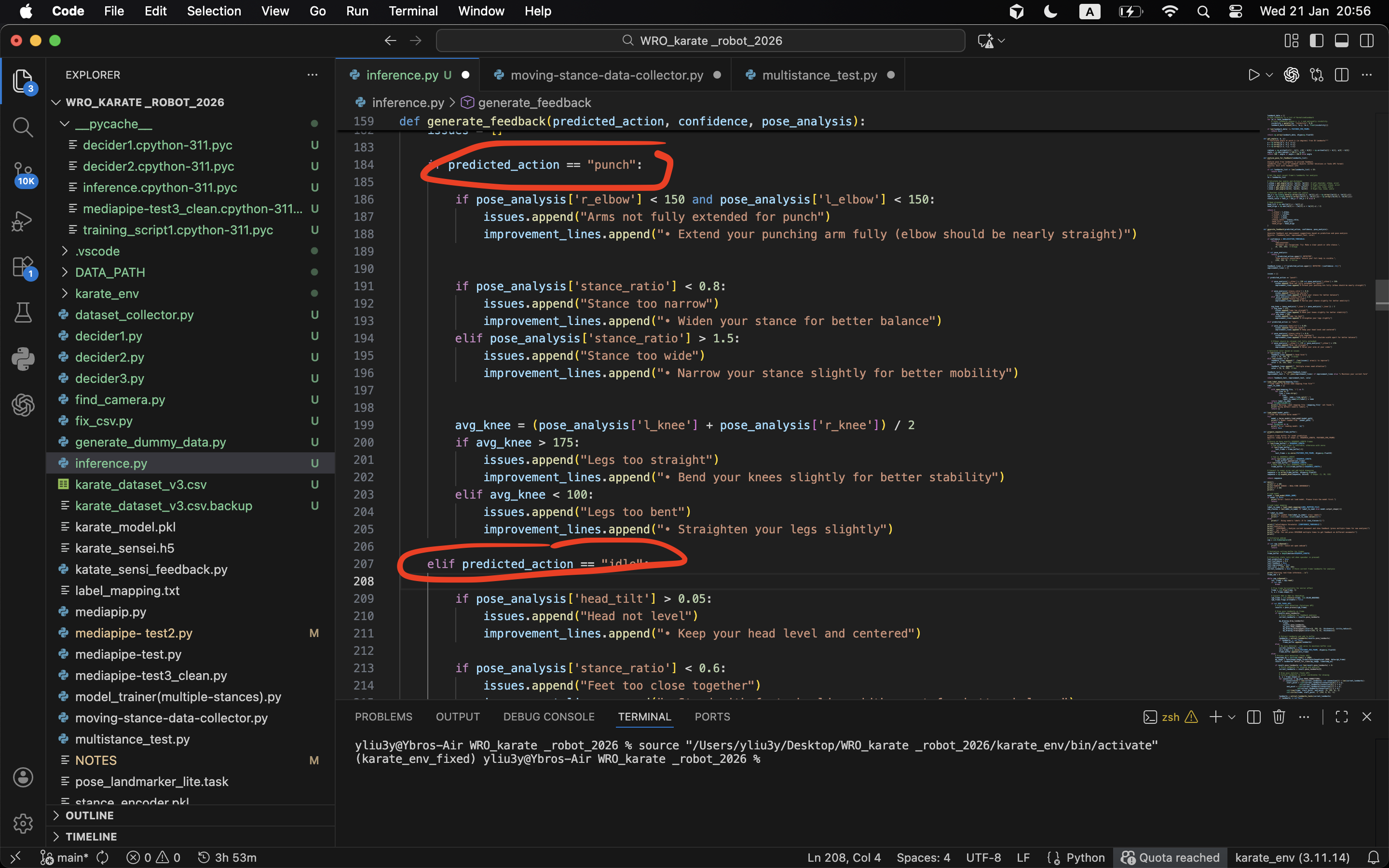

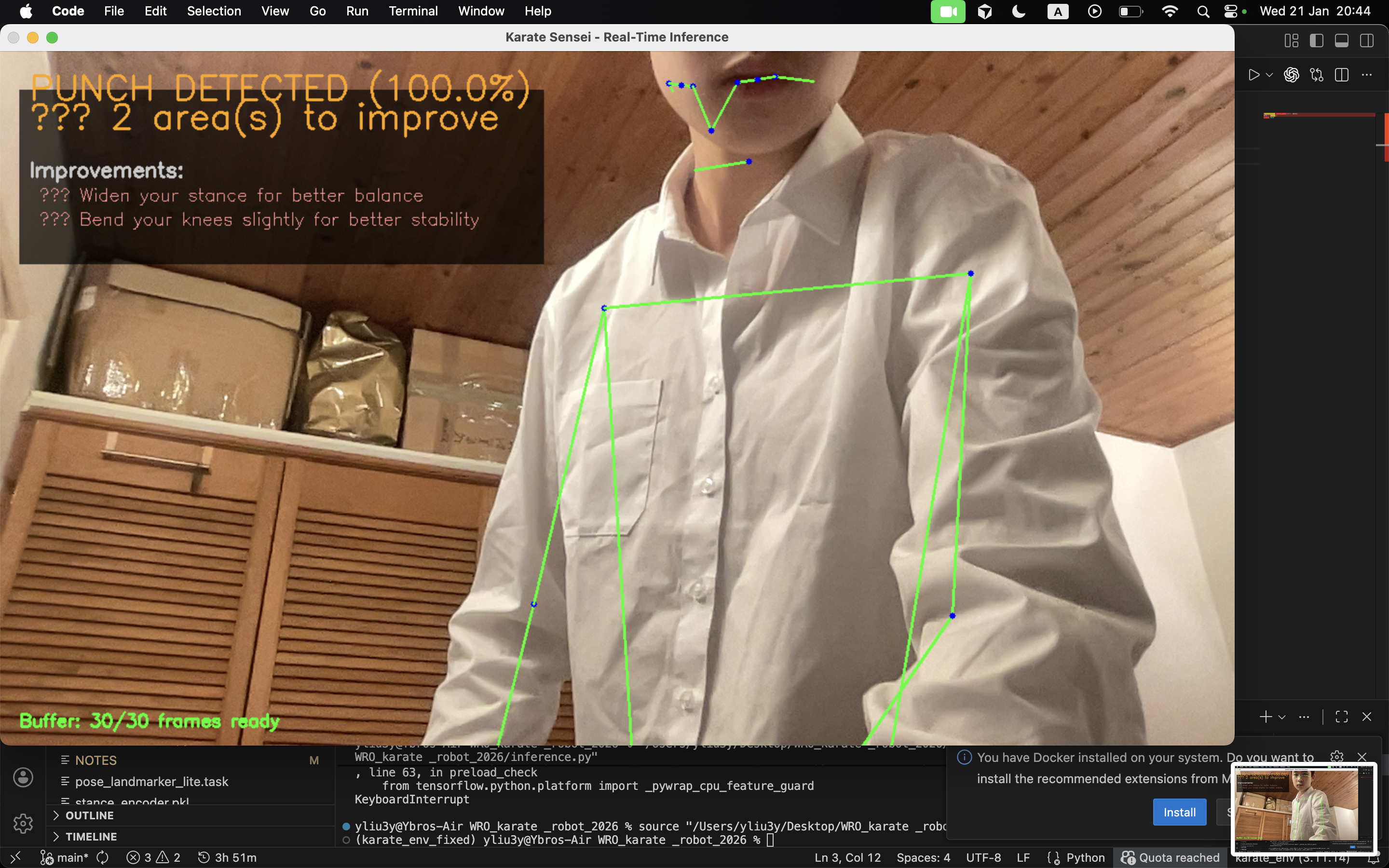

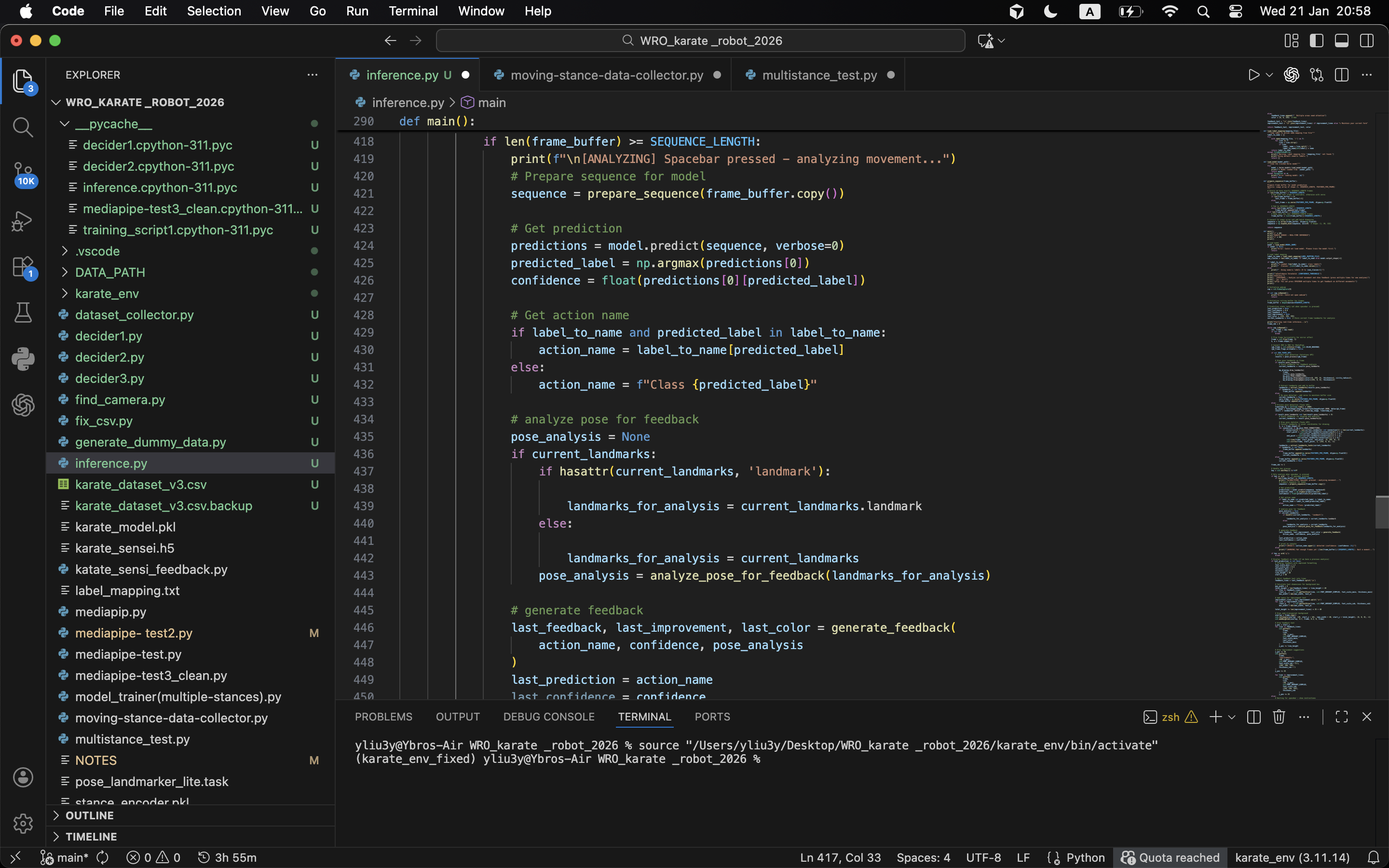

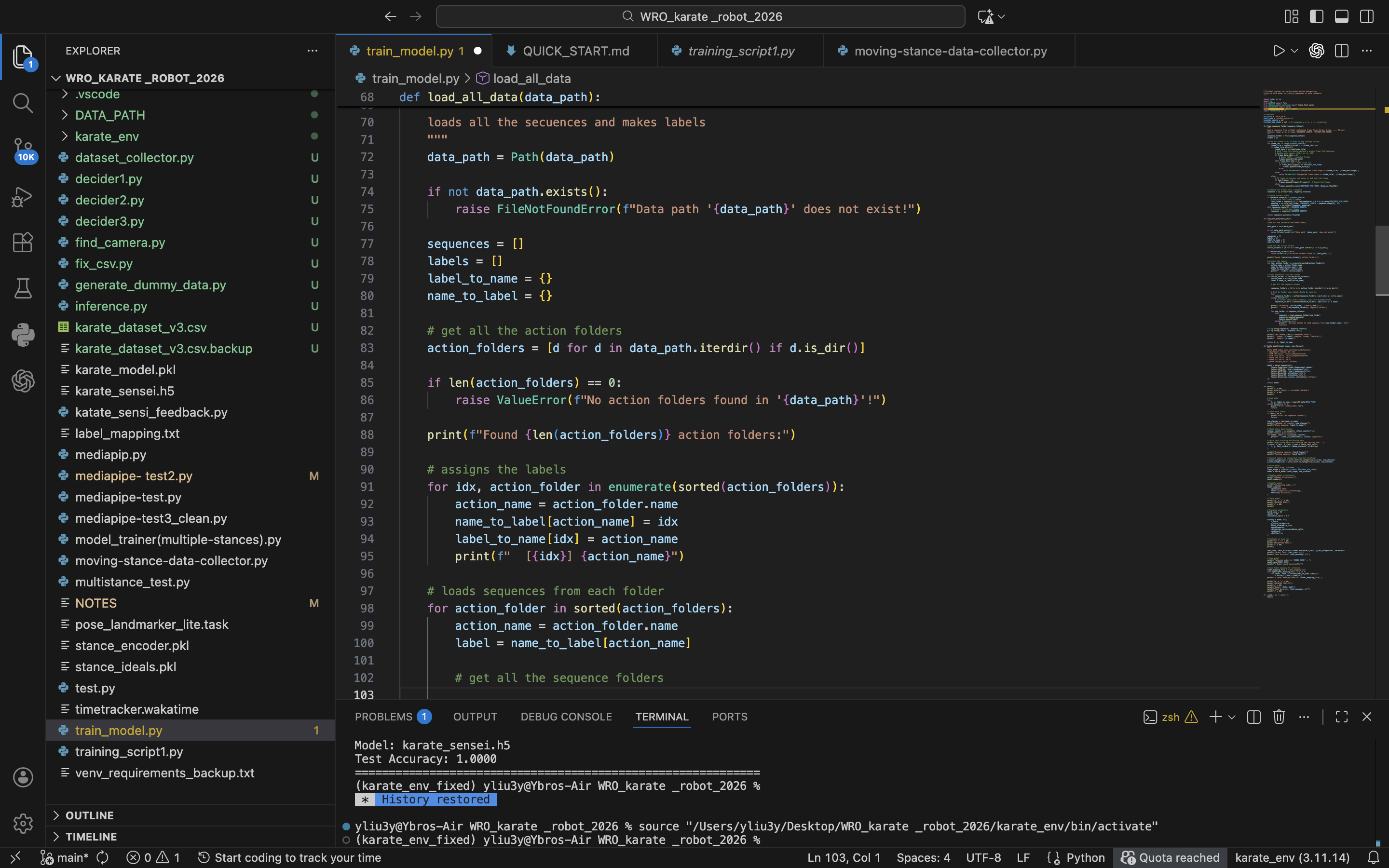

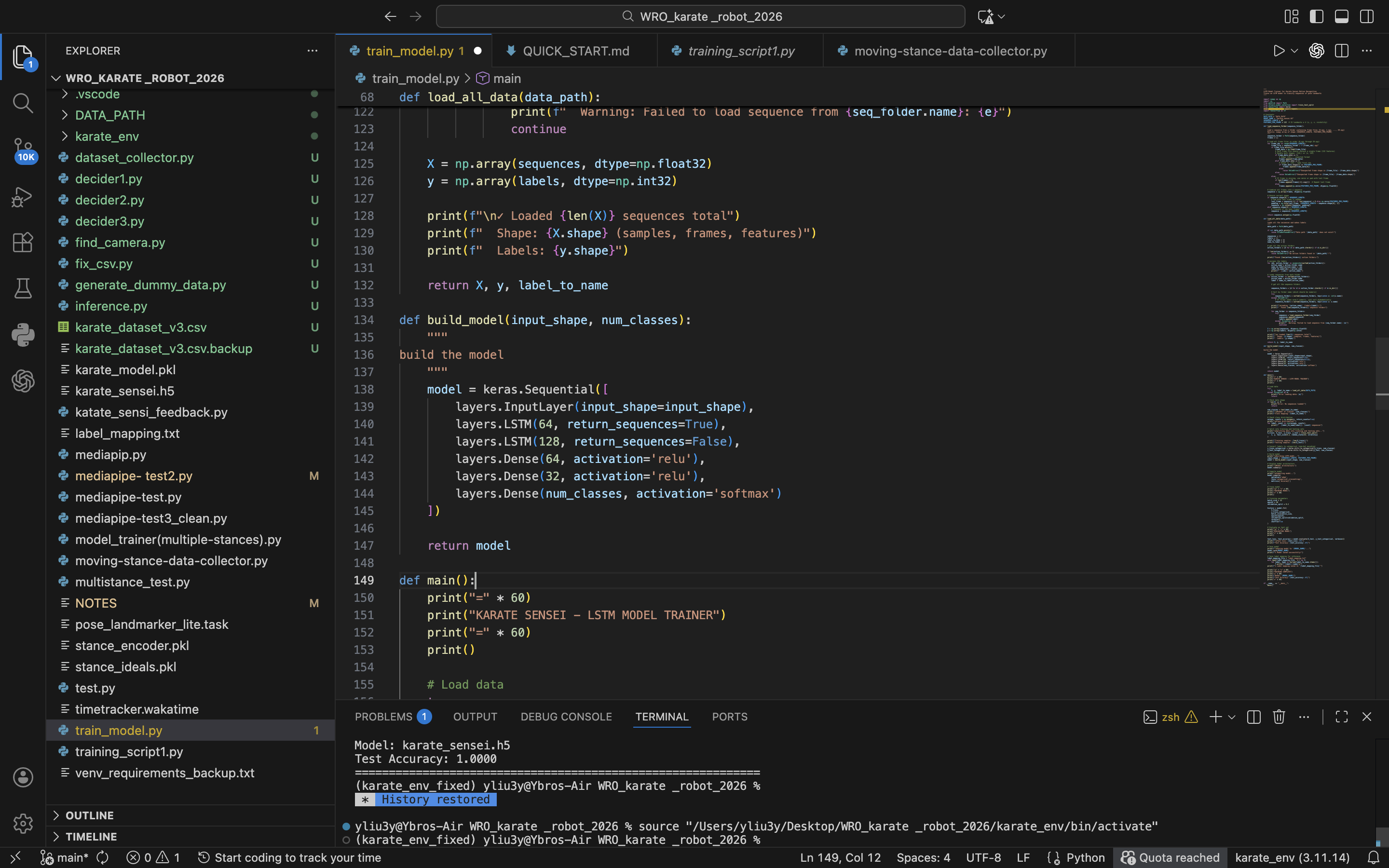

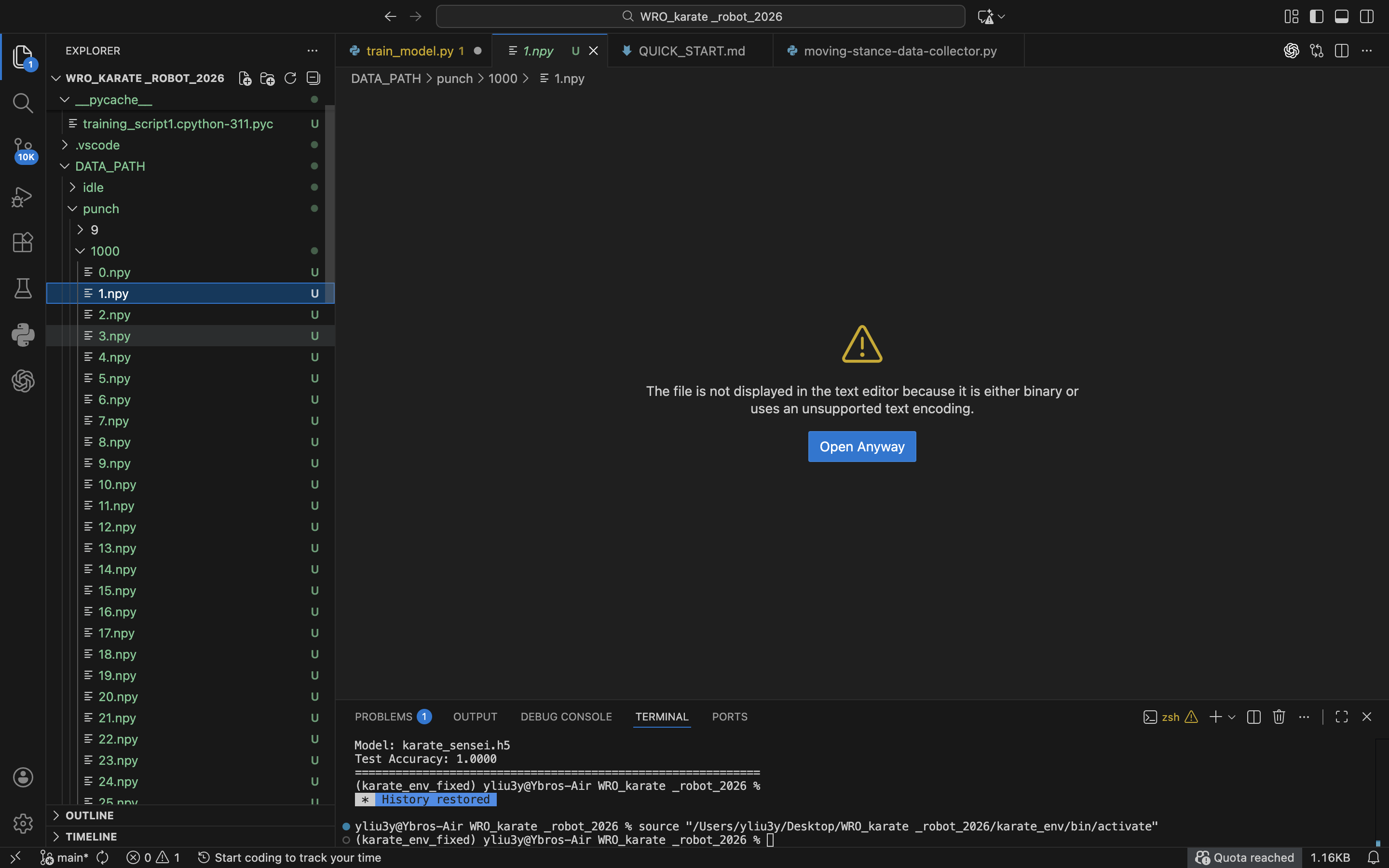

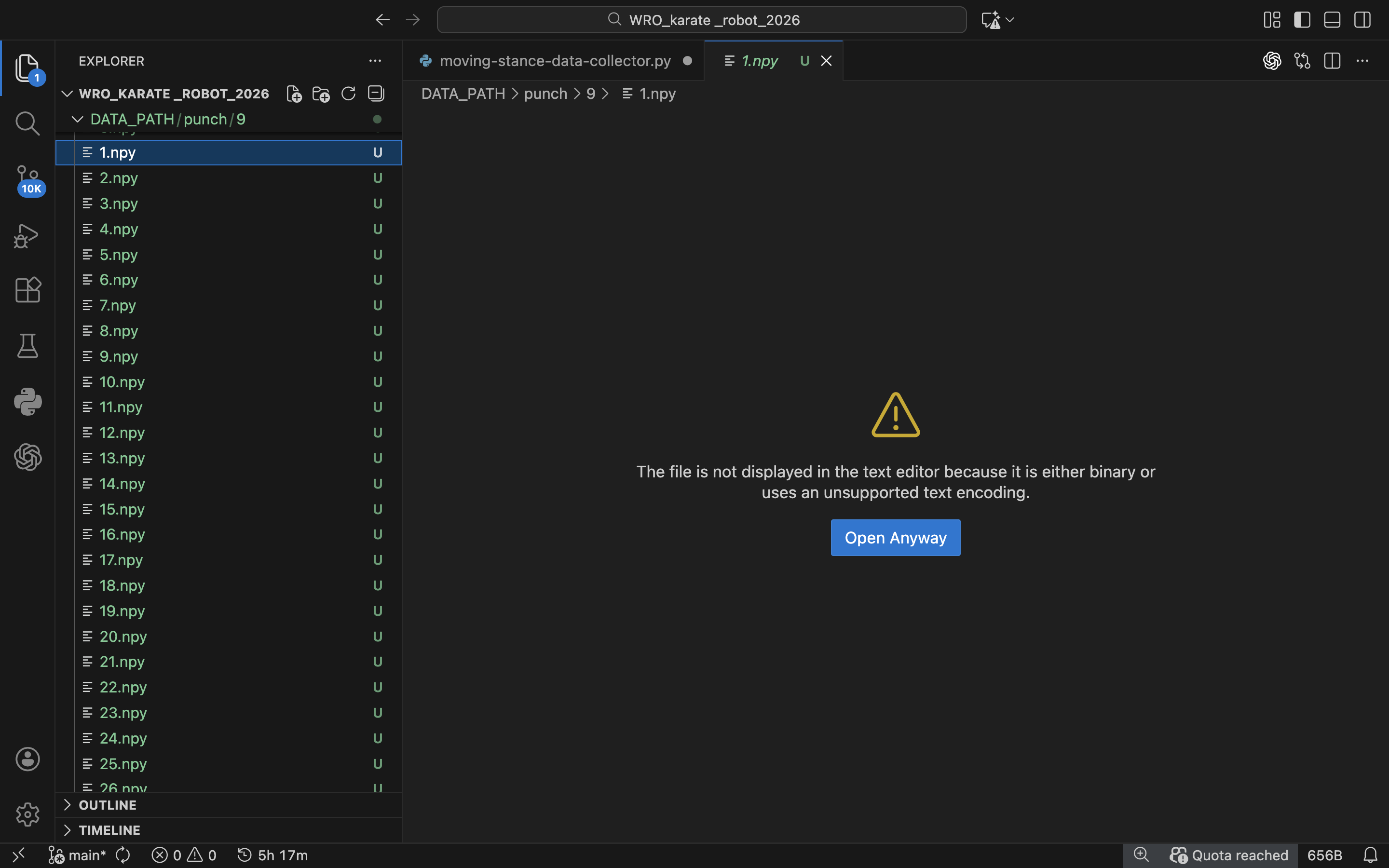

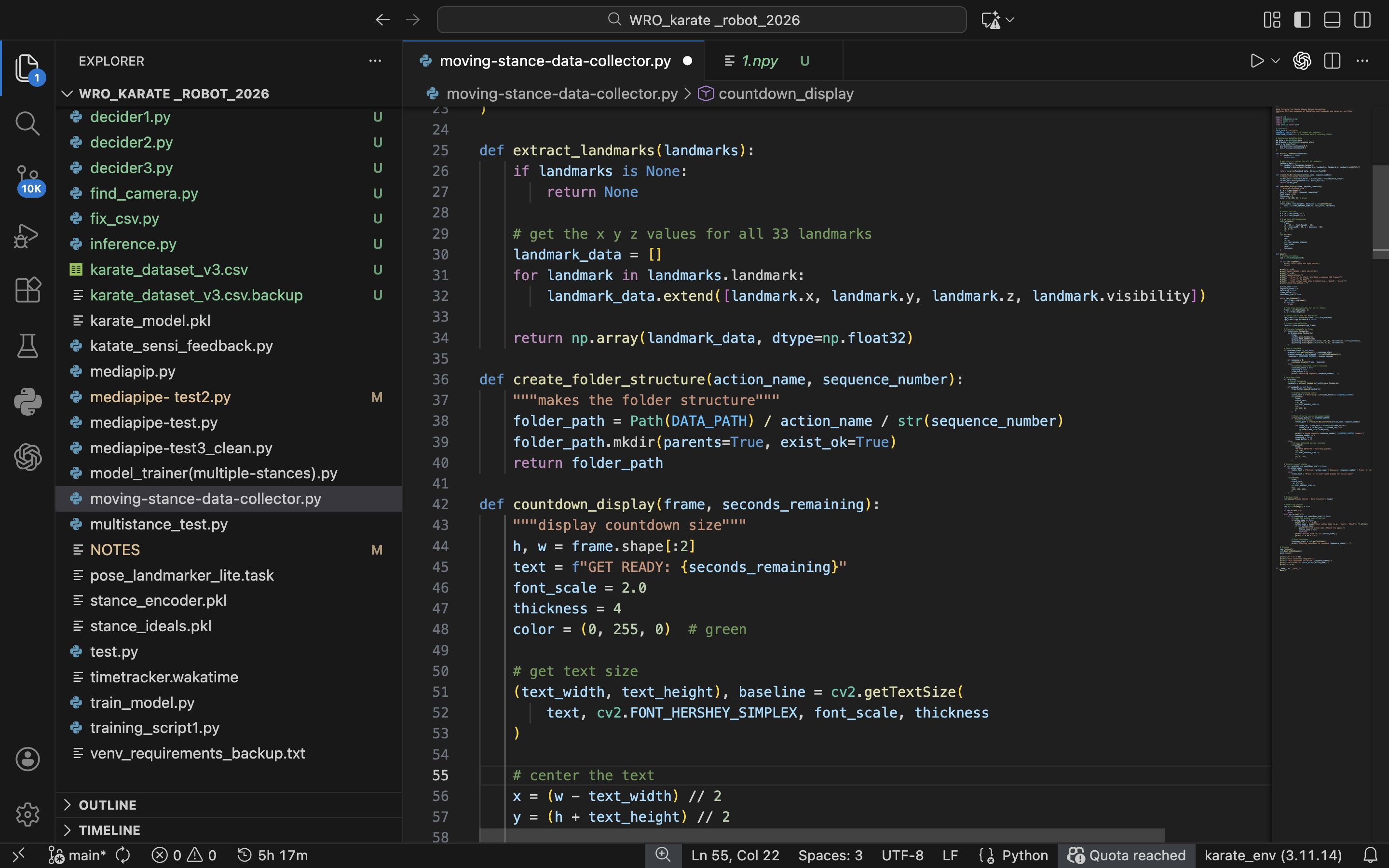

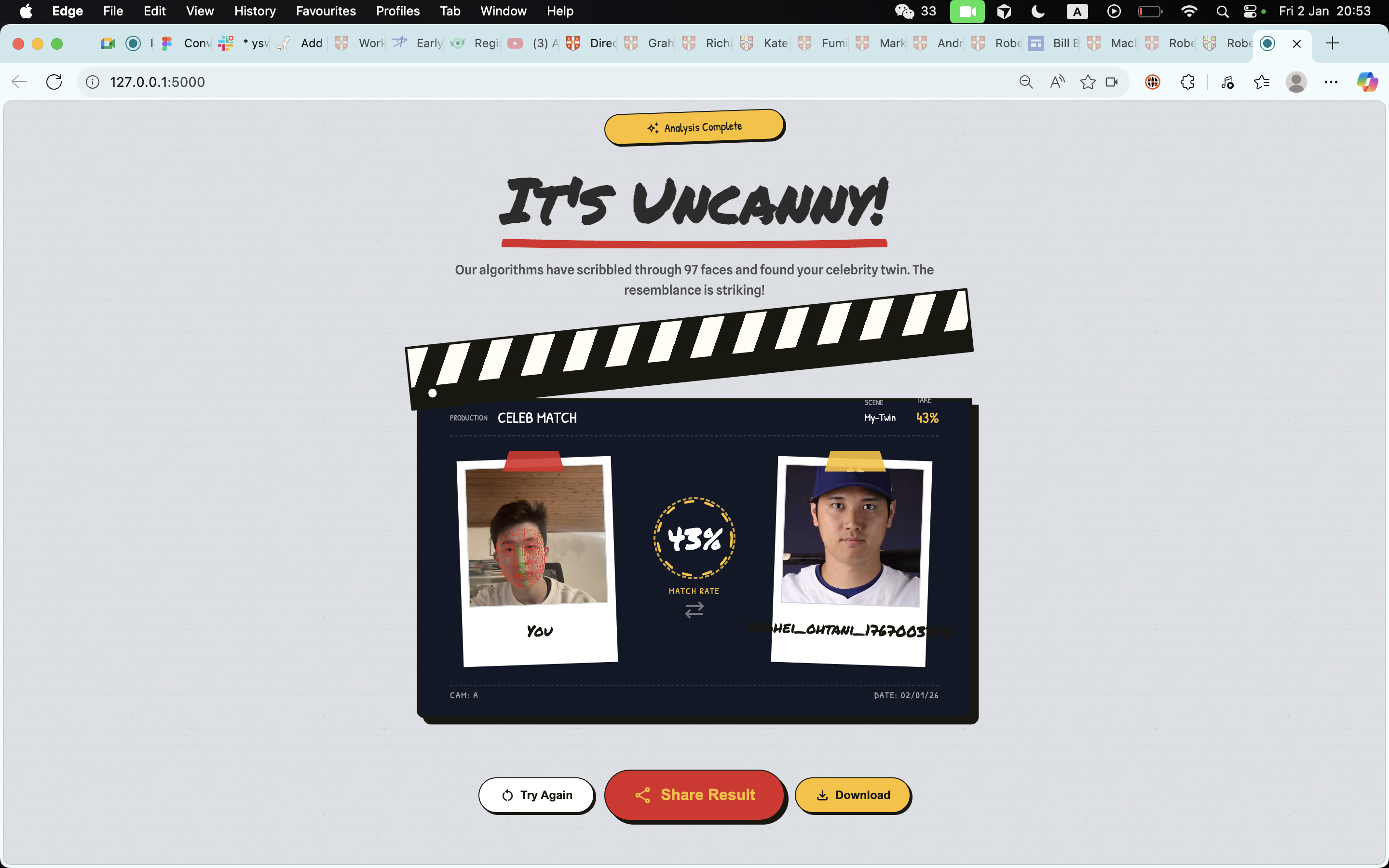

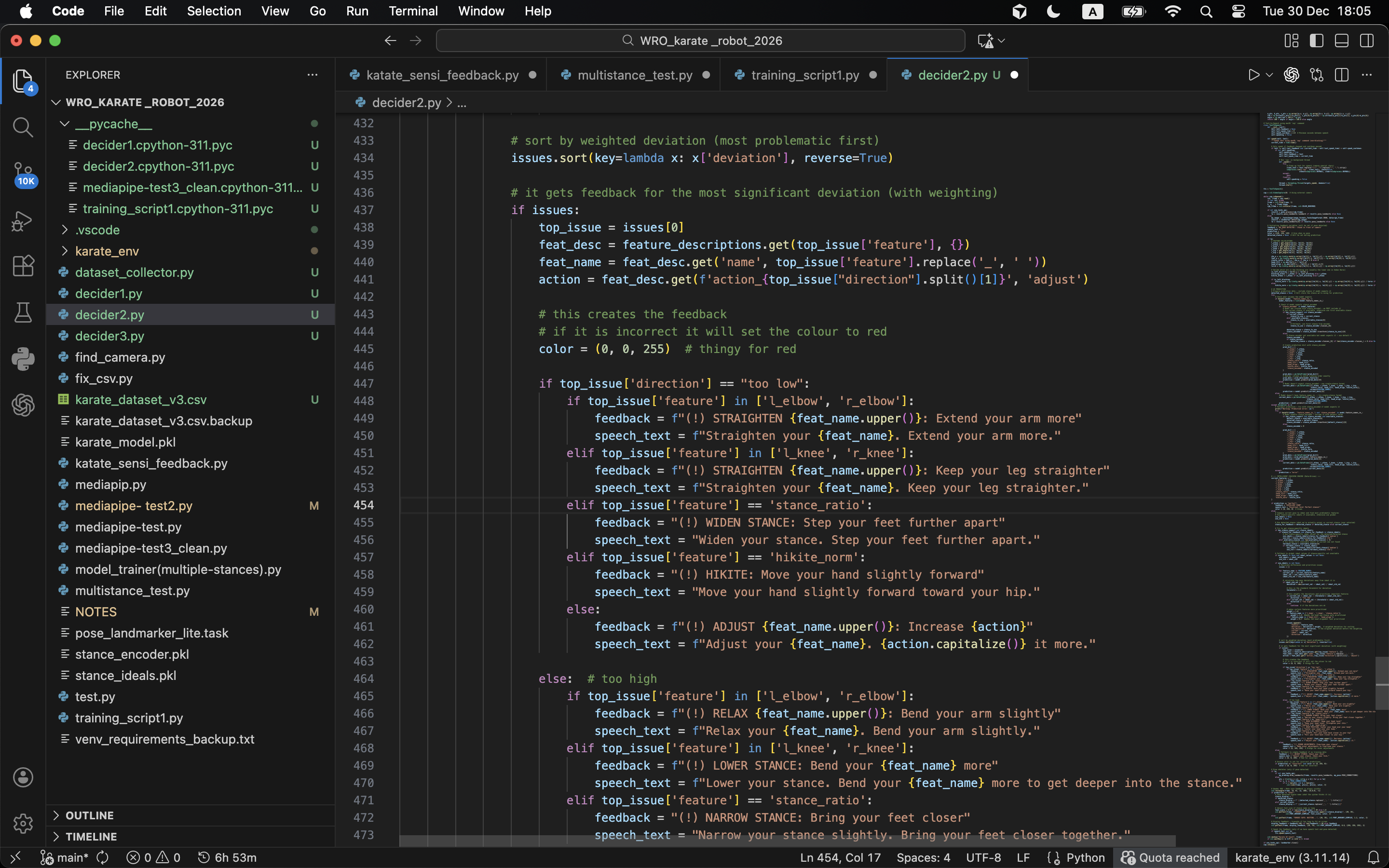

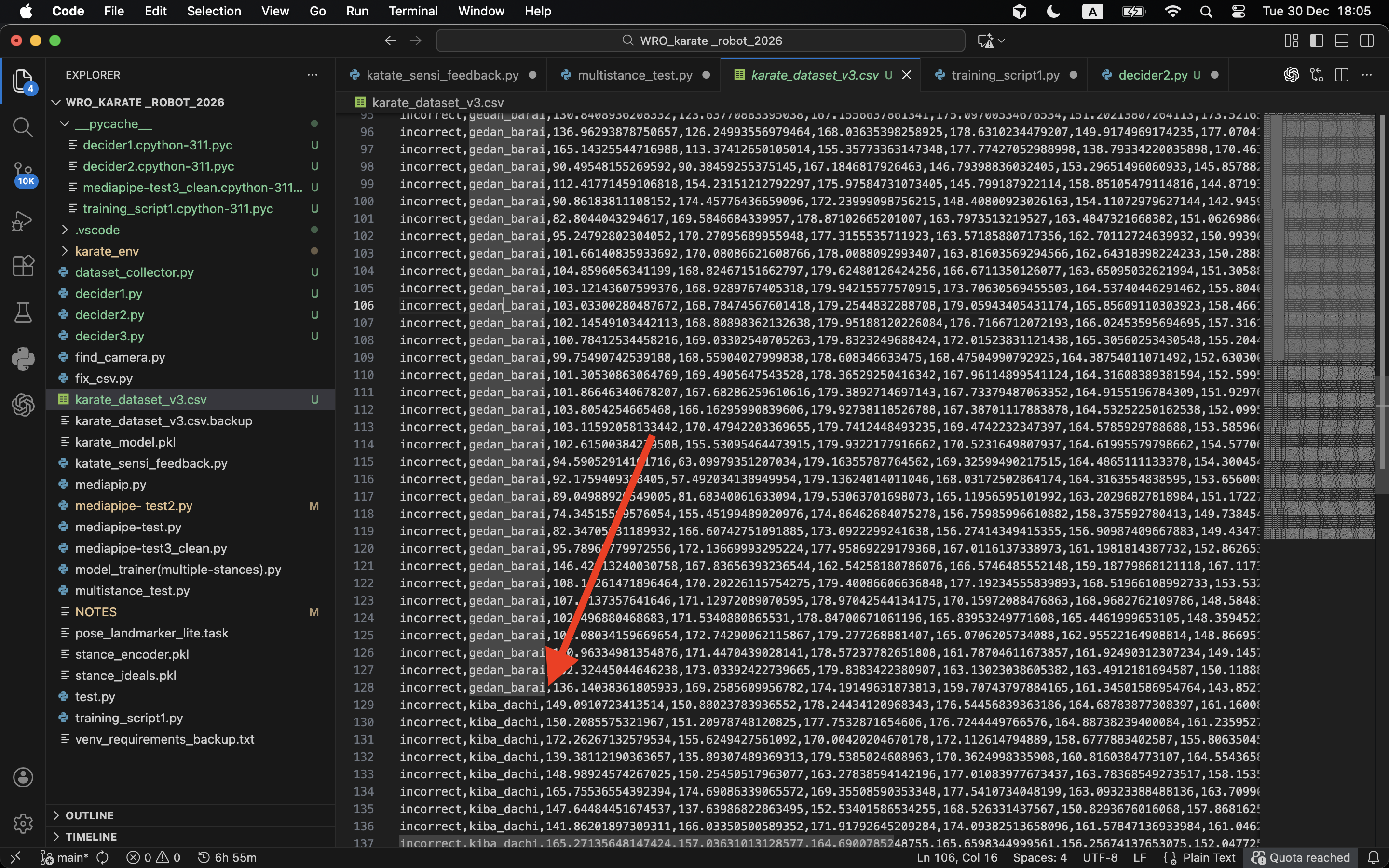

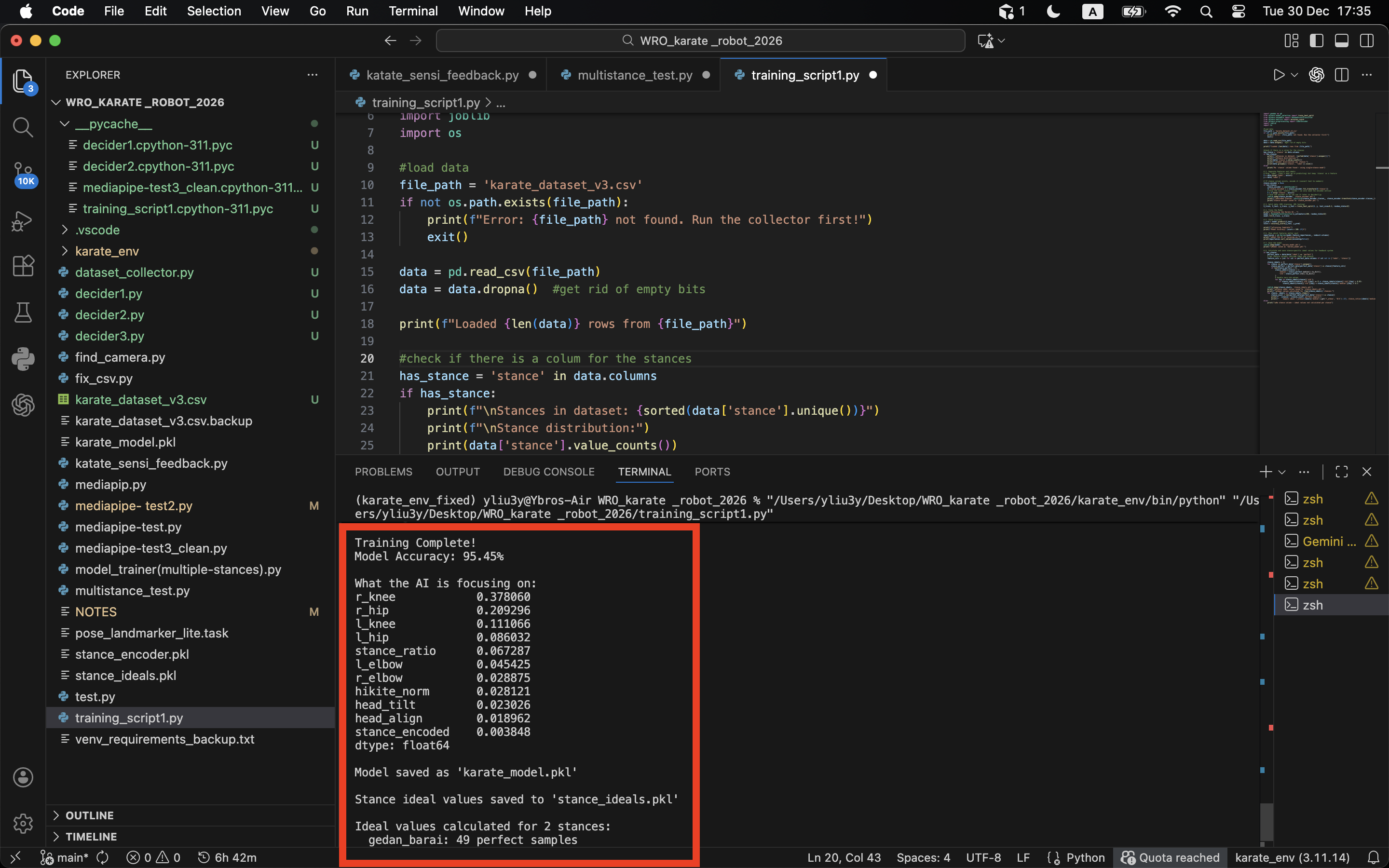

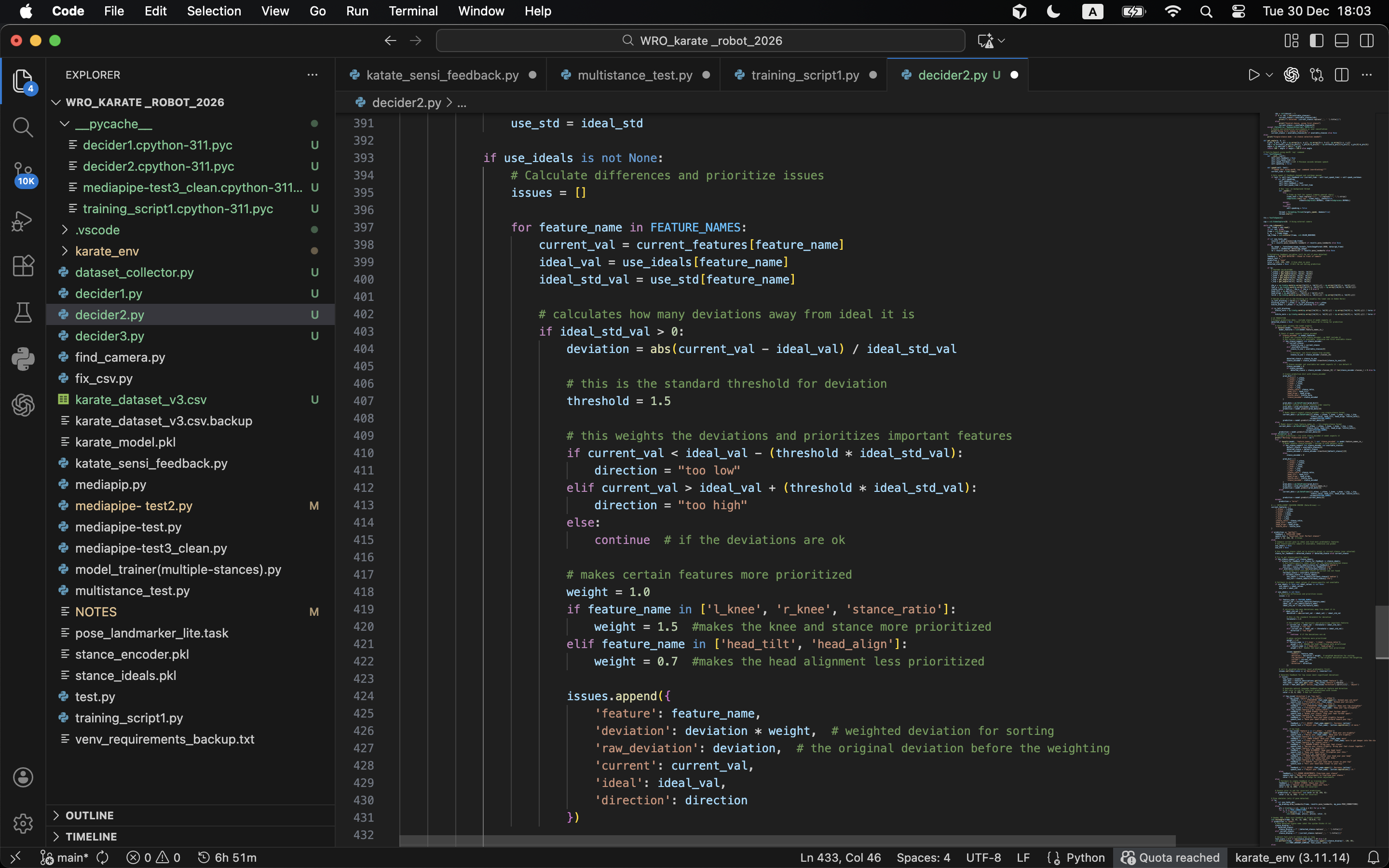

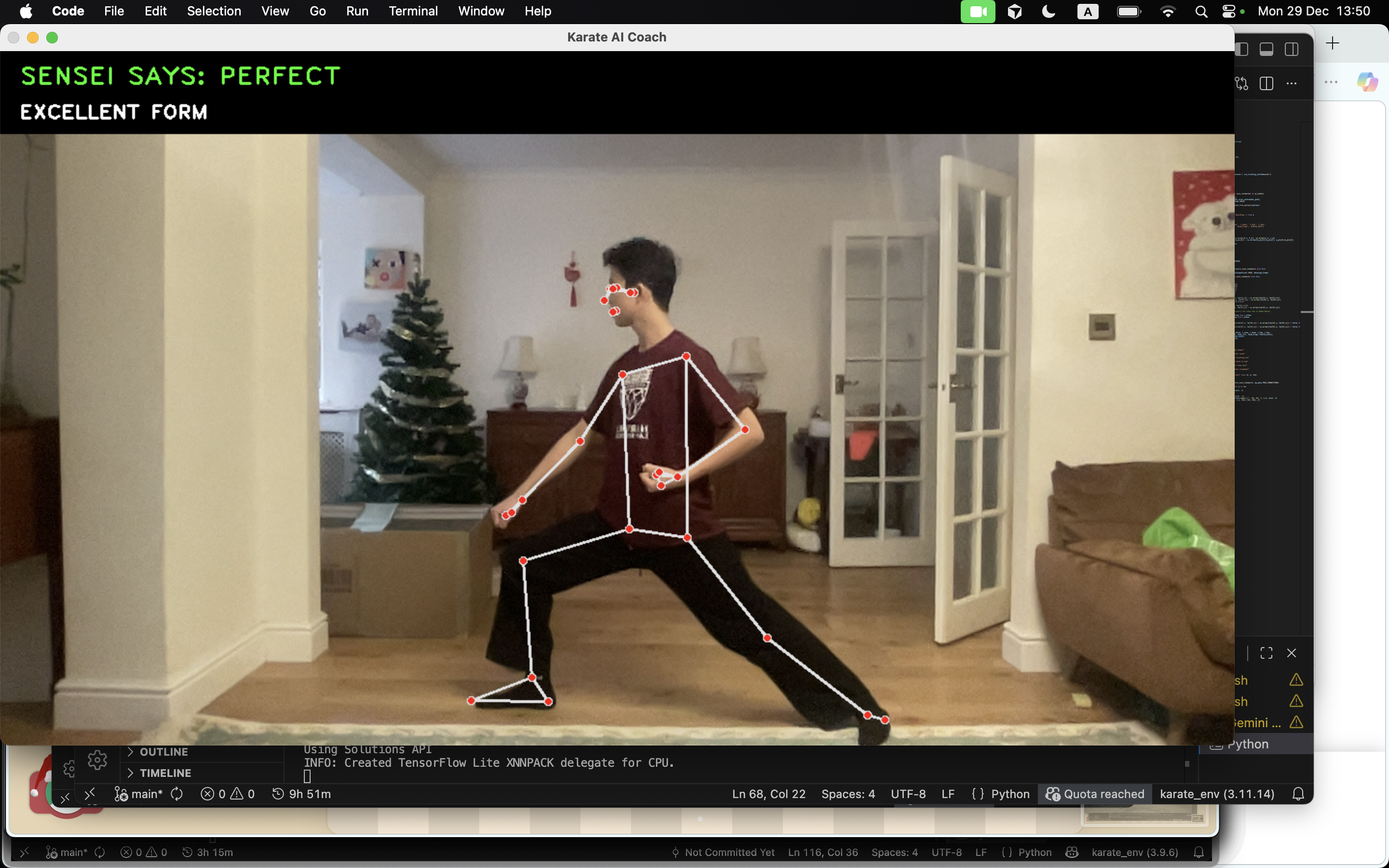

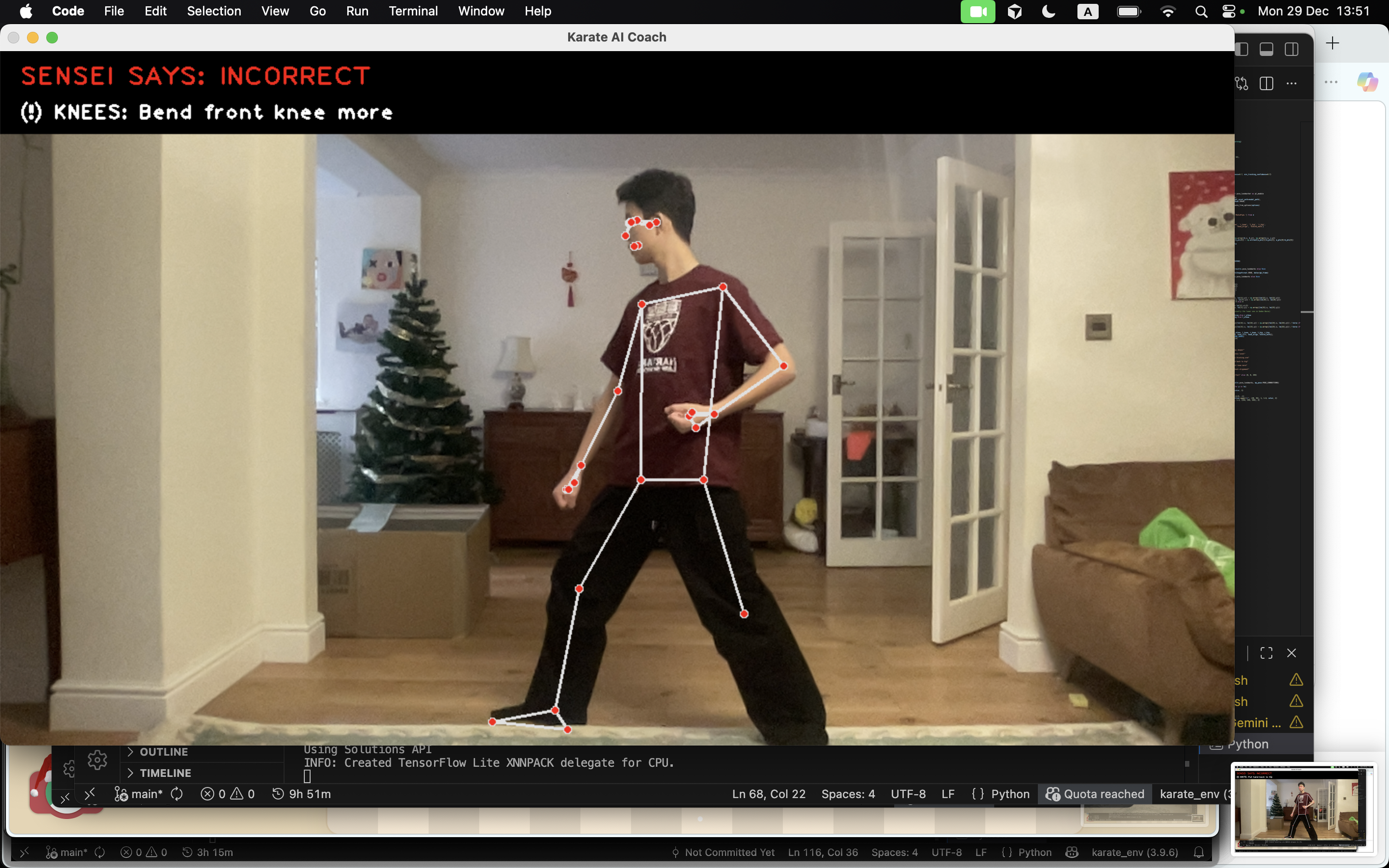

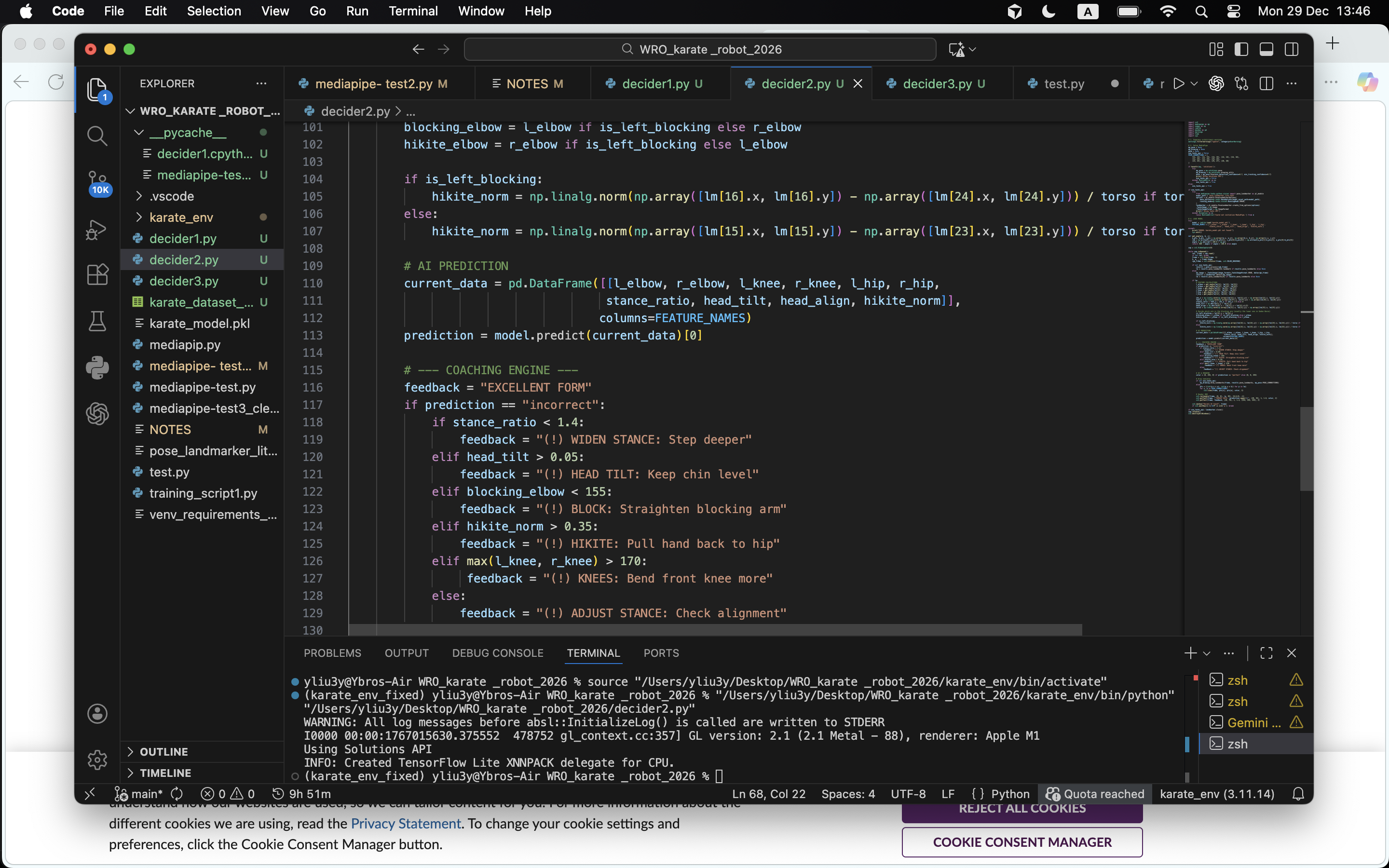

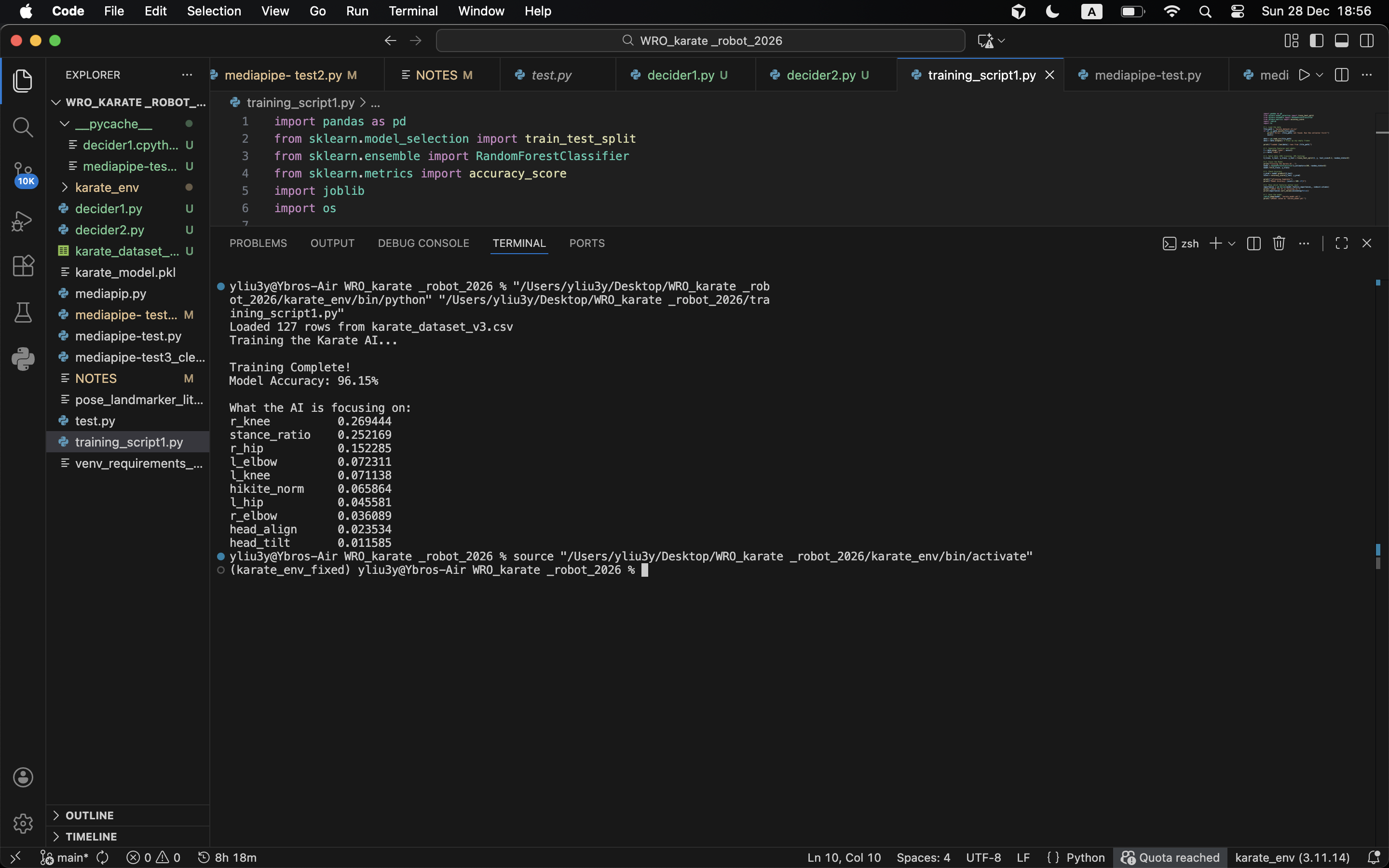

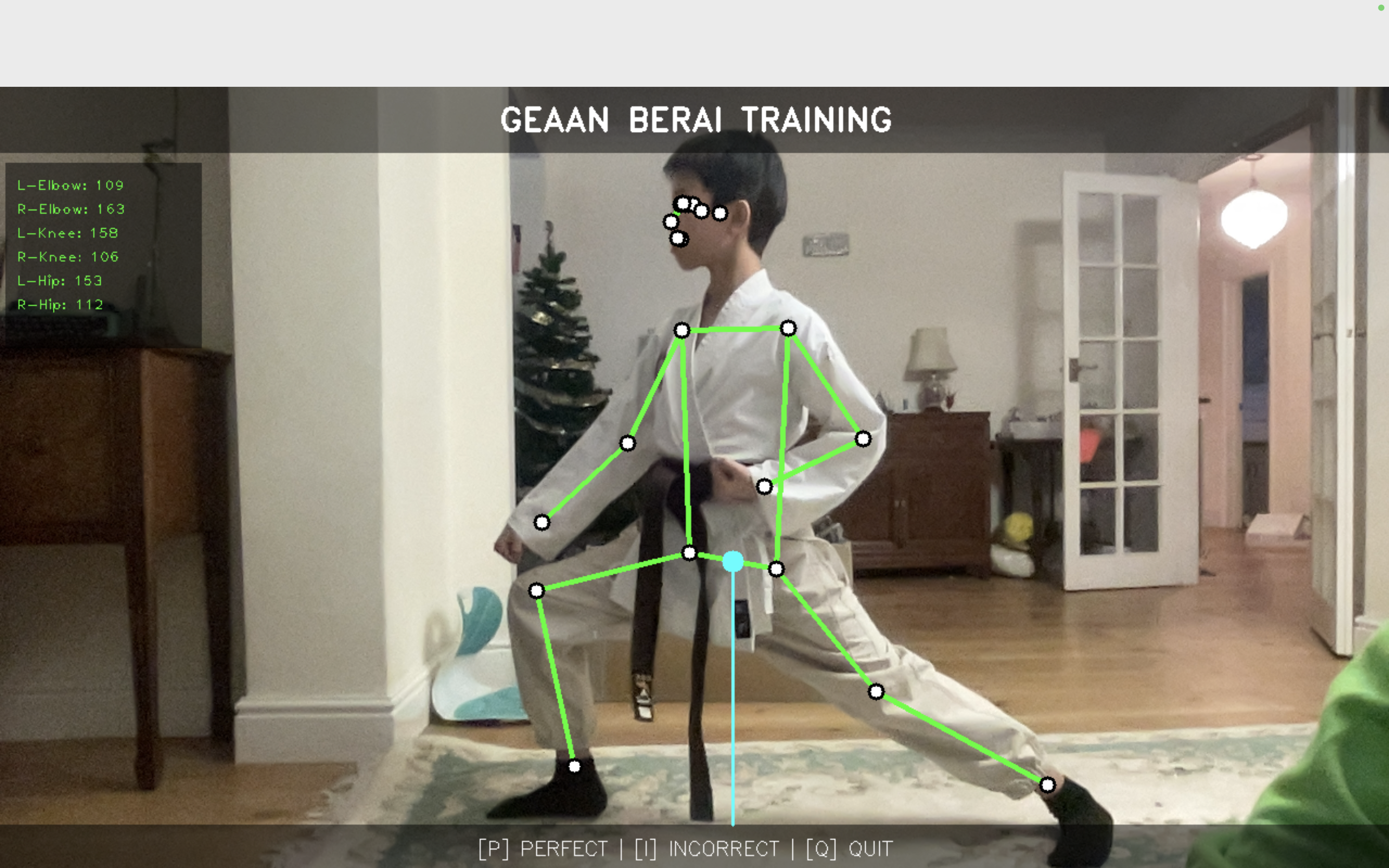

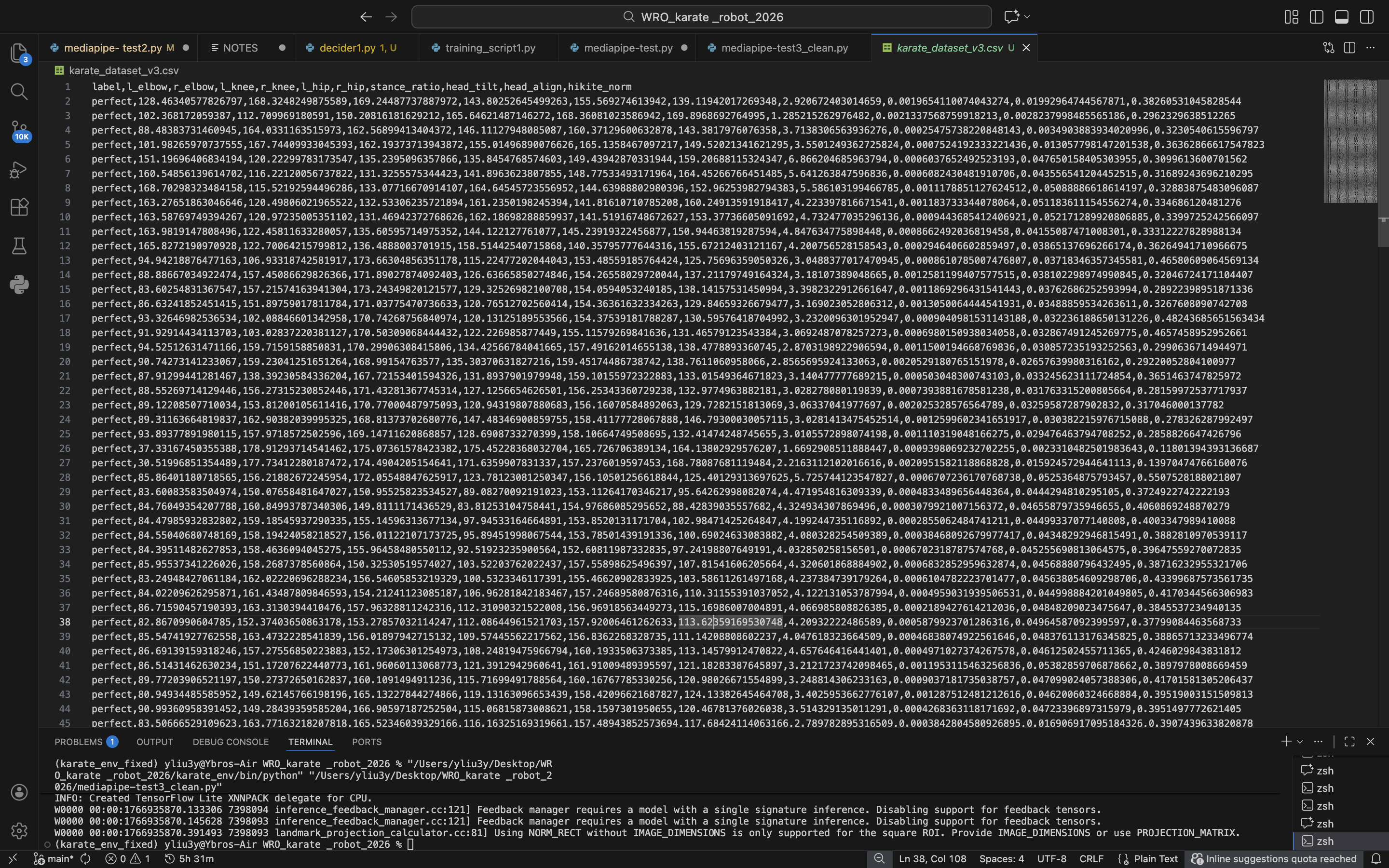

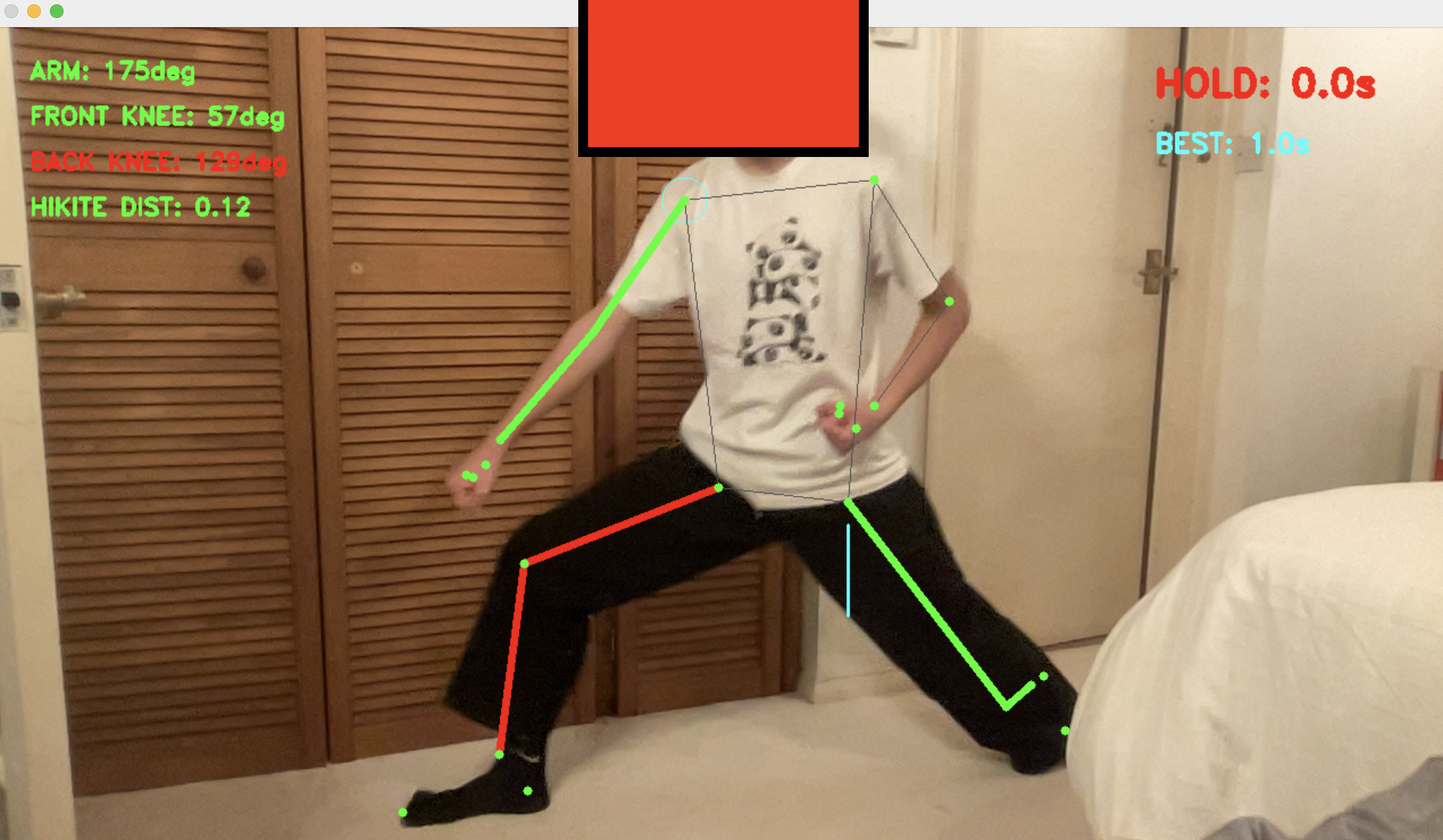

I am working on the inference for my project, using the LSTM model. Currently the model decides which action is being performed and gives a percentage of “correctness”. I am trying to get it working, but at the moment it doesn't work very well. For now I am generating feedback with simple if statements that check whether the joint angles are correct, similar to what I was doing before. However, I am having trouble telling whether the problem is my inference script or the model itself, because it struggles to tell whether the action I'm doing is really a punch or just idle. It will often misinterpret me sitting still during testing as a punch that is 100% correct. Some feedback I got recommended adding a 30-frame buffer at the start, but that didn't really help. I also replaced automatic movement detection with a spacebar press to trigger detection, to make things simpler for me, but that didn't really work either. For now I want to focus on testing whether the fault is the model's or mine, so I will strip everything down to the basics, try a different approach for the inference script, and check whether the model works at all. If it doesn't, I will have to re-code it.
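One possible way to separate "model problem" from "inference script problem" is to skip the live camera entirely and run the model on recorded, already-labelled windows, then look at a confusion matrix. The sketch below is only an illustration under assumptions: `predict_fn` is a hypothetical stand-in for whatever call returns class probabilities from the trained LSTM, the class order (0 = idle, 1 = punch), the 30-frame window length, and the 8 features per frame are all made up for the example, and `always_punch` is a fake model that mimics the failure described above.

```python
import numpy as np

IDLE, PUNCH = 0, 1  # assumed class indices, not taken from the real project


def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Rows are the true class, columns are the predicted class."""
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    for true, predicted in zip(true_labels, predicted_labels):
        matrix[true, predicted] += 1
    return matrix


def evaluate_offline(predict_fn, windows, true_labels, num_classes):
    """Run the model on labelled windows, with no live inference code involved.

    predict_fn: hypothetical callable mapping an array of shape
                (n_windows, n_frames, n_features) to probabilities of shape
                (n_windows, num_classes).
    """
    probabilities = np.asarray(predict_fn(windows))
    predicted = probabilities.argmax(axis=1)
    matrix = confusion_matrix(true_labels, predicted, num_classes)
    # Per-class recall: fraction of each true class that was predicted correctly.
    row_totals = np.maximum(matrix.sum(axis=1), 1)  # avoid division by zero
    recall = matrix.diagonal() / row_totals
    return matrix, recall


def always_punch(windows):
    """Fake model that says 'punch, 100% confident' for every window."""
    return np.tile([0.0, 1.0], (len(windows), 1))


if __name__ == "__main__":
    # Placeholder data: 2 idle windows and 2 punch windows of 30 frames each.
    windows = np.zeros((4, 30, 8))
    labels = [IDLE, IDLE, PUNCH, PUNCH]
    matrix, recall = evaluate_offline(always_punch, windows, labels, 2)
    print(matrix)
    print(recall)
```

If the real model, fed recorded idle clips this way, still puts most of them in the punch column, the model (or its training data) is the likely culprit. If the offline numbers look fine, the problem is more likely in how the live script builds, orders, or normalises its windows before calling the model.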
