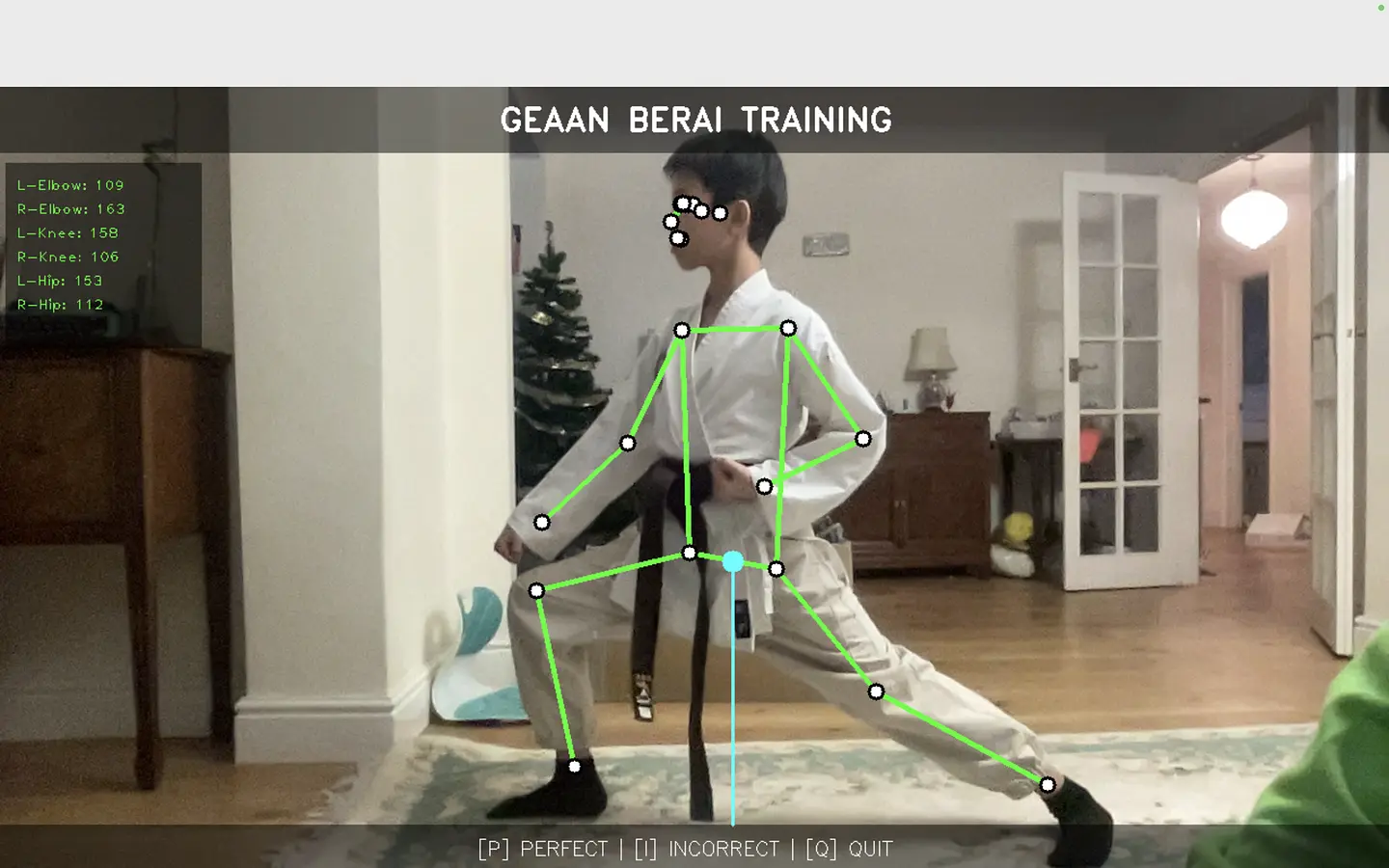

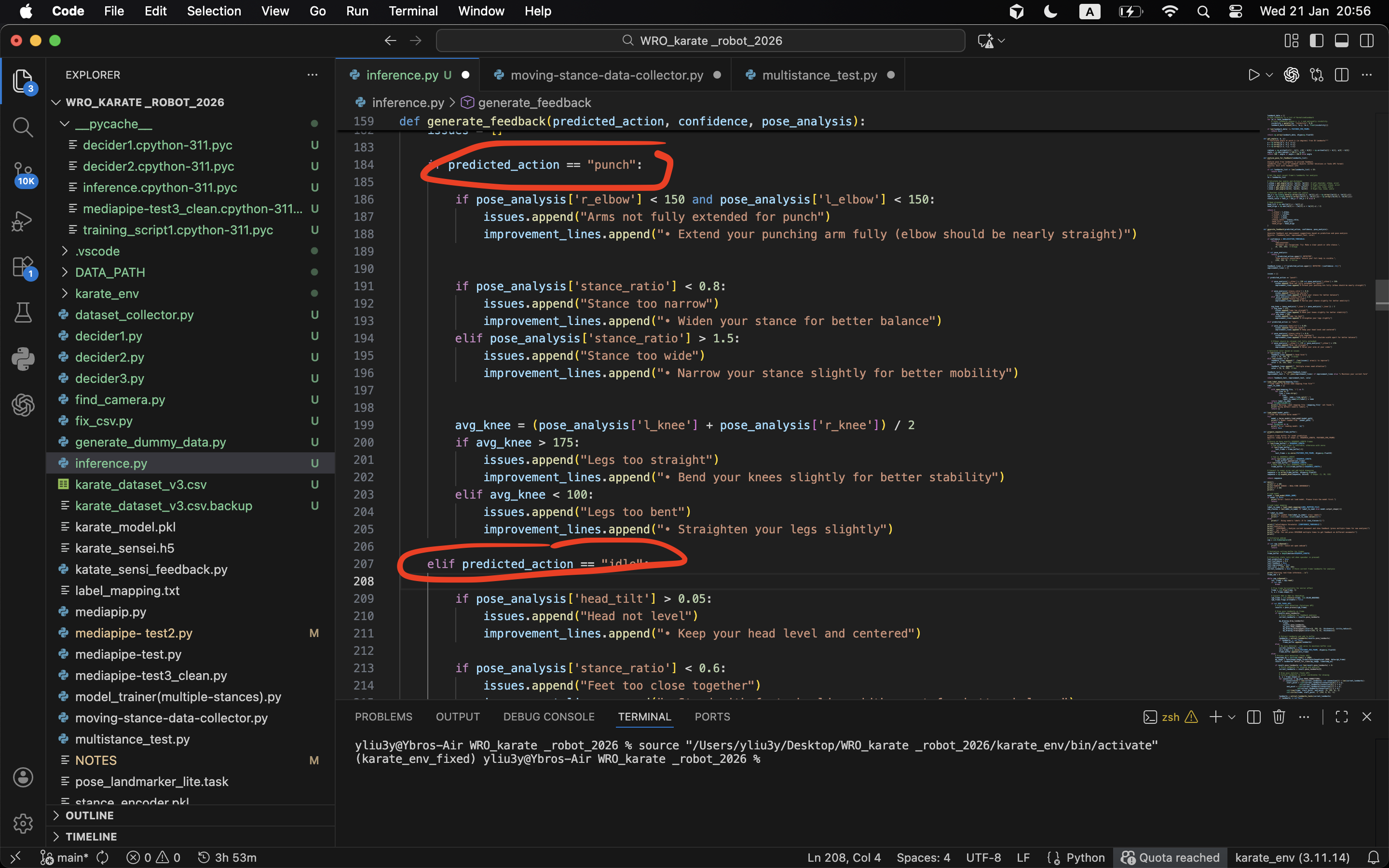

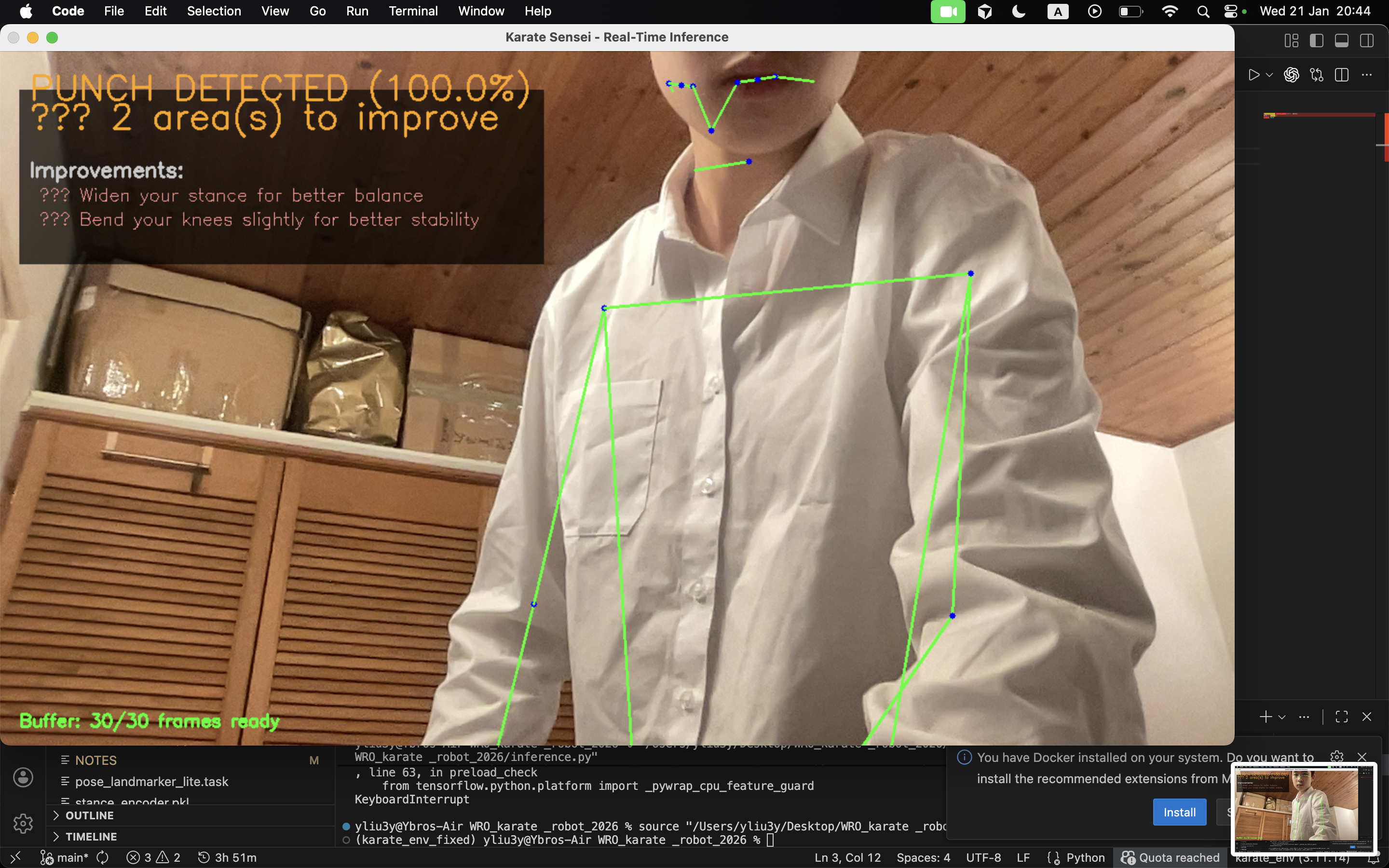

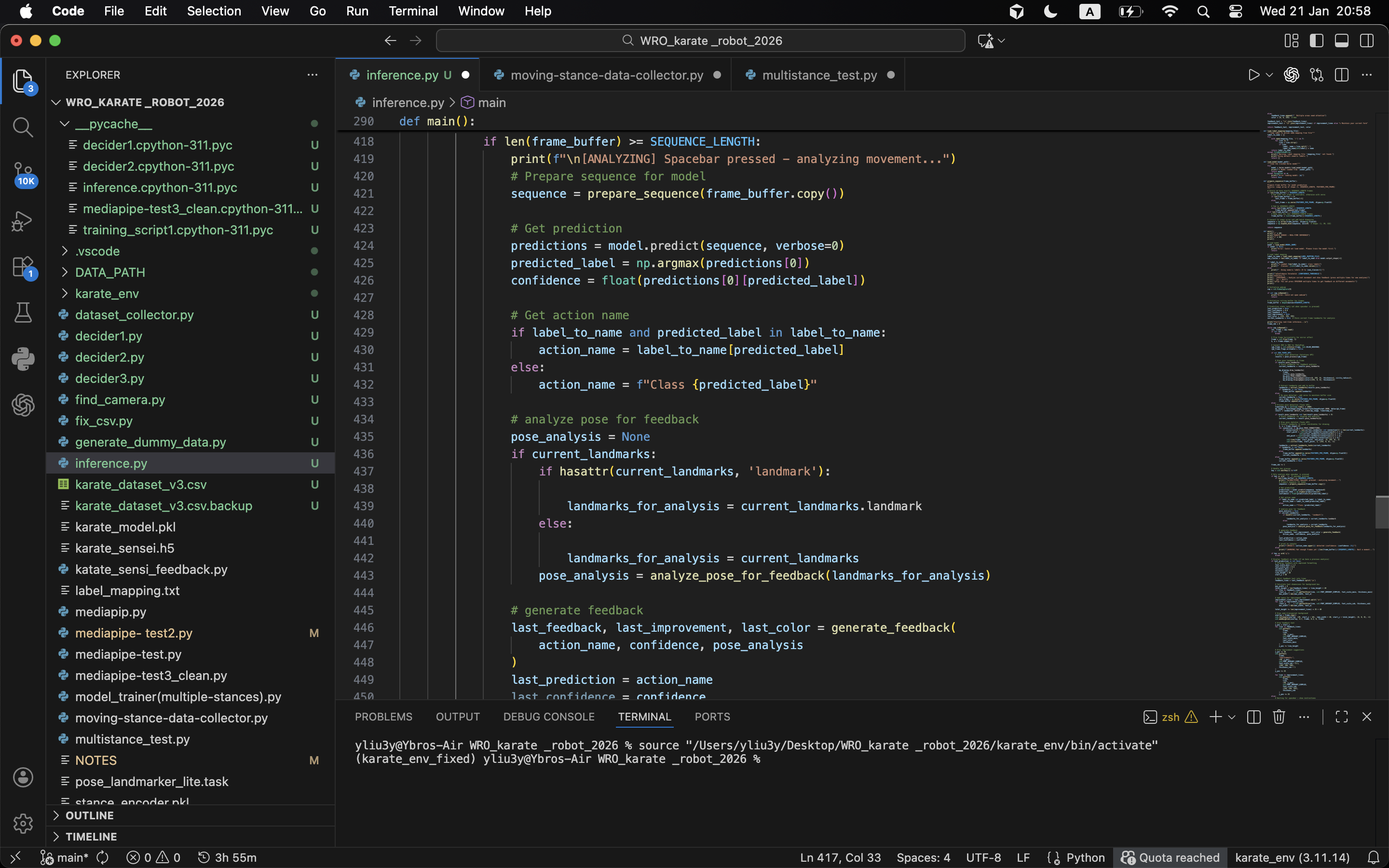

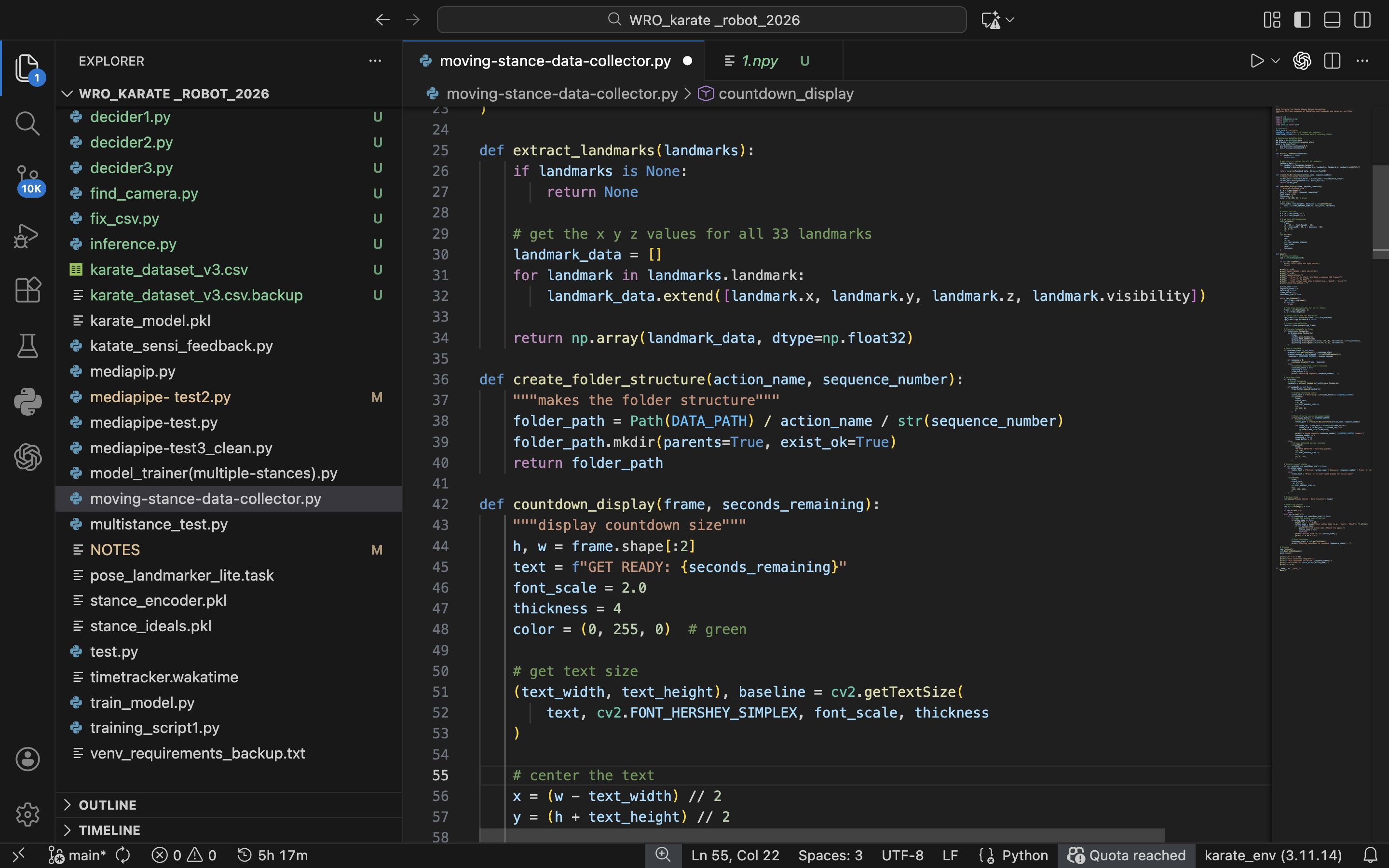

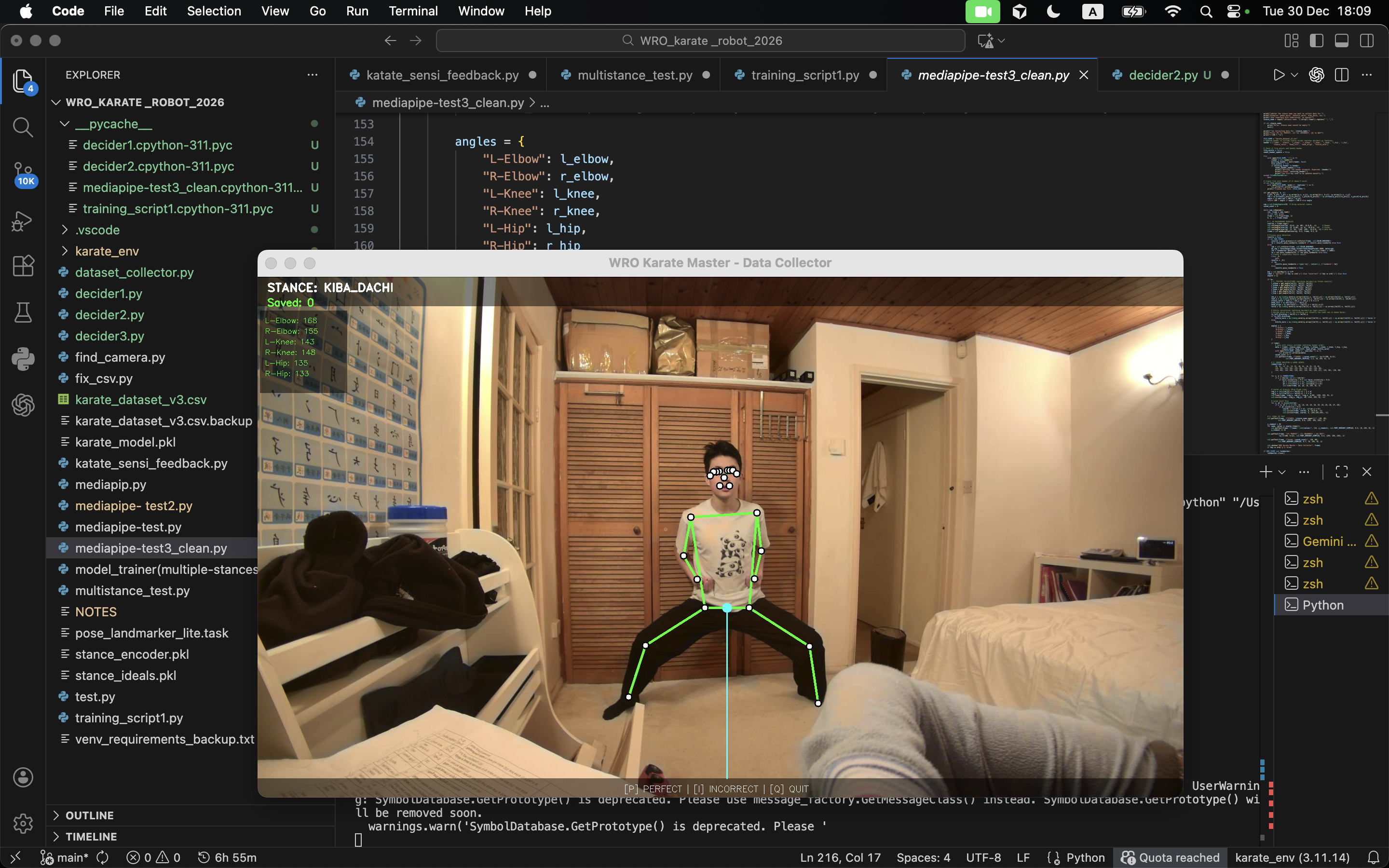

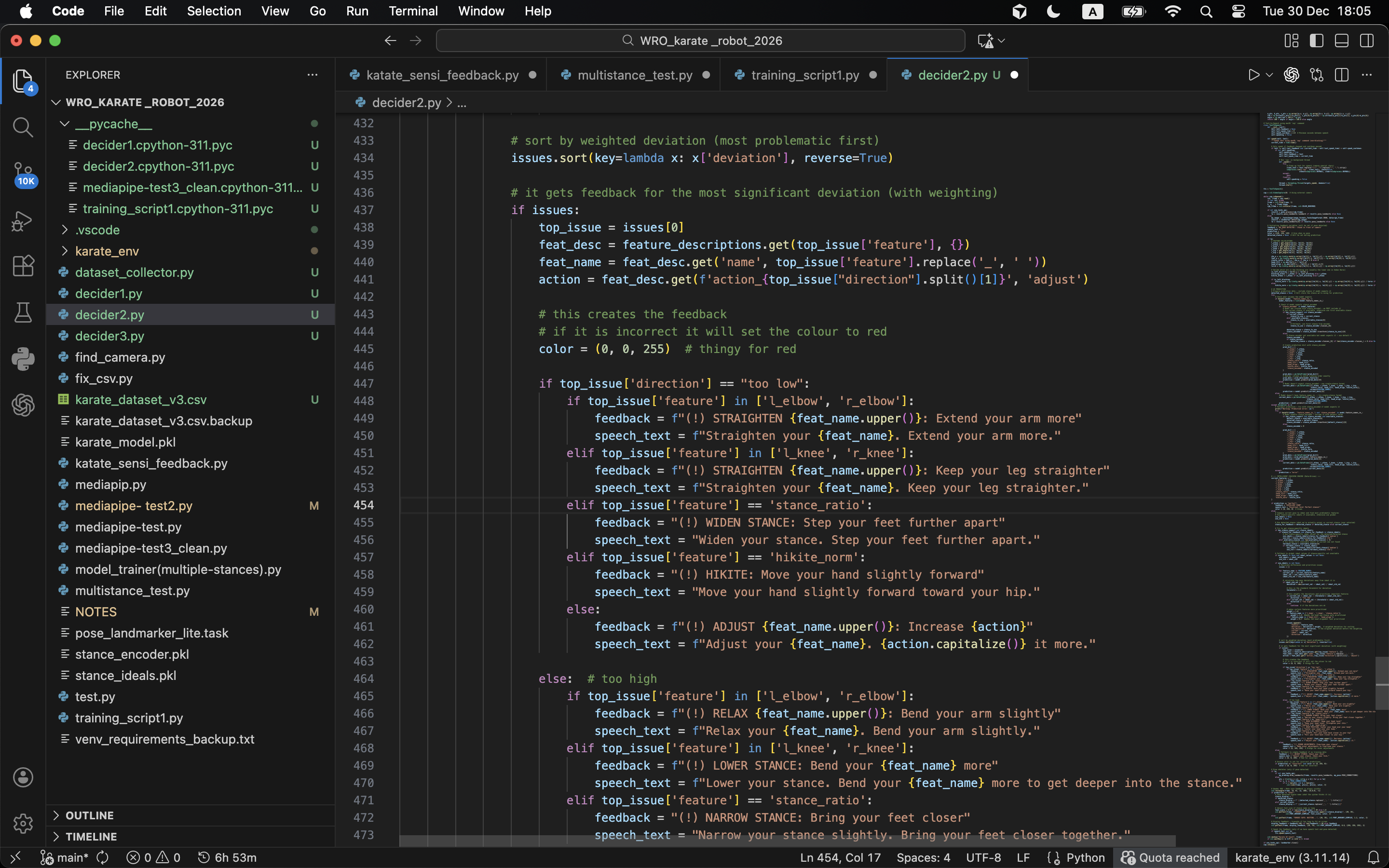

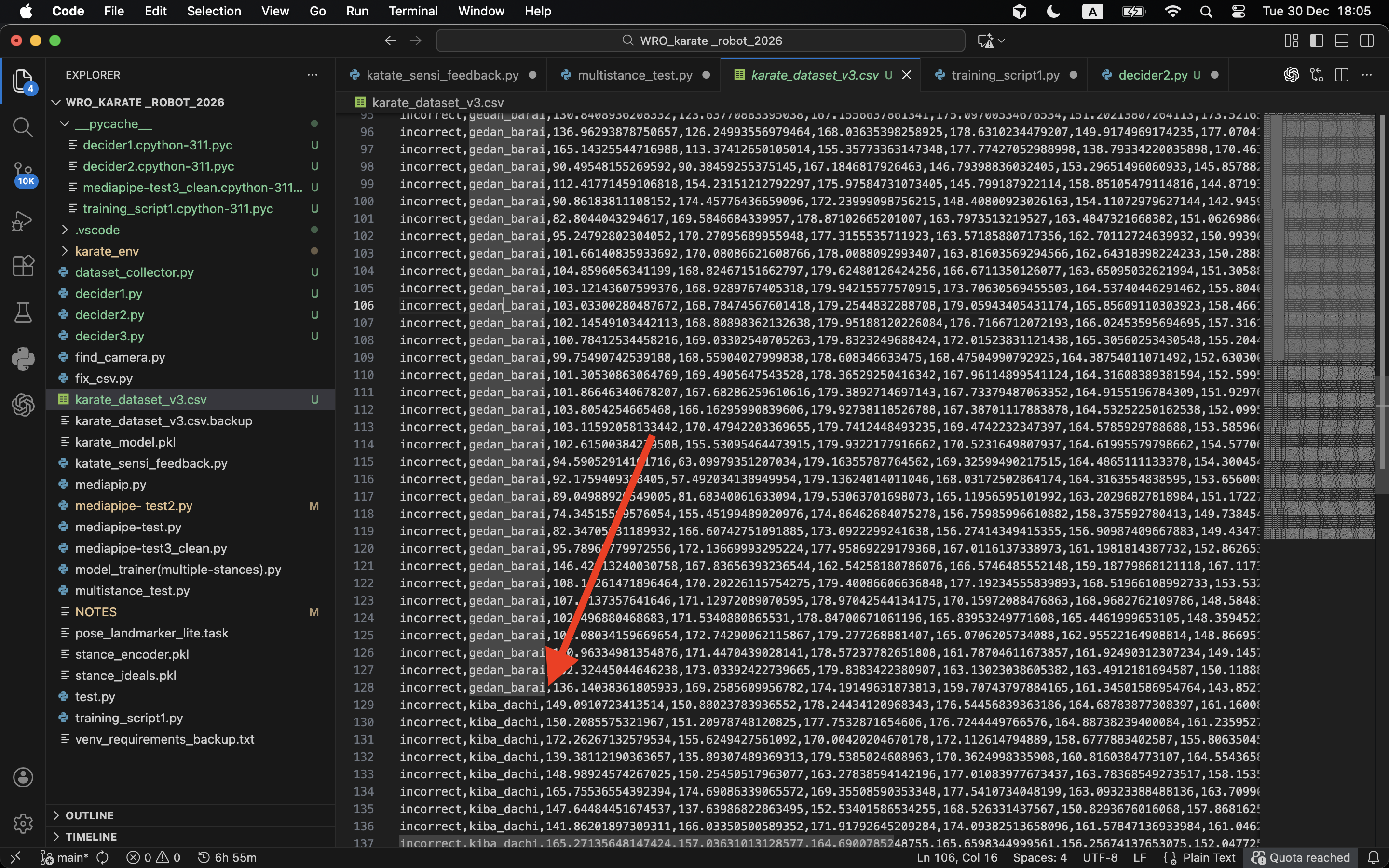

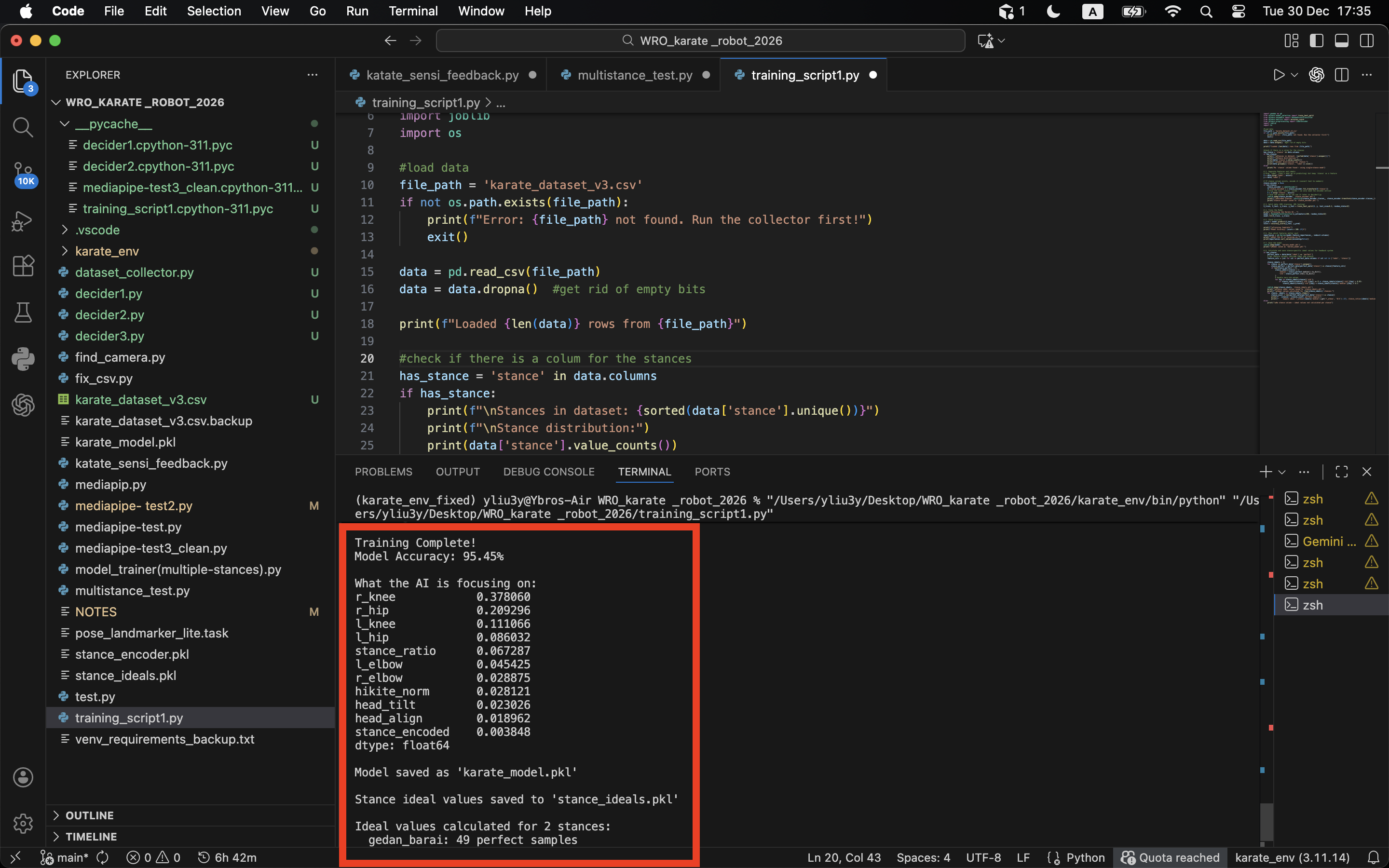

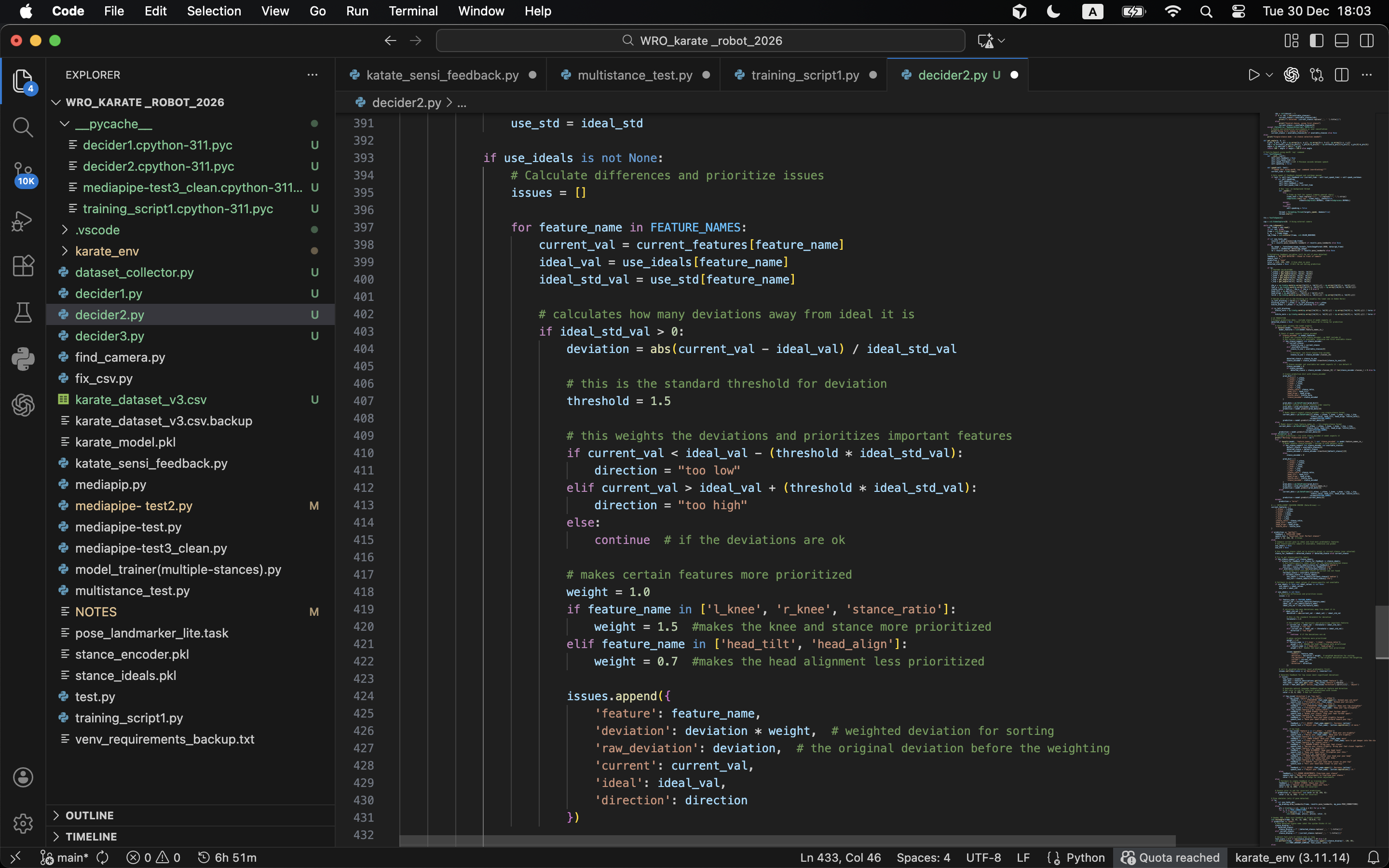

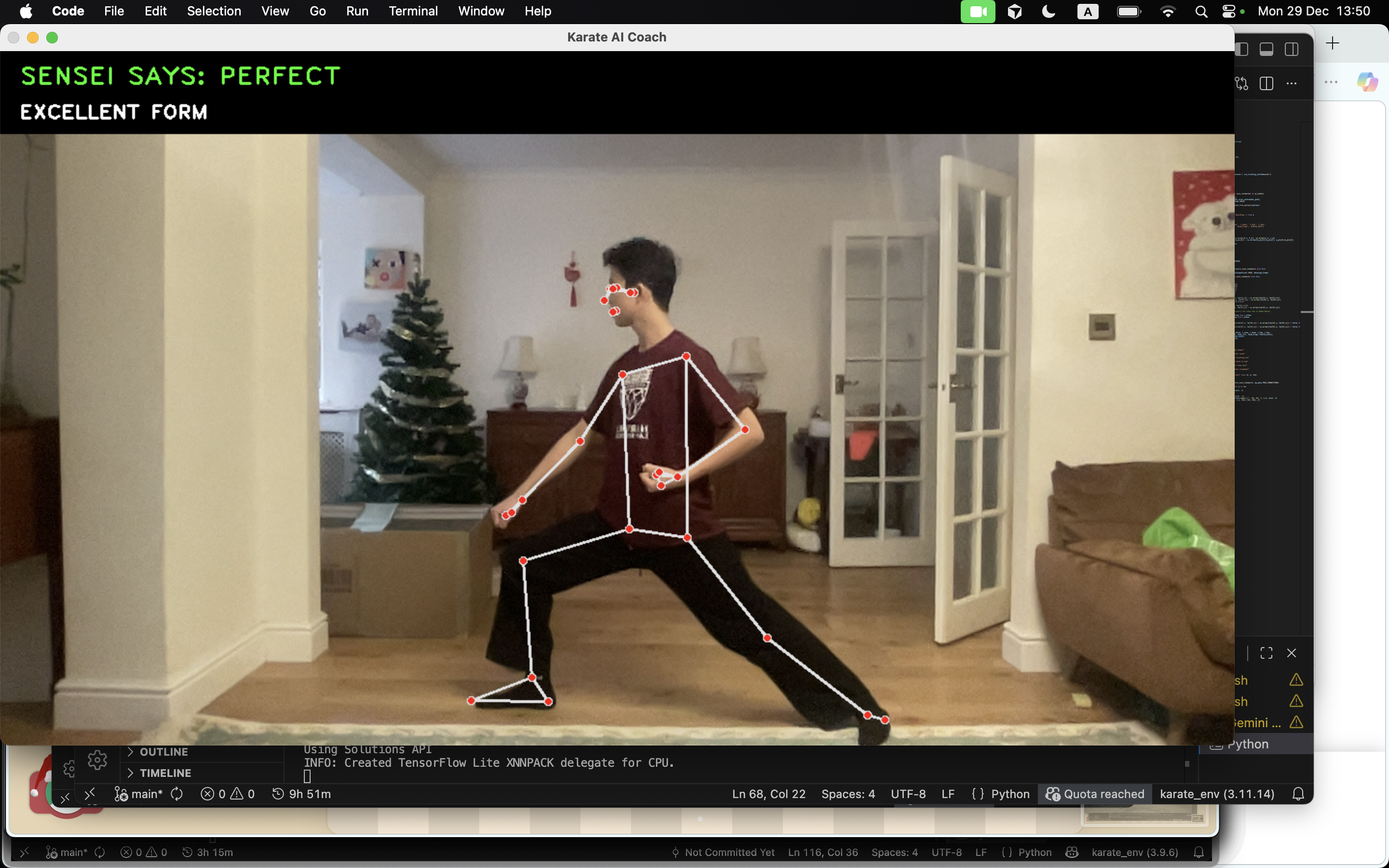

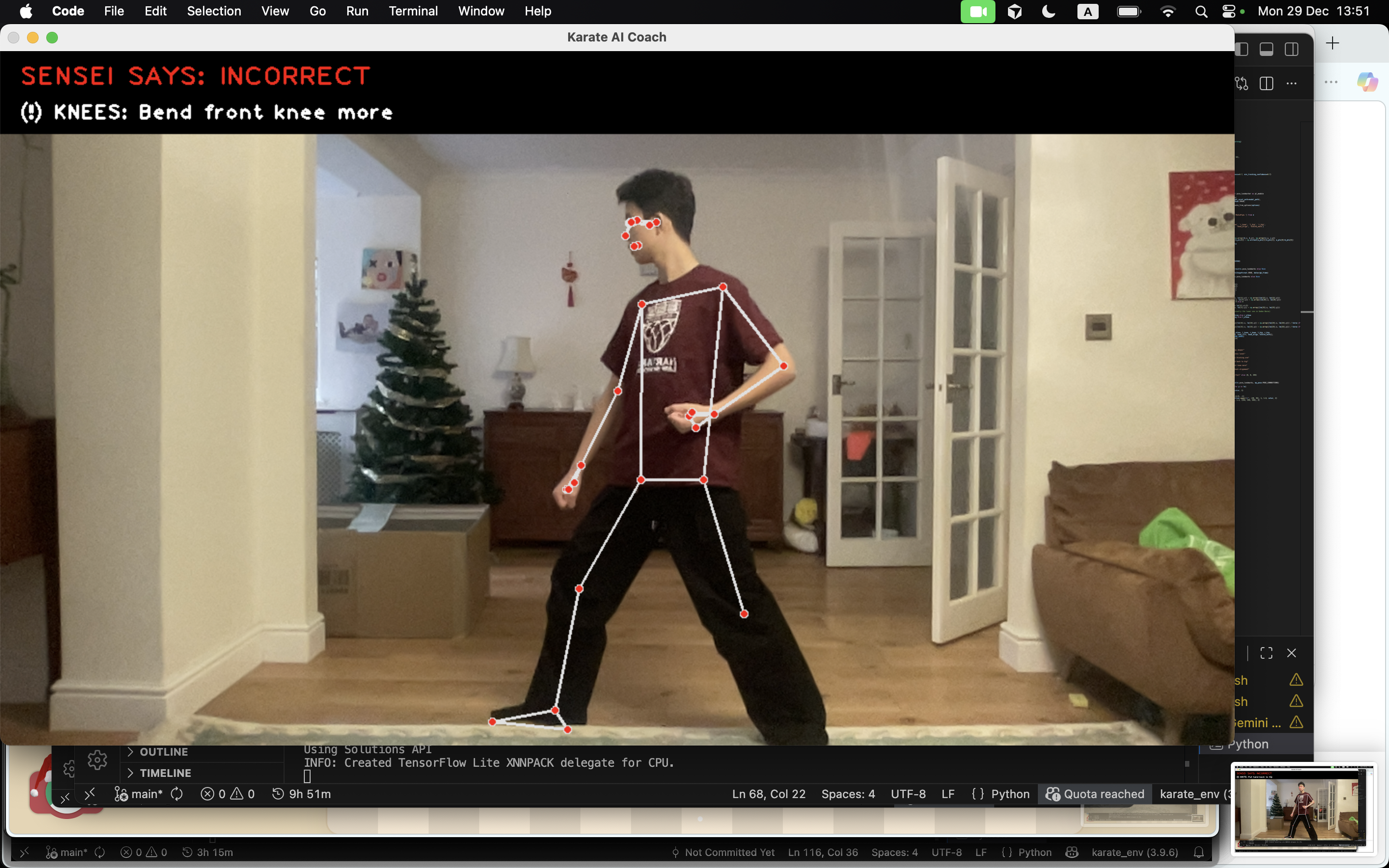

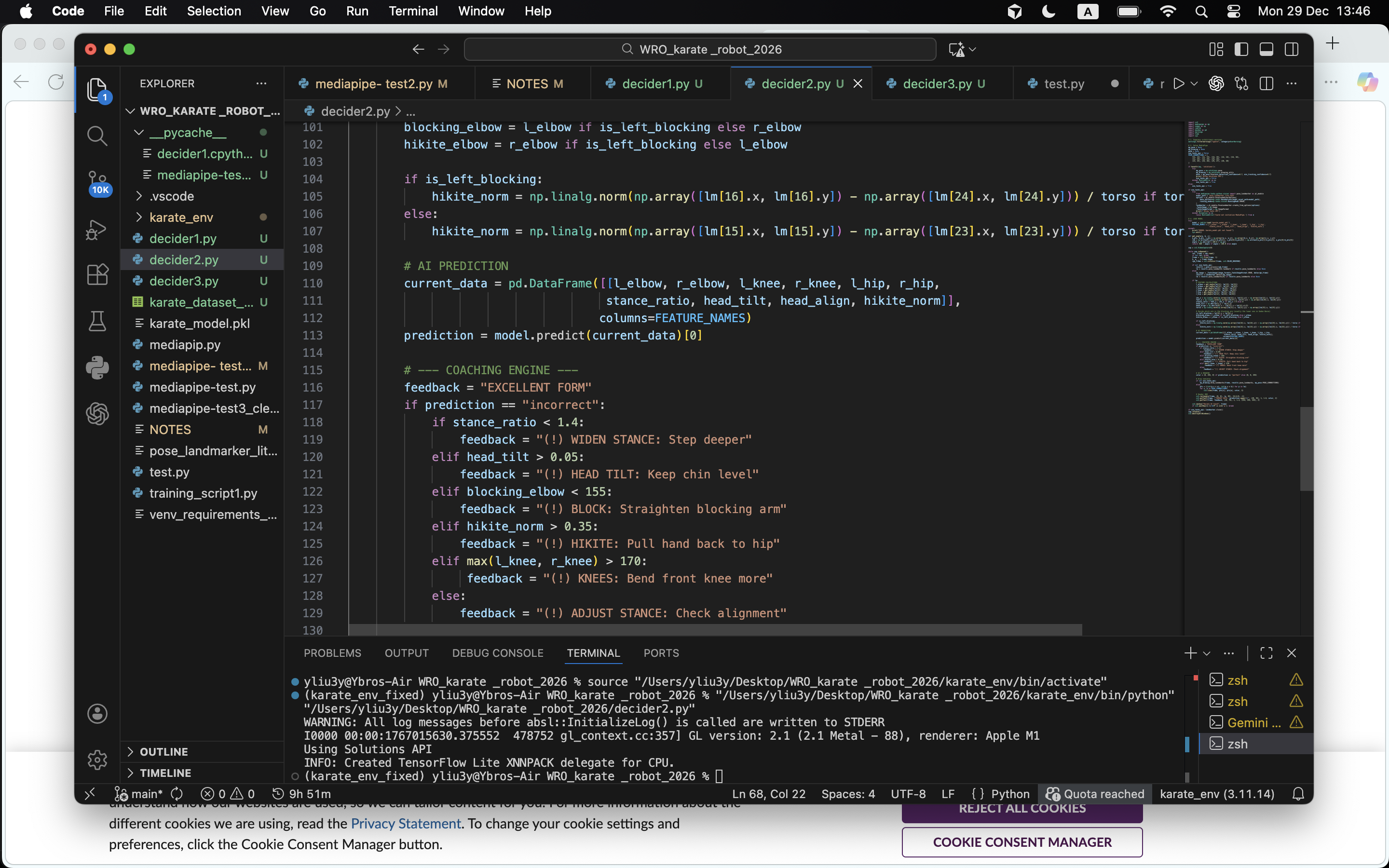

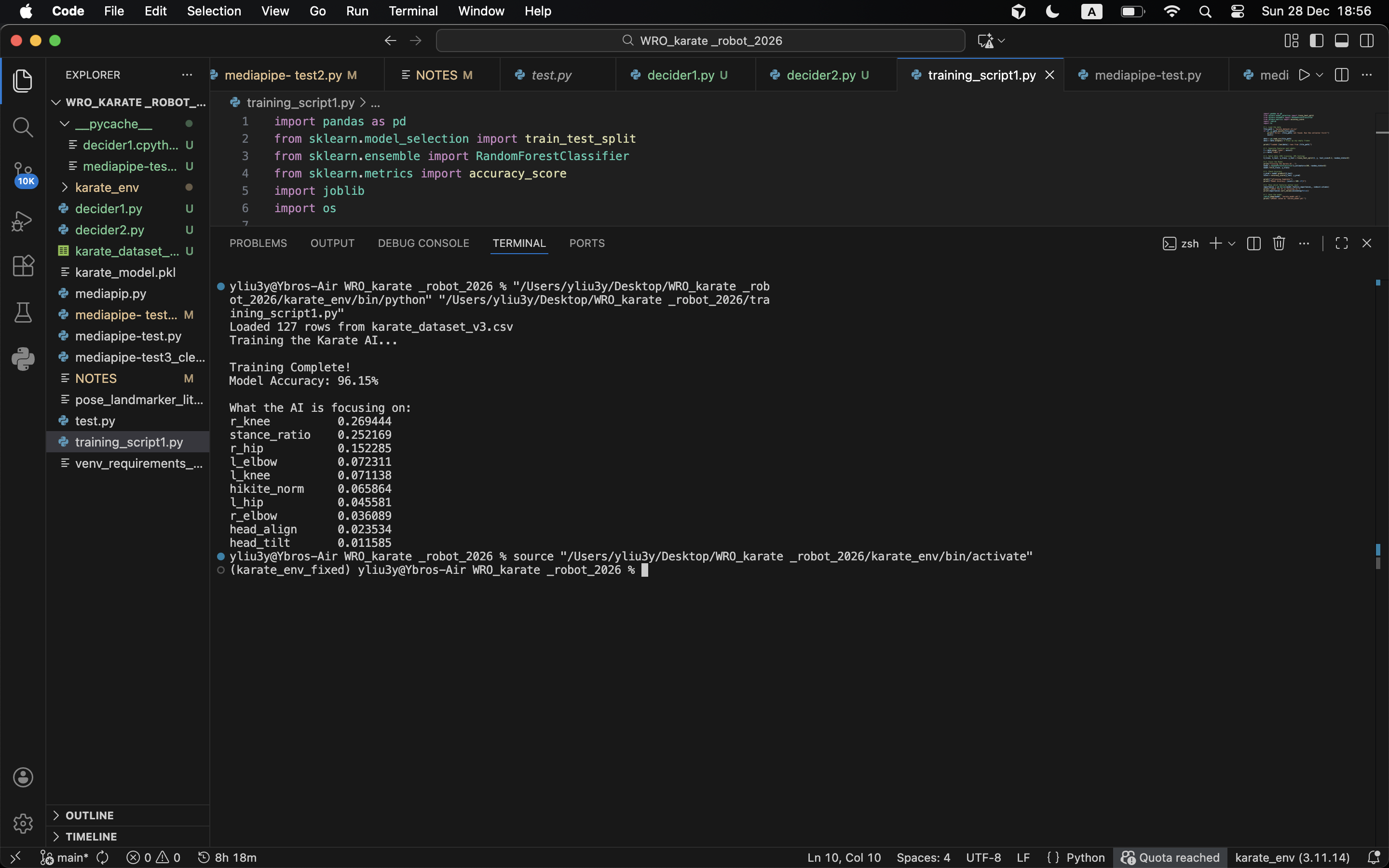

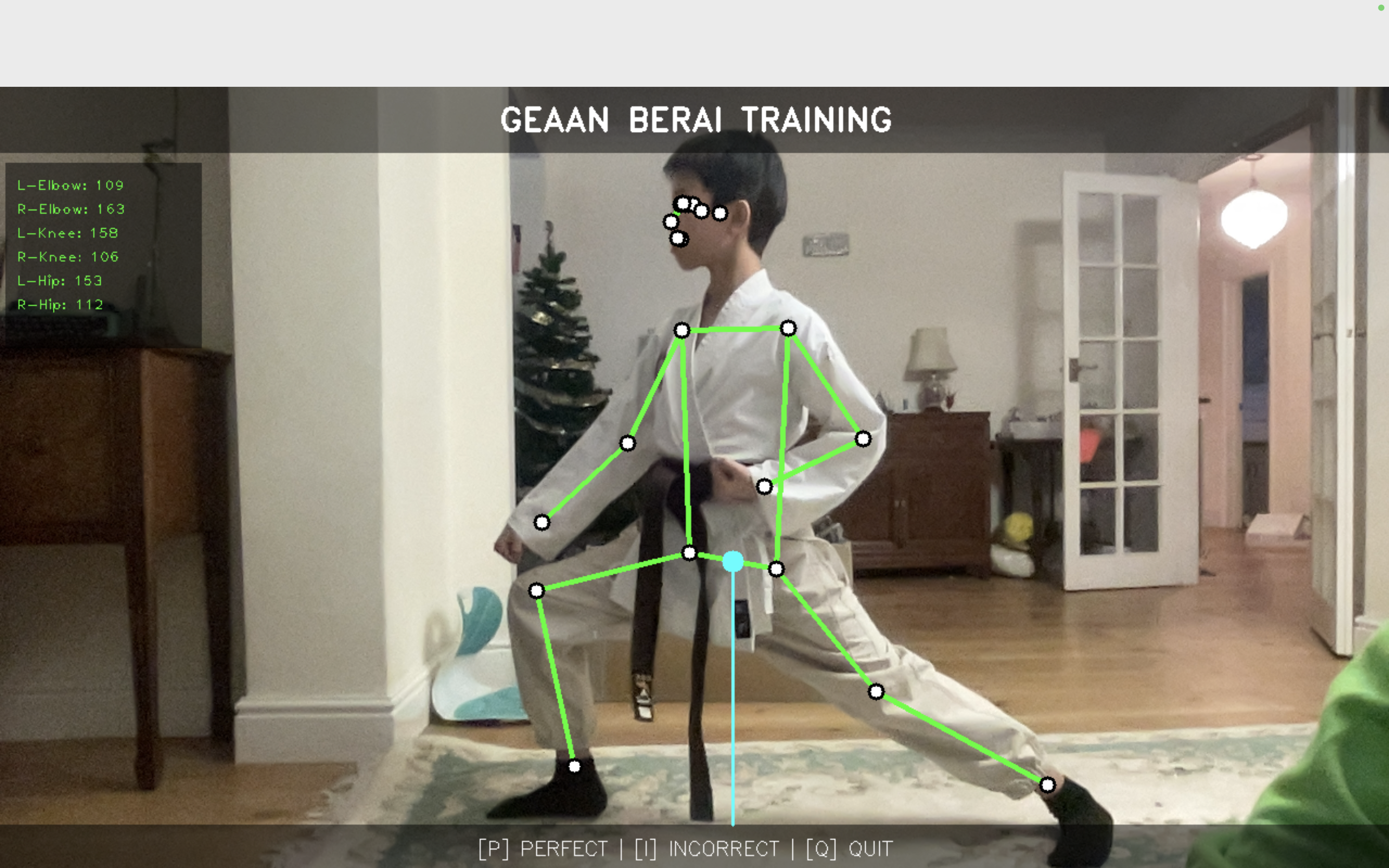

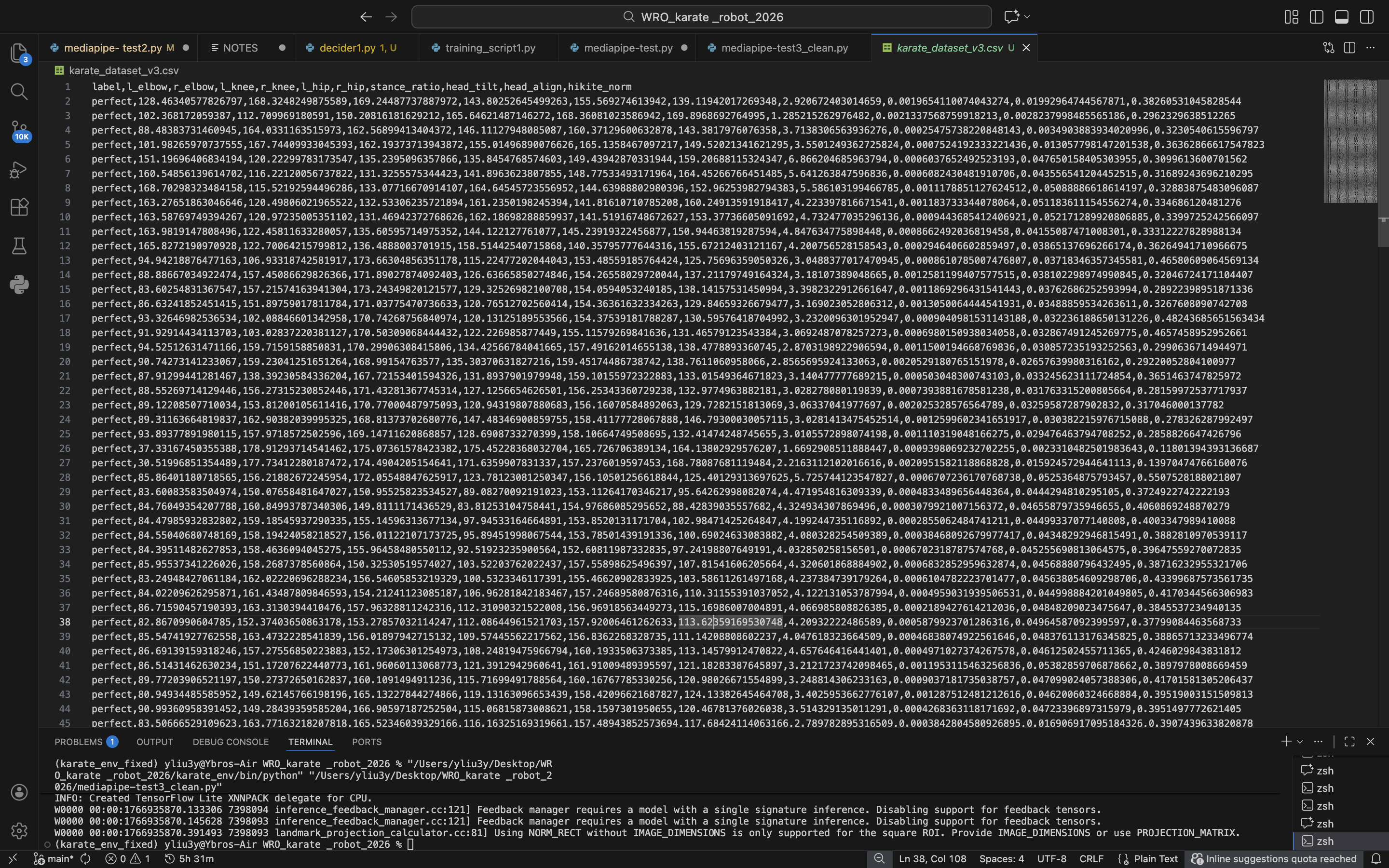

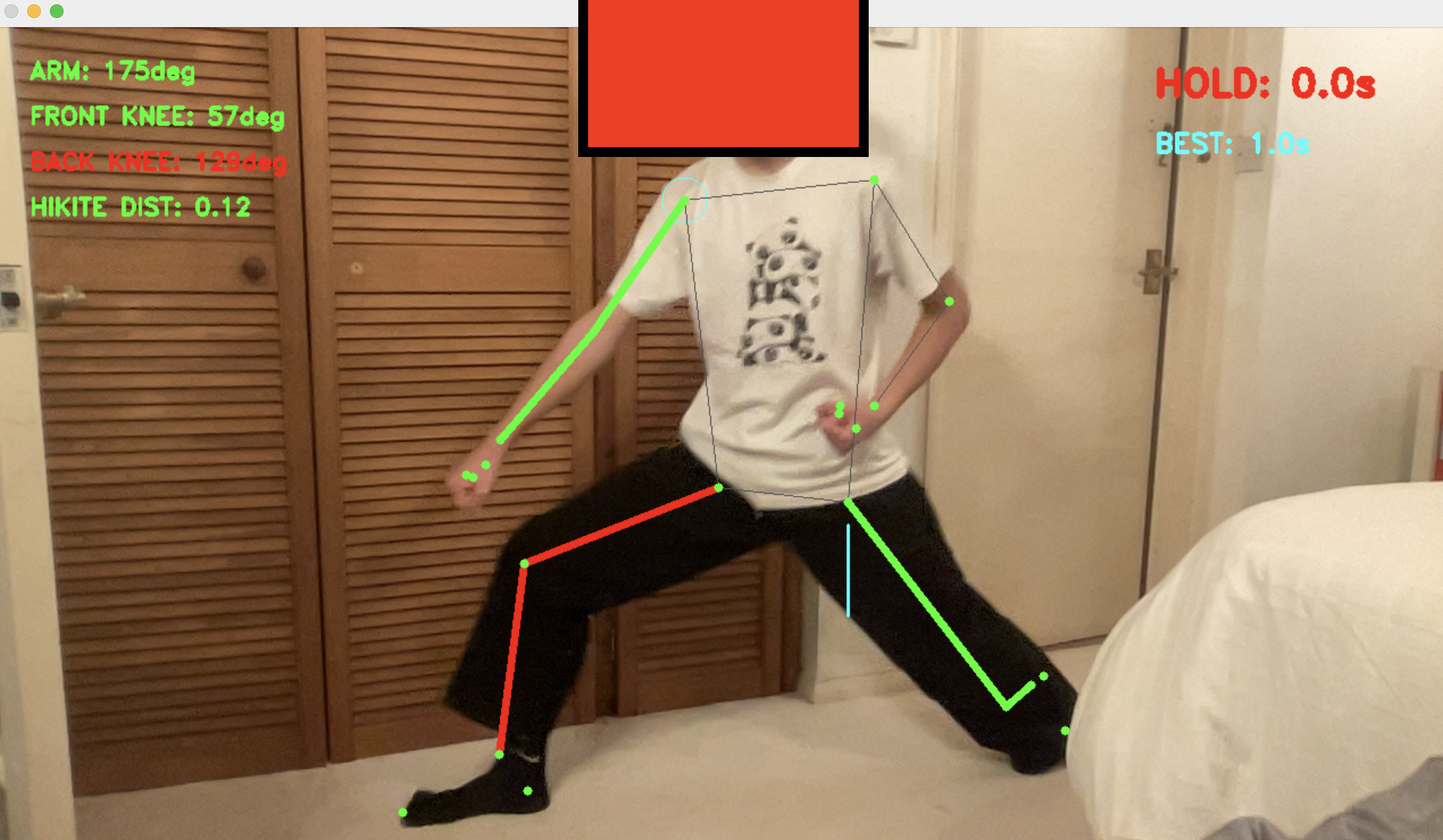

This project is a karate AI that tracks your movements using MediaPipe. By looking at the positioning of your body and the angles of your arms and legs, it can judge how good your stance and positioning are, then correct you and help you improve.
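To make the angle idea concrete, here is a minimal sketch of how a joint angle could be computed from three tracked points and turned into a correction. It is not the project's actual code: the function names `joint_angle` and `angle_feedback`, the 100-degree target, and the 15-degree tolerance are all assumptions for illustration. The points are plain `(x, y)` pairs, such as the hip, knee, and ankle landmarks a pose tracker reports.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, formed by segments b->a and b->c.

    a, b, c are (x, y) pairs, e.g. hip, knee, ankle for a knee angle.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("joint_angle needs three distinct points")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


def angle_feedback(joint, measured, target, tolerance=15.0):
    """Compare a measured angle with a target angle (hypothetical values)."""
    diff = measured - target
    if abs(diff) <= tolerance:
        return f"{joint}: OK"
    # A larger angle than the target means the joint is too straight.
    action = "bend more" if diff > 0 else "straighten"
    return f"{joint}: {action} by about {abs(diff):.0f} degrees"


# Example with made-up coordinates: hip above knee, ankle out to the side.
hip, knee, ankle = (0.0, 1.0), (0.0, 0.0), (1.0, 0.0)
knee_angle = joint_angle(hip, knee, ankle)
print(angle_feedback("front knee", knee_angle, target=100.0))
```

If the points come from normalized image coordinates, it is probably worth scaling x and y by the frame width and height first, since otherwise angles get distorted on non-square frames.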

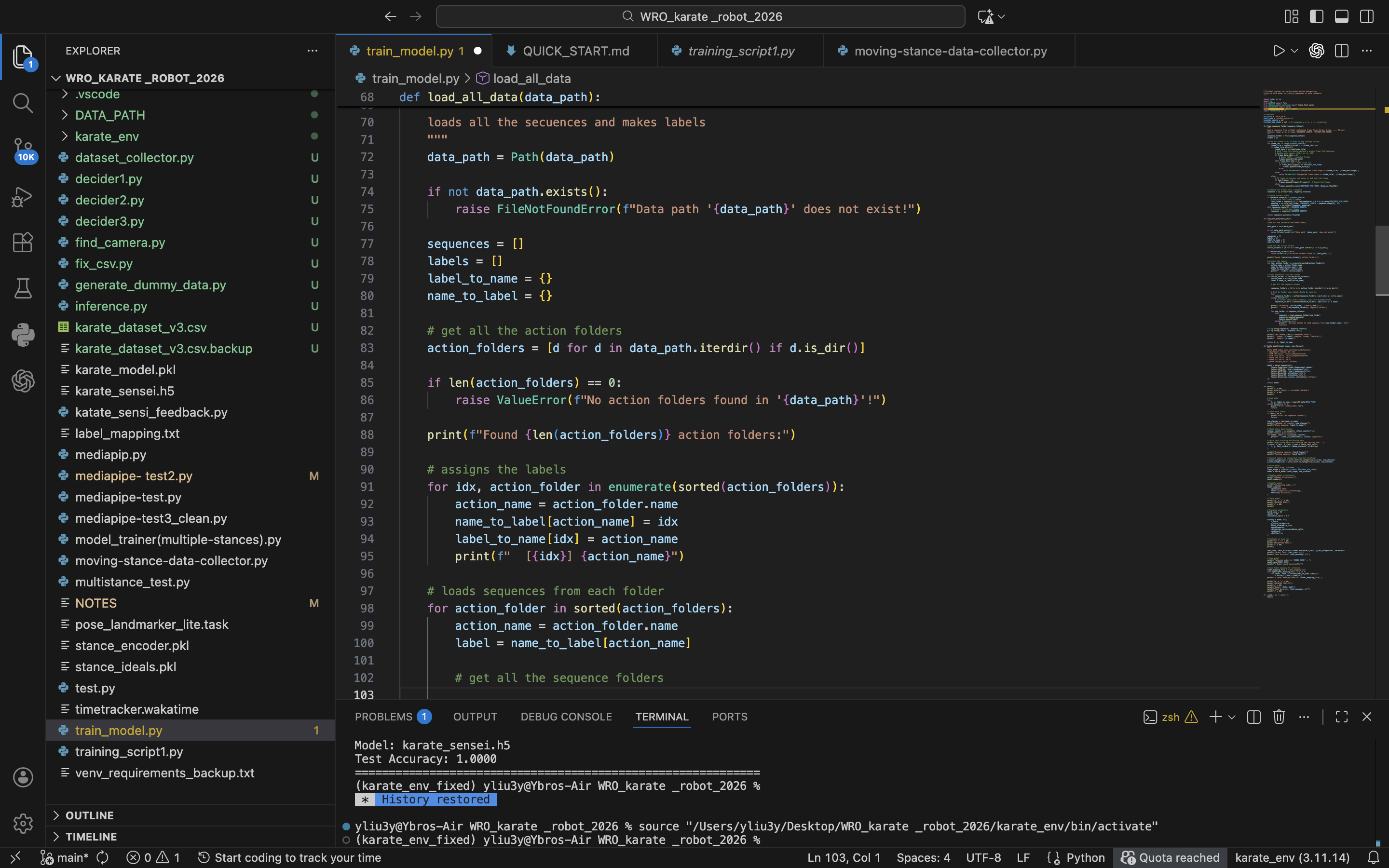

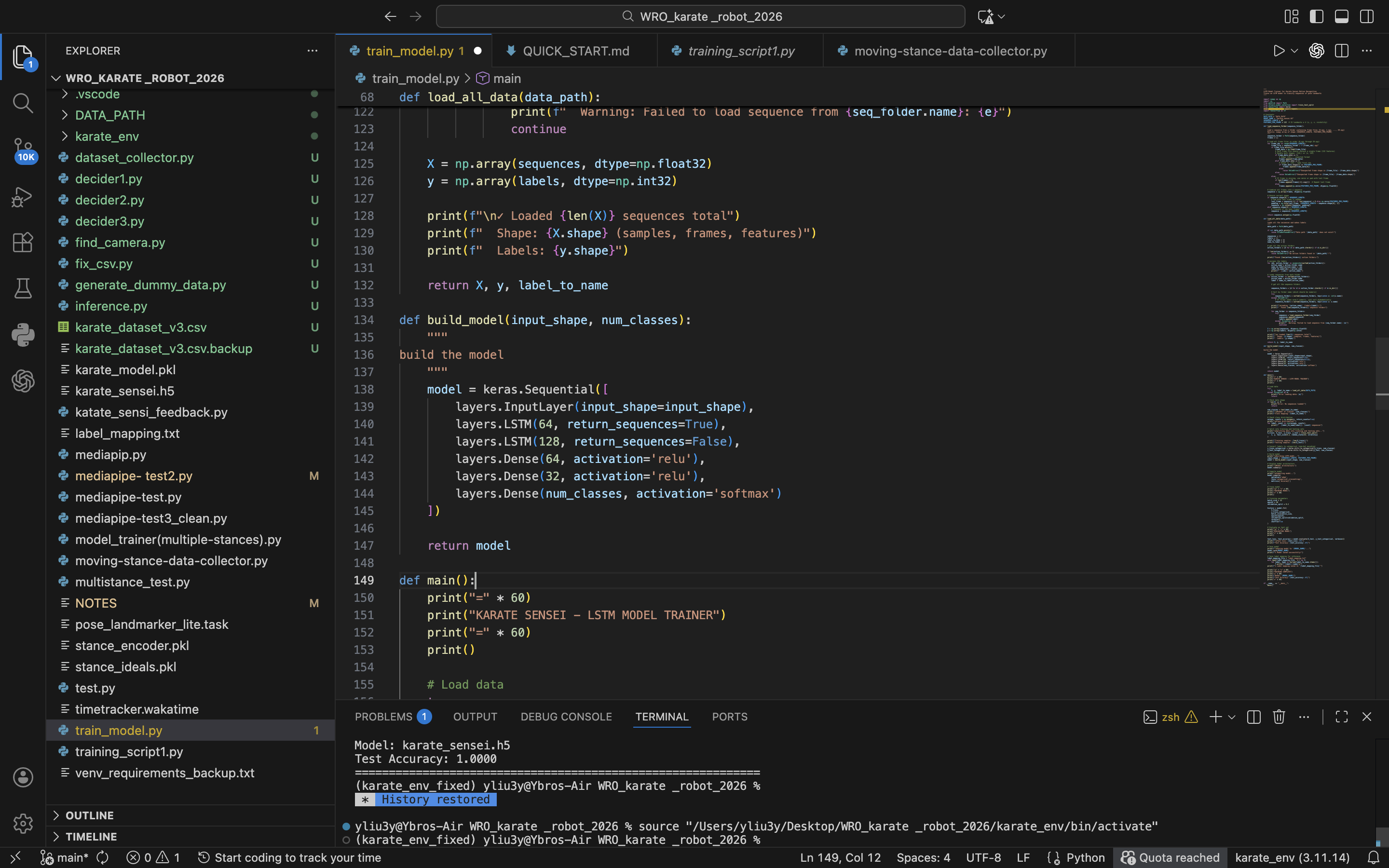

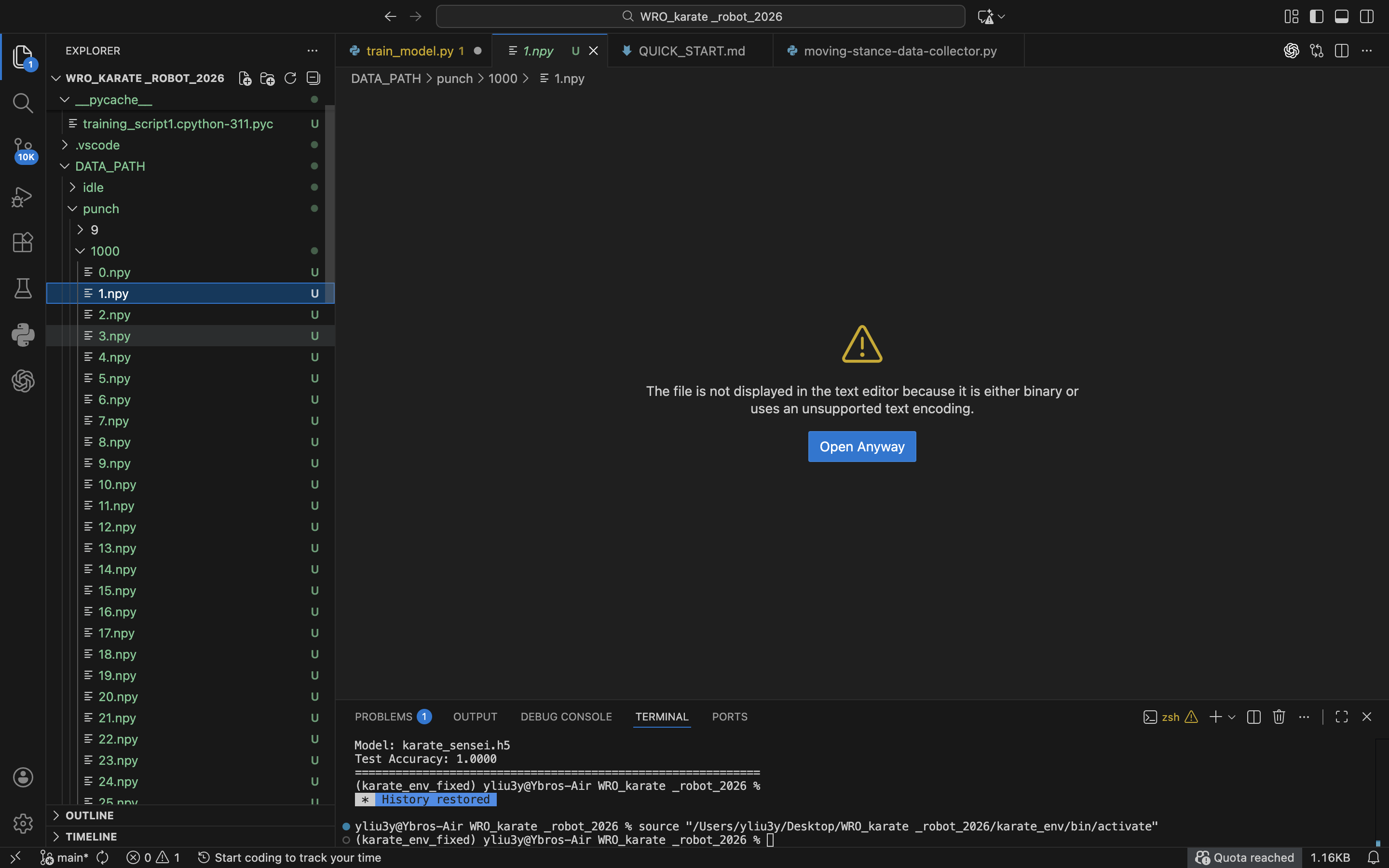

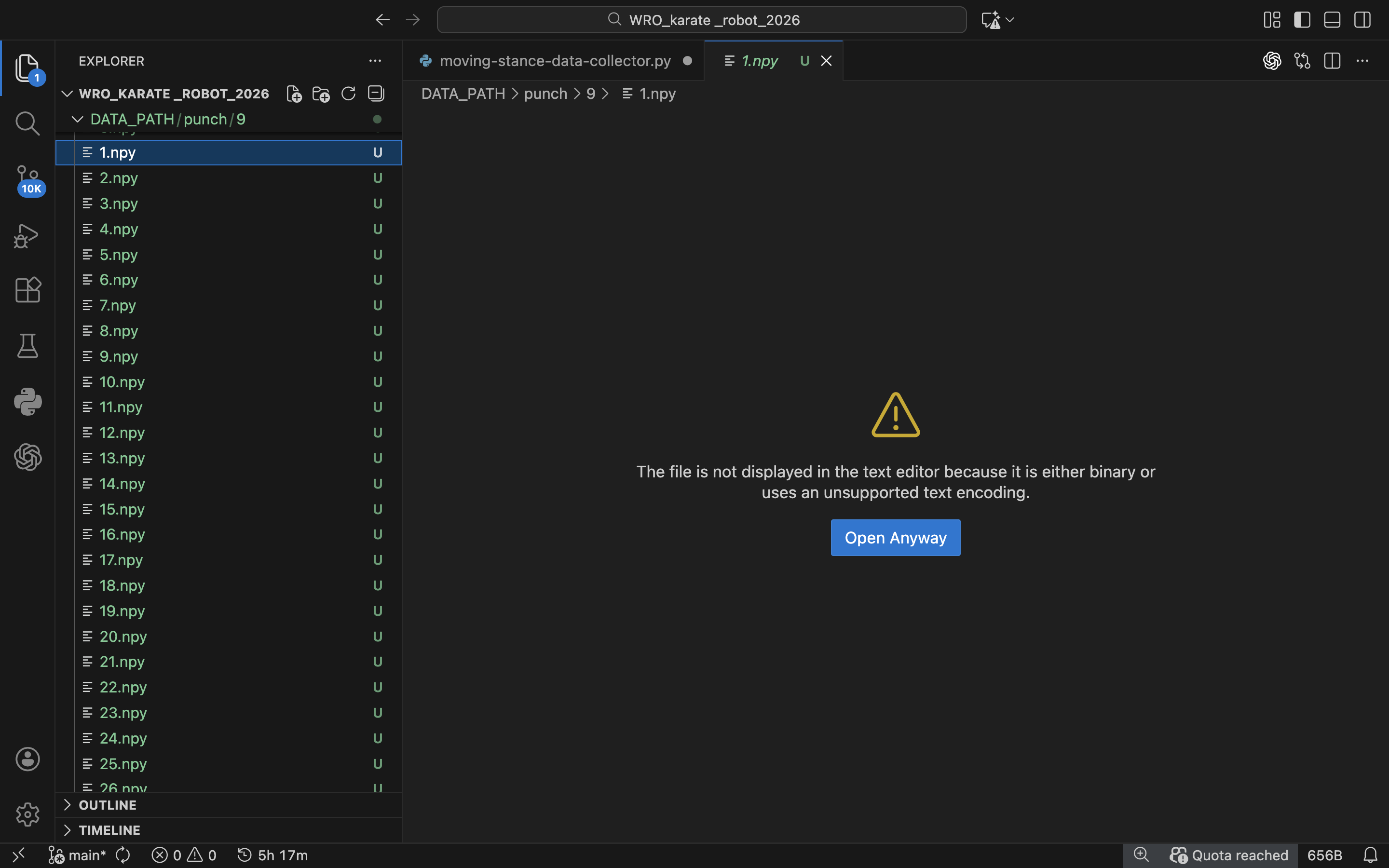

(This is my progress at the moment.)

In the future, I hope to add hardware to it, for example sensors and a camera that moves so that it can keep detecting your movements as you move around. Force sensors in your shoes, together with movement and acceleration sensors, would let it detect your positioning more accurately. Additionally, I will train my own model on data collected from experts for higher accuracy.