1 devlog

20m 10s

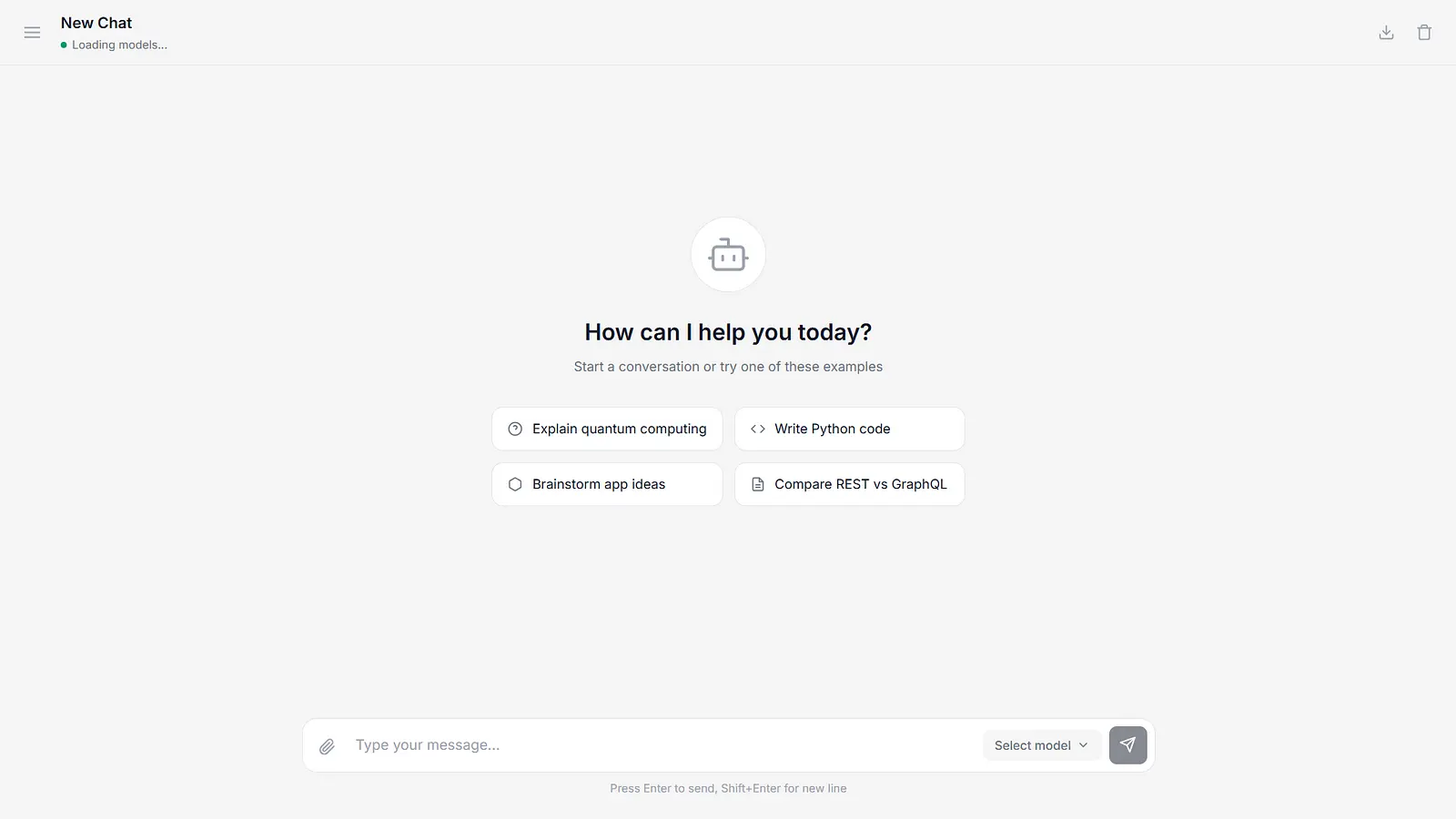

Interactive browser-based LLM chat with model selection, Markdown rendering, media pop-ups, chat history, and copy and regenerate actions. It runs entirely in your browser and stores its data in localStorage, so no backend is needed. Click to try it instantly.