I’ve shipped v0.6.1 and v0.6.2, both patch releases. Across the two, I’ve made many small back-end improvements, including bot detection (on Vercel), better error handling, and API health checks, plus plenty more.

Full changelog:

[0.6.2] - 2026-01-27

Fixed

- Improved error handling and added API health endpoint to better catch when the API is down

- Improved caching and model context handling by moving the current time from user prompt to system prompt

- Added models (Kimi K2.5, GLM 4.7 Flash)

- Replaced Gemini 2.5 Flash with GLM 4.7 Flash for conversation title generation

- Changed the default LLM from Kimi K2 to Kimi K2.5

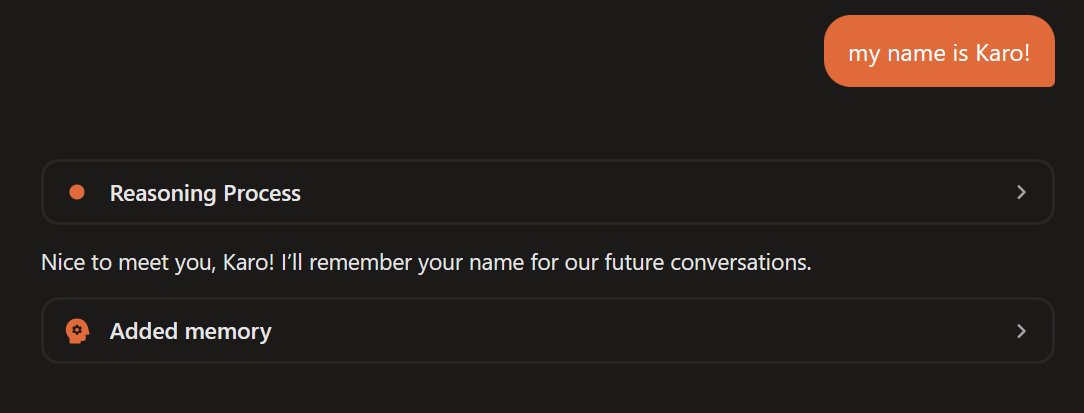

- Improved memory tool reliability

- Polished system prompt to better instruct model to use memory tools
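
For anyone curious what an API health endpoint like the one added in 0.6.2 might compute, here is a minimal sketch. It is not the actual implementation: the `HealthReport` type, the `buildHealthReport` function, and the dependency names are all hypothetical, and it assumes the endpoint simply aggregates a few boolean dependency checks into a status and an HTTP code.

```typescript
// Hypothetical shape of a health report; field names are illustrative only.
type HealthReport = {
  status: "ok" | "degraded";
  httpStatus: 200 | 503;
  failing: string[];
};

// Combine named dependency checks (true = healthy) into a single report.
// Any failing check marks the whole API as degraded and maps to HTTP 503.
function buildHealthReport(checks: Record<string, boolean>): HealthReport {
  const failing = Object.entries(checks)
    .filter(([, healthy]) => !healthy)
    .map(([name]) => name)
    .sort();
  const ok = failing.length === 0;
  return {
    status: ok ? "ok" : "degraded",
    httpStatus: ok ? 200 : 503,
    failing,
  };
}

// Example usage with made-up dependency names.
const report = buildHealthReport({ database: true, llmProvider: false });
console.log(report.status, report.httpStatus, report.failing);
```

Returning 503 when any dependency fails lets an uptime monitor or the front end detect an outage from the status code alone, without parsing the body.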

[0.6.1] - 2026-01-25

Fixed

- Added models (Grok 4.1 Fast, MiniMax M2.1, GPT-5.2, Gemini 3 Pro Preview)
