An on-device SLM for Moments, an open-source journal app I’m currently making.

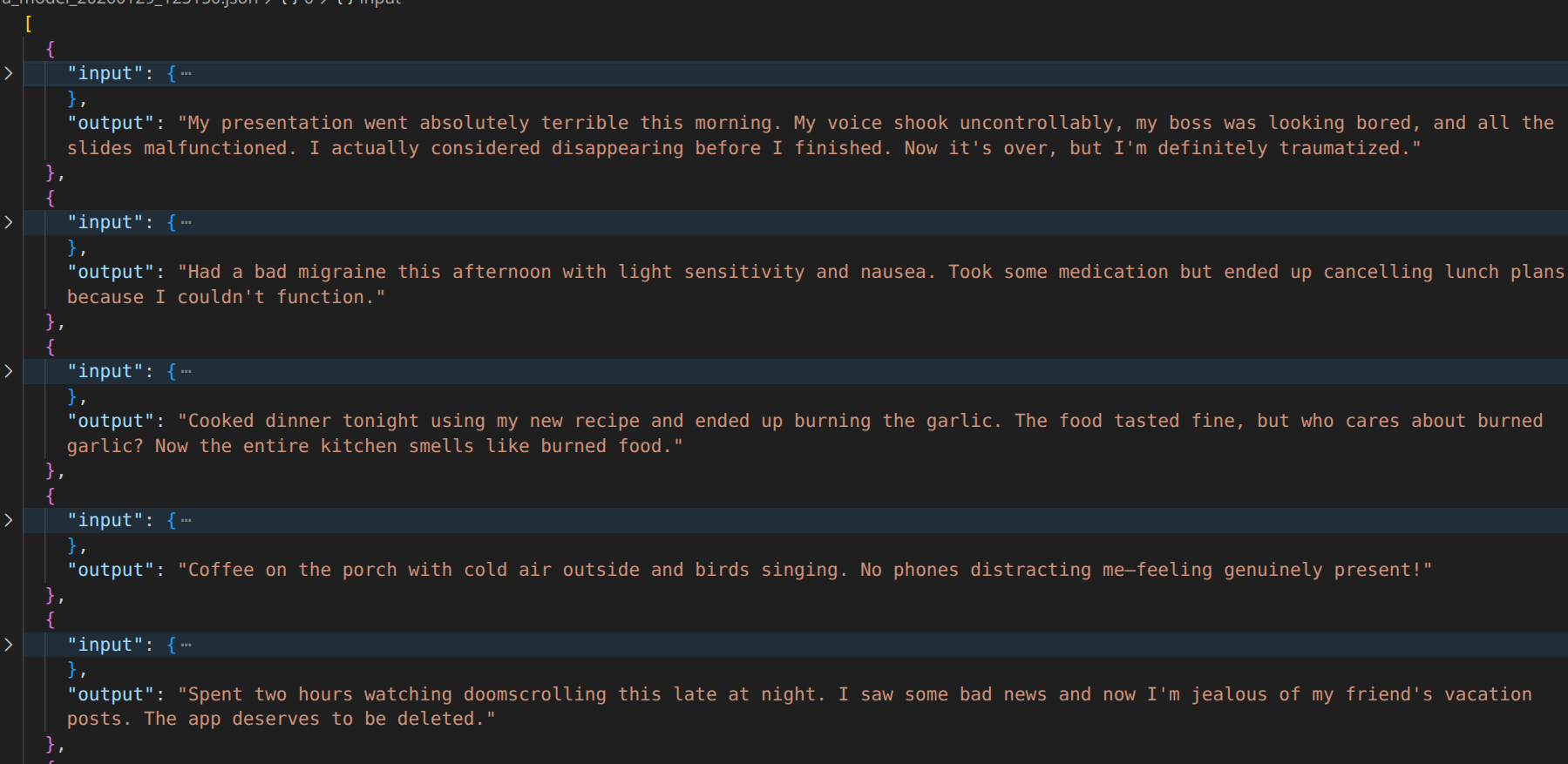

I’ve recovered, remade the dataset, and re-fine-tuned. The result is the best-performing model so far.

The model now generates actual English sentences instead of gluing stuff together with commas and the word ‘and’. I’m now working on making it hallucinate less.

I hate my life.

I’ve tried a bunch of things in the past 2 hours, but I still can’t stop it from hallucinating random things that aren’t present in the input.

I’ve removed the required JSON output structure, so the model only needs to output the final entry (and uses two-pass generation for things like title and mood). I’ve also programmatically converted the input into actual sentences in the hope that it would somehow perform better. It did, but only minimally, and the issue still persists.
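To make the two ideas above concrete, here is a minimal sketch of turning structured input into sentences and then generating in separate passes. Everything named here is an assumption for illustration: the input keys (`did`, `felt`, `where`), the sentence templates, the prompt wording, and the `generate` callable, which stands in for whatever actually runs the model. It is not the app's real code.

```python
from typing import Callable, Dict

# Hypothetical templates: one plain sentence per kind of note.
TEMPLATES = {
    "did": "I {v}.",
    "felt": "I felt {v}.",
    "where": "I was at {v}.",
}


def facts_to_sentences(facts: Dict[str, str]) -> str:
    """Convert key/value notes into plain sentences, in input order."""
    sentences = []
    for key, value in facts.items():
        cleaned = value.strip().rstrip(".")
        # Unknown keys fall back to the raw value as its own sentence.
        template = TEMPLATES.get(key, "{v}.")
        sentences.append(template.format(v=cleaned))
    return " ".join(sentences)


def write_entry(facts: Dict[str, str], generate: Callable[[str], str]) -> Dict[str, str]:
    """First pass writes the entry; follow-up passes derive title and mood from it."""
    notes = facts_to_sentences(facts)
    entry = generate(
        "Write a short journal entry using only these notes:\n" + notes
    ).strip()
    # The later passes only see the finished entry, so no JSON structure is needed.
    title = generate("Give a short title for this journal entry:\n" + entry).strip()
    mood = generate("Describe the mood of this journal entry in one word:\n" + entry).strip()
    return {"entry": entry, "title": title, "mood": mood}
```

Keeping each pass to a single plain-text answer means a small model never has to produce valid JSON, at the cost of extra model calls per entry.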

I gave up and just used Qwen3-0.6B without any fine-tuning. It easily outperforms my 14 hours of effort. It even cut my parameter count (and thus app size) by a whopping 600MB, wtf?

bruh, I’m overcomplicating things. I always am.

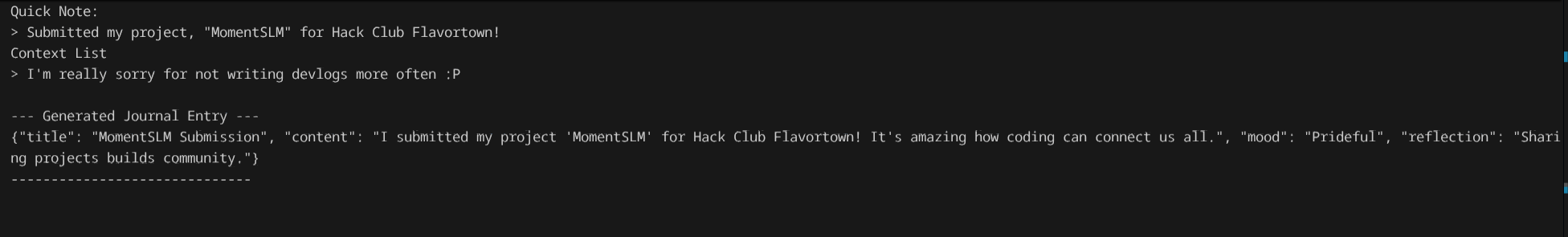

Hi! I’m sorry for logging 15 hours of work. Putting this into flavortown was really a last-minute decision. I’ll try my best to make up for it by writing the whole devlog here.

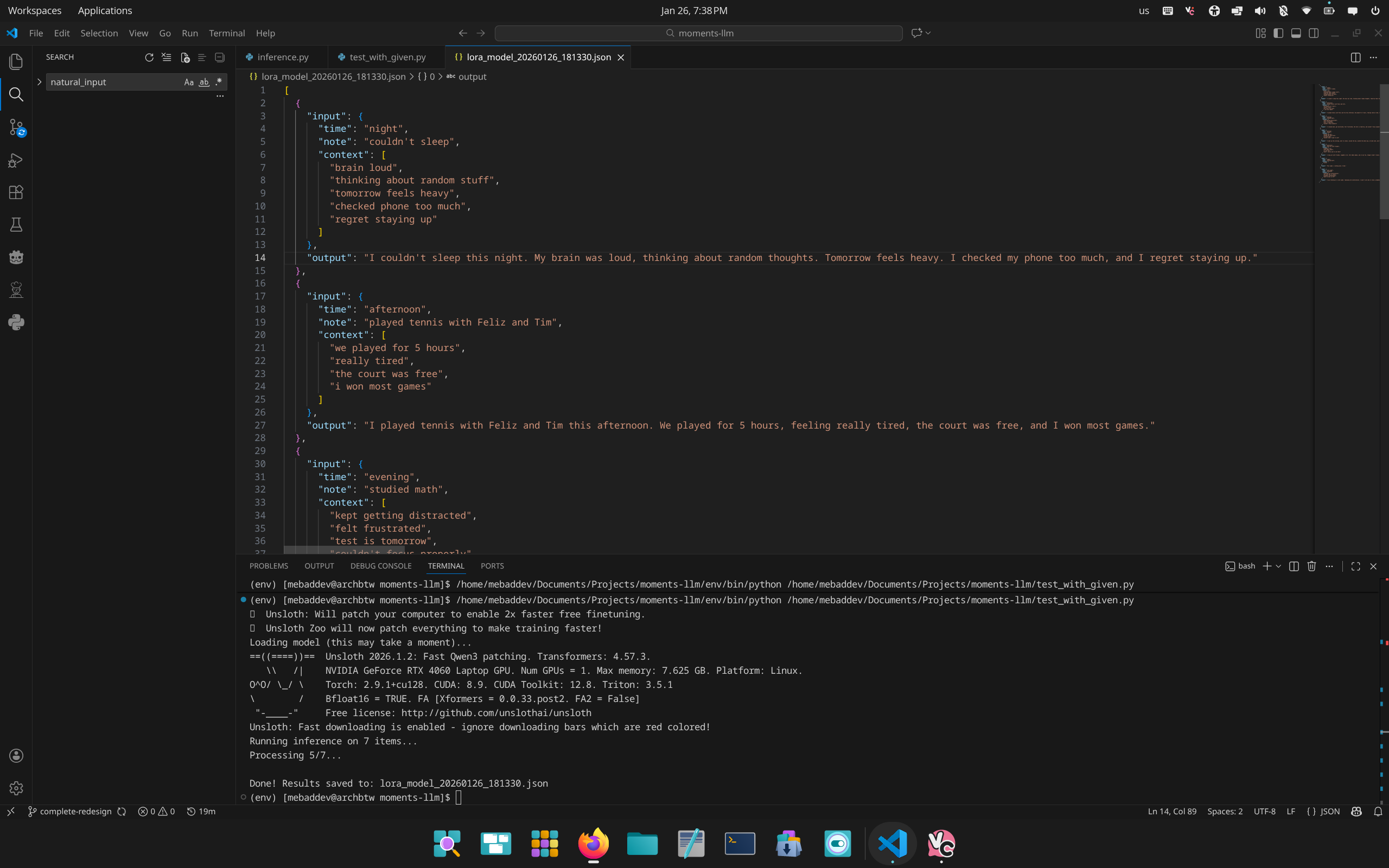

MomentSLM is an on-device Small Language Model that powers my ‘write the bare minimum’ journal app, “Moment”. Under the hood, it’s a fine-tuned version of LFM-1.2B-instruct trained on my own custom dataset. It’s really simple, but it took a lot of time because there are simply too many things I can change. For example:

- Base model
  - Tried: Qwen3-0.6B, Qwen3-1.7B, LFM2.5-1.2B-Base, LFM-2.5-1.2B-Instruct
- Temperature
- Prompt
- Dataset
  - Refined 6 times
- Other hyperparameters
  - For example, repeat penalty
  - Top P
  - Top K
- Input/Output format
  - Ok, this one is on me, but I’ve tried 3 different formats, and rewrote the dataset 3 times.
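As a rough illustration of what the sampling settings in this list do, here is a minimal pure-Python sketch of repeat penalty, temperature, top-k, and top-p applied to a single token-selection step. The function names, the default values, the toy logits, and the order of the steps are all assumptions for illustration; real runtimes differ in the details, and this is not necessarily how the runtime behind MomentSLM does it.

```python
import math
import random
from typing import Dict, Iterable


def filter_candidates(
    logits: Dict[str, float],
    history: Iterable[str] = (),
    temperature: float = 0.7,
    top_k: int = 40,
    top_p: float = 0.9,
    repeat_penalty: float = 1.1,
) -> Dict[str, float]:
    """Return the renormalised probabilities of the tokens that survive filtering."""
    seen = set(history)
    scaled = {}
    for token, logit in logits.items():
        # Repeat penalty: make already-generated tokens less attractive.
        if token in seen:
            logit = logit / repeat_penalty if logit > 0 else logit * repeat_penalty
        # Temperature: values below 1 sharpen the distribution, above 1 flatten it.
        scaled[token] = logit / temperature

    # Top-k: keep only the k highest-scoring tokens.
    ranked = sorted(scaled.items(), key=lambda item: item[1], reverse=True)[:top_k]

    # Softmax over the survivors (subtracting the peak for numerical stability).
    peak = ranked[0][1]
    weights = [(token, math.exp(score - peak)) for token, score in ranked]
    total = sum(weight for _, weight in weights)

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept = {}
    cumulative = 0.0
    for token, weight in weights:
        prob = weight / total
        kept[token] = prob
        cumulative += prob
        if cumulative >= top_p:
            break

    norm = sum(kept.values())
    return {token: prob / norm for token, prob in kept.items()}


def sample_token(probs: Dict[str, float], rng: random.Random) -> str:
    """Draw one token according to the filtered probabilities."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Toy example: made-up logits for four candidate next tokens.
toy_logits = {"cat": 2.0, "dog": 1.0, "shoe": 0.0, "the": -1.0}
candidates = filter_candidates(toy_logits, history=["cat"])
print(candidates)
print(sample_token(candidates, random.Random(0)))
```

Lower temperature, smaller top-k, and smaller top-p all narrow the set of tokens the model can pick from, trading variety for predictability.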

Every time one of these properties changes, I try everything again to find the optimal settings. Combine that with 8 minutes per fine-tune, and I’ve spent quite a lot of time on this project.
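To get a feel for how quickly this adds up, here is a small back-of-the-envelope sketch. It assumes the counts mentioned in the list (4 base models, 6 dataset versions, 3 input/output formats) are independent axes that each need a fresh fine-tune, which is a simplification and not a record of the runs that were actually done. The `dataset-v*` and `format-*` labels are placeholders.

```python
from itertools import product
from typing import Sequence, Tuple

MINUTES_PER_FINE_TUNE = 8  # figure quoted in the devlog

BASE_MODELS = ["Qwen3-0.6B", "Qwen3-1.7B", "LFM2.5-1.2B-Base", "LFM-2.5-1.2B-Instruct"]
DATASET_VERSIONS = [f"dataset-v{i}" for i in range(1, 7)]  # placeholder labels
IO_FORMATS = [f"format-{i}" for i in range(1, 4)]          # placeholder labels


def full_grid(axes: Sequence[Sequence[str]],
              minutes_per_run: int = MINUTES_PER_FINE_TUNE) -> Tuple[int, int]:
    """Runs and minutes needed to fine-tune every combination of every axis."""
    runs = sum(1 for _ in product(*axes))
    return runs, runs * minutes_per_run


def one_at_a_time(axes: Sequence[Sequence[str]],
                  minutes_per_run: int = MINUTES_PER_FINE_TUNE) -> Tuple[int, int]:
    """Runs and minutes for one baseline plus one change per alternative value."""
    runs = 1 + sum(len(axis) - 1 for axis in axes)
    return runs, runs * minutes_per_run


axes = [BASE_MODELS, DATASET_VERSIONS, IO_FORMATS]
for name, strategy in [("full grid", full_grid), ("one at a time", one_at_a_time)]:
    runs, minutes = strategy(axes)
    print(f"{name}: {runs} fine-tunes, {minutes} min ({minutes / 60:.1f} h)")
```

Under these assumptions the exhaustive grid comes to 72 fine-tunes (576 minutes, about 9.6 hours), while changing one thing at a time needs 11 (88 minutes). Sampling settings such as temperature, top-k, and top-p only affect inference, so they can be swept without another fine-tune.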

It’s not an easy task. Being a small language model, MomentSLM has some trouble understanding the context of my input, and thus generates some quite interesting outputs. For instance:

- I ran on the path barefoot, wearing my old shoes and stepping through a mist of dust (you can’t be barefoot while wearing shoes)

- The soft fur of the cat made me fall asleep. I watched the television and purred, curled up on the couch for a nap. (Last time I checked, I’m not a cat so this doesn’t make any sense)

- Trying to cook something for dinner and it’s going to be burnt food. It’s a bit disappointing, but I’m trying to stay positive. (I don’t think I have to explain this one)

While it’s not perfect, I’m quite happy with its current status, and I’ll write more devlogs here once I’ve managed to get it working better.
