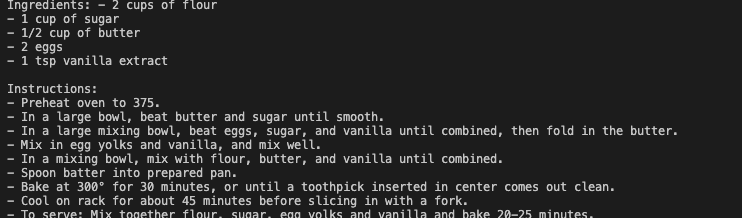

This project uses the DistilGPT2 tokenizer and a generic Transformer-based architecture, trained on a portion of a Hugging Face dataset (corbt/all-recipes), to build an 83M-parameter generative model. Use it to find your next great recipe (1% of the time) or your next disaster (99% of the time… hey, I don't have any H100s). Disclaimer: you probably shouldn't take these recipes seriously unless you're absolutely sure they're safe.
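For a rough sense of where a figure like 83M comes from, here is a parameter count for a GPT-2-style decoder-only Transformer. The configuration below (6 layers, 768-wide, 1024-token context, input and output embeddings tied) is a hypothetical choice for illustration, not necessarily the settings this project used; only the 50,257-token vocabulary comes from the GPT-2 BPE tokenizer that DistilGPT2 shares.

```python
from dataclasses import dataclass


@dataclass
class GPTConfig:
    # Hypothetical settings: the project only states "83M parameters".
    vocab_size: int = 50257   # GPT-2 / DistilGPT2 BPE vocabulary size
    context_len: int = 1024   # number of learned positional embeddings
    d_model: int = 768
    n_layers: int = 6
    d_ff: int = 3072          # MLP width, 4 * d_model


def count_params(cfg: GPTConfig, tied_head: bool = True) -> int:
    """Count weights and biases in a GPT-2-style decoder-only Transformer."""
    d = cfg.d_model
    embeddings = cfg.vocab_size * d + cfg.context_len * d
    attn = (d * 3 * d + 3 * d) + (d * d + d)              # fused Q/K/V projection + output projection
    mlp = (d * cfg.d_ff + cfg.d_ff) + (cfg.d_ff * d + d)  # up- and down-projection
    norms = 2 * (2 * d)                                    # two LayerNorms per block (scale + shift)
    per_layer = attn + mlp + norms
    total = embeddings + cfg.n_layers * per_layer + 2 * d  # plus the final LayerNorm
    if not tied_head:
        total += cfg.vocab_size * d                        # separate projection back to the vocabulary
    return total


n = count_params(GPTConfig())
print(f"{n:,} parameters, ~{n * 4 / 1e6:.0f} MB as float32")
# prints: 81,912,576 parameters, ~328 MB as float32
```

With these assumed settings the count lands near 82M, and at 4 bytes per float32 weight the raw weights come to roughly 328 MB, the same ballpark as a ~300 MB checkpoint. Untying the output head would add another ~38.6M parameters, so tying is one plausible way to stay in the low-80M range.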

2 devlogs

2h 25m 37s

Ran it locally with the Colab-trained model, so I can at least publish it.


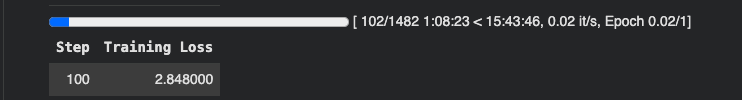

Created the model in Google Colab, complementing my existing efforts. Access to a T4 GPU was nice… until it ran out. Now I have a 300 MB .pth file that is probably corrupted (my internet is slow, so the file may have been truncated somewhere along the way). Next time I'll train locally on an M1 Pro. Anyhow, I can probably deploy a working model tomorrow.
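One way to tell whether a checkpoint was truncated in transit is to hash it on both ends and, since `torch.save` writes a zip archive by default in PyTorch 1.6 and later, to check that the archive is complete. The sketch below uses only the Python standard library; the function names `sha256_of` and `looks_intact` are hypothetical, and it assumes the .pth was saved in that default zip-based format.

```python
import hashlib
import zipfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so a ~300 MB checkpoint never sits fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def looks_intact(path: Path) -> bool:
    """Return True if the file is a complete zip archive whose member CRCs all match.

    Assumes a torch.save checkpoint in the default zip format. A truncated
    download loses the zip's end-of-central-directory record at the end of
    the file, so is_zipfile() already fails in that case.
    """
    if not zipfile.is_zipfile(path):
        return False
    try:
        with zipfile.ZipFile(path) as zf:
            # testzip() returns the name of the first corrupt member, or None if all are fine
            return zf.testzip() is None
    except (zipfile.BadZipFile, OSError, EOFError):
        return False
```

Running `sha256_of` in Colab before downloading and again on the local copy gives a direct comparison: if the digests differ, or `looks_intact` returns False, re-downloading is a better bet than trying to load the file.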
